Welcome to Agent Angle #29: The Edge Case.

As I research and write about these stories on the frontier of AI, I’ve had a constant sense that I haven't been using AI to its full potential in my own life. It gives me anxiety, which I haven’t fully resolved. What a time to be living in.

This week didn’t come with a single headline moment. Instead, agents showed up in places where outcomes matter and did the work better than we usually expect.

Out-Iterated: An AI agent beats 800 elite programmers in a contest

The SaaS Line Breaks: One of SaaS’s most influential voices stops hiring humans

Healthcare finds its real leverage with agents

In last week’s poll, 41% of you saw Manus becoming a core layer inside WhatsApp and Instagram. 35% are expecting a quieter ending: folded into Meta and no longer its own thing. We’ll check back in a year to see how well our community prediction holds up!

Let’s get into it.

#1 My SaaS Prediction Plays Out

"We're done with hiring humans. We're going to push the limits with agents."

A few weeks ago, I wrote about how the end of SaaS was nearing once agents got good enough. This week, that prediction got more real. Jason Lemkin said he’s done hiring humans for sales.

If you don’t follow SaaS closely, Lemkin sits close to the center of it. He runs SaaStr, the biggest B2B software community on the planet. He’s around founders who are scaling companies for a living. When he changes how his own operation works, it often foreshadows what others will copy next.

What surprised me was how fast this happened.

Not long ago, SaaStr still had a mostly human go-to-market team. Then, around the annual SaaStr conference, two senior sales reps quit. Instead of replacing them, Lemkin made a call: stop hiring humans for sales and push agents as far as they can go.

This isn’t happening in isolation either. Around the same time, OpenAI started asking contractors to upload real work from past jobs so agents could be evaluated against actual office tasks. The industry is clearly stress-testing agents against real jobs, not demos.

Today, SaaStr is running about 20 AI agents doing the work that used to be handled by roughly 10 sales reps and account executives. They’ve even relabeled the office desks with agent names: “Quali for qualified,” “Arty for artisan,” and “Repli for Replit.” (An engineer definitely named these.)

Lemkin’s explanation is blunt, and it’s hard to argue with. A strong sales rep costs around $150K a year and might leave. An agent can be trained on your best seller, works nonstop, and doesn’t quit. Once you frame it that way, the decision seems obvious.

When someone like Jason decides humans are the riskier hire, it’s a signal.

#2 The New Turing Test

For decades, the Turing Test shaped how we talked about intelligence. If a machine could hold a conversation well enough to fool a human, it was a major milestone towards AGI.

This week, Microsoft AI’s CEO Mustafa Suleyman proposed a much sharper test.

Give an AI agent $100,000 and see if it can legally turn it into $1 million on its own.

Not many people know this, but Suleyman actually floated this idea in a blog post back in 2023. At the time, it felt academic. Agents weren’t ready, and it sounded more philosophical than practical.

That’s changed. Today’s agents can hold goals, call tools, write code, and operate for long stretches without falling apart. The thought experiment suddenly feels close.

People are already fighting about it, which is usually a good sign. Some think this is the only benchmark that matters. If an agent can allocate capital in the real world and win, what else is there to prove?

Others hate it. If everyone has access to the same agent, returns collapse. Or it just turns into a contest of luck, financial loopholes, and even outright grifting.

Source: Reddit

Someone even joked that we’re measuring the wrong thing entirely, and that instead of AGI we should be testing for “Artificial Joy Intelligence.” How much happiness can a model actually create? Money apparently is a pretty sad proxy lol.

I get why Suleyman likes this test. It’s concrete and hard to fake. And it forces agents out of demos and into the harsh real world.

But I don’t buy money as the definition of intelligence. An agent that turns $100K into $1M might be broadly capable…or more likely, it might just be good at one narrow game. So I see this as just another checkpoint in our tech tree towards AGI. Useful and revealing, but not the finish line.

The AI Debate: Your View

Is making $$$ a great proxy for intelligence?

#3 An Agent Beat 800 Humans

For the first time ever, an AI agent took 1st place at the AtCoder Heuristic Contest.

Last week, Sakana AI entered their agent into the four-hour contest where 800+ elite human programmers competed on a single optimization problem. Let me explain why this one stuck with me.

Source: Sakana AI

AtCoder’s heuristic contests don’t have a “right” answer. You write a program, run it, look at the score, tweak it, and repeat until time runs out. This format usually favors experienced humans who know how to nurse a solution for hours and keep improving it.

The problem itself was a factory planning game. You had to decide which machines to build, which machines could build other machines, and when to upgrade everything so output kept compounding. Bad early choices snowball quickly in the wrong direction.

About halfway through the contest, the agent jumped to the top of the leaderboard and never gave it back.

It found an angle most people missed. It started treating future machines as more valuable much earlier than standard strategies would justify. That changed every downstream decision. The gains stacked. Other approaches stalled.

Reading the logs, the pattern is almost boring: try something, test it, keep what works, delete what doesn’t. Repeat. The agent did that loop thousands of times without getting tired, frustrated, or attached to a clever idea.
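That loop is essentially hill climbing. Here is a minimal Python sketch of the pattern, not Sakana AI's actual agent: `hill_climb`, `score`, and `mutate` are hypothetical names, and the toy problem below only stands in for the real contest task.

```python
import random


def hill_climb(score, mutate, initial, iterations, seed=0):
    """Try a change, test it, keep it only if the score improves."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    best = initial
    best_score = score(best)
    for _ in range(iterations):
        candidate = mutate(best, rng)        # try something
        candidate_score = score(candidate)   # test it
        if candidate_score > best_score:     # keep what works
            best, best_score = candidate, candidate_score
        # otherwise the candidate is simply dropped
    return best, best_score


# Toy stand-in for a contest problem: find the integer closest to 3.
def toy_score(x):
    return -(x - 3) ** 2


def toy_mutate(x, rng):
    return x + rng.choice([-1, 1])


best, best_score = hill_climb(toy_score, toy_mutate, initial=0, iterations=200)
print(best, best_score)
```

Because a candidate is only kept when it scores higher, extra iterations can never make the result worse. That is why the iteration budget matters so much in this format.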

AHC is one of the toughest optimization contests around. At last year’s world championship, even an OpenAI agent only reached 2nd place (humans still kept the crown… until now).

These contests measure something we don’t talk about enough: how expensive patience is. Humans pay with attention and fatigue. Machines pay with money. Four hours of that kind of experimentation is draining for a human. For the agent, it cost ~$1,300, or about $325 an hour.

When the rules reward endless iteration, whoever can afford more patience usually wins.

#4 Doctors Aren’t the Bottleneck

I spent 7 years in healthcare in my previous career, and I don’t say this lightly: it’s probably the most inefficient industry on the planet.

The bottleneck is rarely doctors or nurses. It’s the system around them: scheduling, follow-ups, documentation that eats hours, staffing gaps, messy handoffs.

That’s why I keep coming back to agents in healthcare, even though I’m cautious about “AI doctor” hype. There’s huge leverage in fixing the work that decides whether care actually happens.

This week, Cera, Europe’s largest home care provider, rolled out a suite of AI agents across its 10,000-carer workforce and plans to deploy nearly 1,000 agents internally.

Source: Cera

Cera’s agent lineup tells you exactly where the pain is.

AI Care Coordinator Agent: Automates last-minute staff cover, cutting time spent on coordination.

Field Care Supervisor Agent: Pulls together visit and clinical info so supervisors can spot issues without digging through a pile of notes.

AI Retention Agent: Tries to catch staff who are about to quit, which is either supportive… or creepy… depending on how it’s used.

“Our AI agents remove paperwork so carers can get back to caring, and accelerate recruitment so patients get better care faster.”

Keep the agent outside the exam room. Let it do coordination and admin. Give nurses and carers more minutes with patients, not more tabs to click.

This also builds on Cera’s earlier AI work, including predictive models that have cut hospitalizations by ~70% and patient falls by ~20%, while helping ease pressure on the NHS through faster discharges.

The part I think people miss is that healthcare outcomes hinge on operations as much as on “genius medicine” (as idolized in medical dramas like House). Home care is a logistics problem. If agents can make the care visit more dependable, that’s when patients feel the difference.

#5 1,000 Games. One Agent

Games are hard for agents for the same reason the real world is. You can’t just memorize rules. You have to move, react, recover from mistakes, and keep going when things don’t work.

That’s the problem NitroGen is aimed at. Researchers at NVIDIA showed a way to train a single agent across 1,000+ different games without building custom simulators or hand-labeling actions.

Source: NitroGen

Instead of instrumenting game engines, NitroGen learns by watching people play (also how most of us learn a game).

An AI system tracks controller inputs directly on screen and extracts player actions from roughly 40,000 hours of real gameplay footage.

Source: NitroGen

When dropped into a brand new game it’s never seen before, NitroGen’s success rate jumped by up to 52% compared to agents trained from scratch. The biggest improvement shows up right at the start, where agents usually fail.

My first instinct was to put this in the usual “AI learns games” bucket. We’ve seen that story before with DeepMind’s SIMA, which focused on learning what to do across virtual worlds by following high-level demonstrations.

So I had the same question most people will: what’s actually new here?

NitroGen is after something different. It’s much more physics-first. Instead of learning intent or task semantics, the agent learns how to act when the environment pushes back. Movement, timing, recovery, coordination. The low-level interactions that break agents before goals even come into play.

The breakthrough here is not just generalization across games. It’s showing that control itself can be pretrained from videos on the internet.

I’ve lost count of how many times I give an agent a task, watch it make real progress, start to feel good about it… and then it stalls.

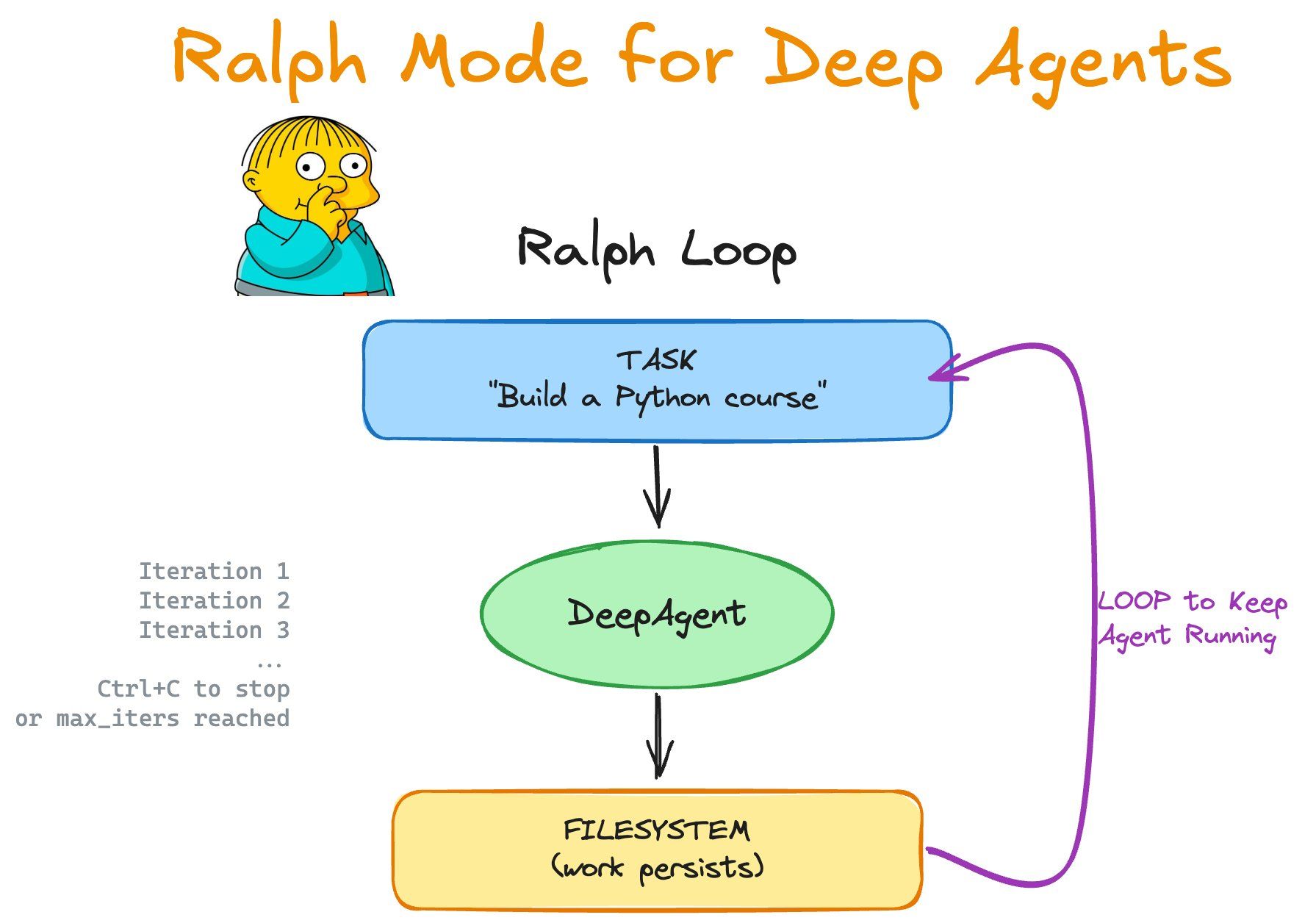

LangChain just shipped something that avoids this failure mode entirely. It’s called Ralph Mode. It’s similar to Claude Code for building software, but feels more like assigning a project, then leaving the room.

Source: GitHub

Ralph Mode runs an agent in a tight loop with fresh context every iteration. Instead of stuffing memory into the prompt, it uses the filesystem and git as the source of truth. You start it, walk away, and stop it when you’re done.

Examples of how you could use it:

# Unlimited iterations (Ctrl+C to stop)

python ralph_mode.py "Build a Python course"

# With iteration limit

python ralph_mode.py "Build a REST API" --iterations 5

# With specific model

python ralph_mode.py "Create a CLI tool" --model claude-haiku-4-5-20251001

This is the simplest way I’ve seen to keep an agent moving without babysitting it.
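To make the idea concrete, here is a rough Python sketch of that kind of loop: fresh context on every iteration, with state kept on disk. This is not LangChain's code. `ralph_loop`, `demo_step`, `run_demo`, and `NOTES.md` are hypothetical names, the model call is replaced by a stub, and the git commit a real setup would make after each iteration is left out to keep the sketch dependency-free.

```python
import tempfile
from pathlib import Path


def ralph_loop(task, workdir, step, max_iterations=None):
    """Call `step` repeatedly; the only state shared between calls is on disk."""
    iteration = 0
    # With max_iterations=None this runs until interrupted (e.g. Ctrl+C).
    while max_iterations is None or iteration < max_iterations:
        iteration += 1
        # Fresh context: each call gets just the task and the working directory.
        step(task, workdir, iteration)
    return iteration


def demo_step(task, workdir, iteration):
    """Stub for a model call: reads earlier notes from disk, then appends one line."""
    notes = workdir / "NOTES.md"
    previous = notes.read_text() if notes.exists() else ""
    notes.write_text(previous + f"iteration {iteration}: worked on {task}\n")


def run_demo(iterations=3):
    """Run the stub in a throwaway directory and return the notes it left behind."""
    with tempfile.TemporaryDirectory() as tmp:
        workdir = Path(tmp)
        ralph_loop("Build a REST API", workdir, demo_step, max_iterations=iterations)
        return (workdir / "NOTES.md").read_text().splitlines()


print(run_demo())
```

The point of the design is that nothing lives in the prompt between iterations. If a step goes wrong, the files on disk still tell the next step exactly where things stand.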

Catch you next week ✌️

Teng Yan & Ayan

P.S. Know a builder or investor who’s too busy to track the agent space but too smart to miss the trends? Forward this to them. You’re helping us build the smartest agentic community on the web.

I also write a newsletter on decentralized AI and robotics at Chainofthought.xyz.