Decart’s bet: Make interactive simulation/world models cheap enough to serve in real time, then stack products on top of them.

In a Tel Aviv office, a grainy photo hangs on the wall. It shows an early-stage investor who told Decart's founders that they were attempting the impossible. "Israelis don't know how to train models," she said.

She meant it as a deal-breaker. Decart’s founders took it as a challenge. They trained the models anyway. This year, they hit a $3.1 billion valuation and started getting shout-outs from Elon Musk.

She was right about the hard part. But that’s the whole point. Difficulty became their moat.

This is what Decart figured out first: the next wave of digital worlds won’t come pre-scripted inside a game engine. It will be simulated, frame by frame, by models that learn physics, causality, and player behavior.

Glitch in the God Machine

There’s a seductive idea in philosophy: we’re not living at the base layer of reality. Your morning coffee, your student loans, that recurring dream where you’re late for a test. All of it is just data rendered by an incomprehensibly powerful computer.

Philosopher Nick Bostrom put it cleanly: If a civilization ever becomes capable of building realistic ancestor simulations, they won’t stop at one. They’ll spin up billions. For science, for nostalgia, for fun.

If billions of simulated civilizations exist, but only one “base” biological civilization exists, then almost all conscious observers with our experiences would be living in simulations rather than the original reality. Statistically, that makes us almost certainly a copy. We could be version 2,700,000 of someone’s post-human thesis project.

The sales pitch for this future of simulations is, I admit, pretty sweet. You could upload your mind and just... not die. Edit out death, pain, and bad haircuts. Build cities that exist just for you and feel the wind that was coded into existence a millisecond ago. It’s the ultimate techno-utopia.

But then comes the compute bill. Creating a universe that feels free, infinite, and responsive is, for now, one of the most expensive and physically constrained endeavours in human history. A responsive world must operate in real-time. At 24 frames per second, one user needs 1,440 predicted frames per minute, each one computed from state and input.

On today’s H100-class hardware, that lands near $0.08 per user-minute at 720p once you add bandwidth. Two hours a day in the simulation pushes a single user toward hundreds of dollars a month. No consumer will pay it.

We call this the Simulation Tax. Cut it by an order of magnitude, and new products appear. This is what Decart does best.

From Parrots to Physics Engines

While everyone was throwing money at large language models, Decart took a left turn into uncharted territory.

What they figured out—and what their early investor completely missed—is that generative AI has advanced through a chain of “holy s*#t” moments. First, machines mimicked our words. Then they made images from prompts. Then stitched frames into 10-second clips.

We went from: “Write me a poem about a bird.”

To: “Show me a bird.”

To: “Show me a bird flying on Mars.”

To: “Here’s a bird. What happens if I give it a seed?”

That last question demands causality. The system must carry state across time, apply simple physics, and keep identities stable while scenes evolve. It requires a short-term memory for motion, a longer-term memory for goals, and a mechanism to update beliefs after an action. This is simulation. The prize is learned consequence, not prettier frames.

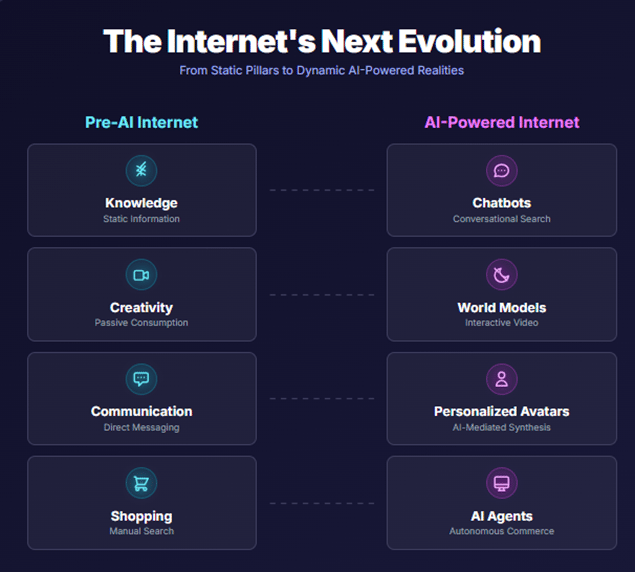

Decart’s founders frame it this way: the old internet rested on four primitives of Knowledge, Creativity, Communication, and Shopping. LLMs have claimed Knowledge. The next frontier is Creativity, because play and leisure become programmable once machines can model consequences.

The End of the Pre-Written World

Every world we’ve built so far, from Gilgamesh to GTA VI, has been authored. Even so-called “open world” games like No Man’s Sky eventually repeat. The terrain may change, but the logic stays fixed. You can choose a mission. You can’t convince a guard to quit and become a poet.

That limitation exists because building not just content but the logic of interaction is hard. Bringing the cost of dynamic experience close to zero remains one of computing’s unsolved challenges.

World models are a break from traditional open world games. They compute a world, frame by frame. Nothing exists until you observe it. Touch it and it reacts. Ignore it and it continues.

These actions have consequences not because some developer coded an "if-then" statement, but because the system has developed a general sense of how reality is supposed to work. Here, a guard doesn’t repeat a line. He grasps what guarding is. He might wander. Fall for another NPC. Start a side story you never see.

At that point, you’re not playing a game. It’s something closer to ethnographic fieldwork where the player studies and influences a simulated society that develops on its own.

What World Models Actually Are

Physics happens without permission.

Gravity pulls.

Energy fades.

Cause leads to effect.

For decades, video games imitated this logic using code. A wall breaks because a developer wrote a rule: if hit, then break.

World models are different. They don’t rely on hardcoded rules or physics engines. Instead, they’ve absorbed millions of hours of gameplay and video. When something that looks like a fist hits something that looks like a tree, the model predicts: this tree probably breaks.

In other words, a world model can predict “if the player does X, the world will do Y”. It has developed an instinct for consequence. This allows it to generate the next frame without a game engine.
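To make the contrast concrete, here is a toy sketch in Python. It is purely illustrative: the names `scripted_outcome` and `CountingWorldModel` are made up for this example, and a frequency table is a stand-in for learning from data, not how a neural world model works internally.

```python
from collections import Counter, defaultdict

def scripted_outcome(obj: str, action: str) -> str:
    """Traditional engine: a developer writes the rule by hand."""
    if obj == "tree" and action == "punch":
        return "breaks"
    return "nothing"

class CountingWorldModel:
    """Toy 'learned' model (hypothetical): it only knows what it has observed."""

    def __init__(self) -> None:
        self.counts = defaultdict(Counter)

    def observe(self, obj: str, action: str, outcome: str) -> None:
        # Record one example of "this happened after that".
        self.counts[(obj, action)][outcome] += 1

    def predict(self, obj: str, action: str):
        # Return the most frequently observed outcome and its share of examples.
        seen = self.counts.get((obj, action))
        if not seen:
            return None, 0.0
        outcome, n = seen.most_common(1)[0]
        return outcome, n / sum(seen.values())

model = CountingWorldModel()
for outcome in ["breaks", "breaks", "breaks", "nothing"]:
    model.observe("tree", "punch", outcome)

print(scripted_outcome("tree", "punch"))  # breaks
print(model.predict("tree", "punch"))     # ('breaks', 0.75)
```

The scripted version is certain because someone decided the answer. The learned version says "probably breaks" because that is what it has seen most often.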

A generative world model could, theoretically, just keep building the world out into infinity. No two play sessions need ever be the same, because the world is being imagined in real-time.

This is quite exciting. We’re on the edge of experiences that used to be impossible. You say something offhand to an NPC, and the storyline bends. The sky darkens, not on a script, but because the tension calls for rain. Or maybe the model gives you a sunset, because it feels like you’ve earned it.

It reminds me of French philosopher Jean Baudrillard’s claim: the more we copy reality, the more we drift from it.

First, we imitate directly (a portrait). Then we improve it (airbrushed photos, movie adaptations). Then we create things with no original (cartoon mascots). Eventually, we build entire systems that only reference other systems. They no longer point back to anything real.

He called this final state hyperreality. A world made of signs pretending to be truth.

The simulacrum is never that which conceals the truth. It is the truth which conceals that there is none.

World models are exactly this. Hyperreality. They invent worlds that behave the way the model thinks they should. Physics feels smoother. Characters act with more consistency. Stories resolve more neatly.

The result is a world that runs better than reality because it’s optimized to be believed. My nagging worry is that as these models improve, we may find ourselves preferring the simulation over the actual experience. Because it’s more coherent.

War, a PhD, and a Butcher Shop

How does a former butcher and a computer science prodigy who nearly failed his first coding class build a $3 billion company in under a year?

Like this 👇

The prodigy is Dean Leitersdorf, who finished high school early, then collected a bachelor's, master's, and a PhD in computer science by 23. His dissertation was named the best PhD in distributed computing worldwide. He did all that while serving in Unit 8200, Israel's elite military intelligence unit.

But he wasn’t always that kid. At 15, newly arrived in Palo Alto, he failed his first computer science exam with a 14 out of 100. His teacher marked him as “sick” to protect his GPA. For someone who had been writing RuneScape bots for years, it was a gut punch, and also the spark of a fire that still burns in him today.

Moshe Shalev’s path was nothing like that. He grew up ultra-Orthodox, worked in a butcher shop, and made a rare move in his community: he joined the military. Thirteen years later, he was chief of staff to the commander of Unit 8200.

Two wildly different lives. One was writing academic papers on distributed systems before he could legally drink. The other learned how to break down meat before learning how to break down networks.

In Israeli military culture, especially in Unit 8200, there’s a doctrine called rosh gadol (“big head”). It means you take full ownership, question assumptions, and solve the problem at its roots. The opposite is rosh katan (“small head”), which means doing only what’s asked, no more.

Decart operates with rosh gadol. They didn’t try to build a slightly better video filter on existing systems. They tackled the underlying roadblock: the high cost and lag of real-time generative media. That’s why they rebuilt the entire infrastructure from scratch.

They met during a chance conversation in a hallway at their military base in 2021.

An 11-year age gap, opposite personalities, but somehow they clicked. By September 2023, they'd officially founded Decart. They spent weeks pitching ideas to each other, including cybersecurity, solar tech, and healthcare. None of it stuck. Shalev was frustrated. Leitersdorf looked bored.

Then war broke out.

They were both called up for reserve duty after October 7. Away from the tech echo chamber, something shifted. When they returned, they didn’t want to build another SaaS tool. They wanted a “kilocorn”—their word for a trillion-dollar-impact company.

Their military background taught them that with the right people, physics becomes optional. Unit 8200 projects fail constantly, but when they work, as Shalev noted, they change how intelligence operates globally.

But this is the part that I personally liked the most. While every ambitious AI company was raising VC funding, Decart did something heretical.

They first sold something they already had.

Leitersdorf’s PhD work included GPU optimization software that made models run faster on Nvidia chips. They sold that to other AI companies. Within three months, they were profitable and closed a multi-million-dollar deal with a GPU cloud provider (rumored to be CoreWeave or Lambda Labs). By late 2024, it was pulling in over $10 million in annualized revenue.

Sequoia’s Shaun Maguire led their $21 million seed round. His reason: “Decart already figured out how to train models profitably. That’s unheard of in this space.”

They stayed in stealth through early 2024, refining their optimization stack while training their own foundation video model.

In August 2025, Decart raised $100 million in Series B funding from Sequoia and Benchmark at a $3.1 billion valuation. Just eleven months in, and they’d tripled unicorn status.

This was the same old Google playbook. AltaVista bought the most expensive servers money could buy. Google figured out how to run search on cheap hardware that should have collapsed under the load. Decart learned how to generate real-time video on hardware that shouldn’t be fast enough, because they understand GPUs to the level of electrons.

Leitersdorf mentions that they keep a 200-page internal document cataloguing every bizarre failure mode that occurs during model training. One training run kept crashing with missing lock file errors. It turned out that the real problem was a completely separate synthetic data process choking their network. You only find that kind of bug if you understand the hardware down to its soul.

That's their real moat.

Their consumer product, Oasis, hit one million users in three days. Faster than ChatGPT’s launch pace. By mid-2025, Decart landed on Forbes’ annual “Next Billion-Dollar Startups” list.

The Product Suite

Decart has four public products and prototypes: Oasis, Mirage, Sidekick, and Delulu.

Oasis

Oasis is a real-time, open-world AI model. Your inputs flow into the model; it predicts the next state of the scene and renders it as video. The team trained it on Minecraft footage, so the model picked up simple regularities: blocks are solid, gravity pulls, fire gives light, water moves downhill. It is not a physics engine; it learned patterns of consequence from data and uses those patterns to advance time.

Most AI video generation forces a choice: either you get perfect quality with zero interactivity (full-sequence diffusion models like Sora) or you get real-time responses that accumulate errors (autoregressive models). It's the classic speed-versus-accuracy trade-off, and everyone picked a side.

How Oasis thinks comes down to three ideas. First, compression and prediction. A vision transformer looks at the current frame and squeezes it into a compact digital description, like a quick mental snapshot. A second model, a Diffusion Transformer, takes that snapshot, checks your latest keyboard command, and predicts the very next frame of video. The loop repeats. Decart trains this loop with a technique called Diffusion Forcing.
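The shape of that loop is easy to sketch in Python. To be clear, this is a toy under heavy assumptions: `encode`, `predict_next_latent`, and `decode` are hypothetical stand-ins made up for this example (simple arithmetic, no neural networks), and a "frame" is just a short list of numbers. Only the control flow mirrors the description above.

```python
from typing import List, Sequence

LATENT_SIZE = 4  # assumed size of the compact "snapshot"

def encode(frame: Sequence[float]) -> List[float]:
    """Stand-in for the vision transformer: average pixels into buckets.
    Assumes the frame length is a multiple of LATENT_SIZE."""
    step = len(frame) // LATENT_SIZE
    return [sum(frame[i * step:(i + 1) * step]) / step for i in range(LATENT_SIZE)]

def predict_next_latent(latent: Sequence[float], action: str) -> List[float]:
    """Stand-in for the Diffusion Transformer: nudge the snapshot by the action."""
    shift = {"forward": 0.1, "back": -0.1}.get(action, 0.0)
    return [value + shift for value in latent]

def decode(latent: Sequence[float], num_pixels: int) -> List[float]:
    """Expand the snapshot back into a frame of num_pixels values."""
    repeat = num_pixels // len(latent)
    return [value for value in latent for _ in range(repeat)]

def rollout(first_frame: Sequence[float], actions: Sequence[str]) -> List[List[float]]:
    """Autoregressive loop: every new frame is predicted from the previous one."""
    frames = [list(first_frame)]
    for action in actions:
        latent = encode(frames[-1])
        next_latent = predict_next_latent(latent, action)
        frames.append(decode(next_latent, len(first_frame)))
    return frames

frames = rollout([0.0] * 8, ["forward", "forward", "back"])
print(len(frames))  # 4 (the starting frame plus one per action)
```

Notice that each frame is built only from the model's own previous output. That is exactly why small mistakes can snowball in this kind of system.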

Second, recovery. To keep errors from accumulating, Decart trained Oasis to fix its own mistakes. They intentionally corrupted past frames during training, forcing the model to learn how to recover and stay stable over long sessions.
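Here is a minimal Python sketch of the idea, assuming Gaussian noise as the corruption. The helper names (`corrupt_context`, `make_training_example`) and the noise level are my own illustration; the actual corruption recipe is not public in this level of detail.

```python
import random
from typing import List, Tuple

Frame = List[float]

def corrupt_context(context: List[Frame], noise_std: float,
                    rng: random.Random) -> List[Frame]:
    """Add Gaussian noise to every pixel of the past frames (assumed corruption)."""
    return [[px + rng.gauss(0.0, noise_std) for px in frame] for frame in context]

def make_training_example(clip: List[Frame], noise_std: float = 0.1,
                          seed: int = 0) -> Tuple[List[Frame], Frame]:
    """Split a clip into (noisy past frames, clean target frame).

    The model sees a damaged history but is still graded against the clean
    next frame, so it has to learn to recover rather than copy errors forward.
    """
    rng = random.Random(seed)
    *context, target = clip
    return corrupt_context(context, noise_std, rng), target

clip = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
noisy_context, target = make_training_example(clip)
print(target)  # [2.0, 2.0] (the target stays clean)
```

The design choice worth noticing: only the inputs are damaged. The answer the model is trained toward is always the clean frame.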

Third, early-frame stability. At generation time, a complementary technique called Dynamic Noising is used. A small amount of noise is briefly introduced in the first few frames. This acts as a pause, preventing the AI from locking onto a faulty detail too early. The noise is then gradually removed in later passes, so the model can settle on reliable details from previous frames instead of glitched ones.
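A noise schedule like this can be sketched in a few lines of Python. The linear ramp, the starting level of 0.3, and the function name `dynamic_noise_schedule` are all assumptions for illustration; the shape of Decart's actual schedule is not described here.

```python
from typing import List

def dynamic_noise_schedule(num_passes: int, start_noise: float = 0.3,
                           ramp_passes: int = 4) -> List[float]:
    """Noise level per pass: starts at start_noise, decays linearly to zero
    over ramp_passes, then stays at zero (assumed shape)."""
    if num_passes <= 0 or ramp_passes <= 0:
        raise ValueError("num_passes and ramp_passes must be positive")
    schedule = []
    for i in range(num_passes):
        remaining = max(0.0, 1.0 - i / ramp_passes)
        schedule.append(start_noise * remaining)
    return schedule

schedule = dynamic_noise_schedule(num_passes=8)
print([round(level, 3) for level in schedule])
# [0.3, 0.225, 0.15, 0.075, 0.0, 0.0, 0.0, 0.0]
```

Early passes are deliberately blurry, later passes are clean. The model commits to details only once the noise is gone.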

However, getting this to run in real-time is the main challenge.

Oasis’s real-time performance is due to its heavy GPU optimization. They wrote low-level assembly code for GPUs and developed single, large GPU programs that fuse multiple steps to minimize overhead.

They rearranged how tasks run on the chip to prevent data from moving around, which is a significant source of delay. This optimization reduced frame generation to 47 milliseconds on an NVIDIA H100 GPU, resulting in approximately 20 frames per second. For comparison, standard models would take hundreds of milliseconds. Decart essentially achieved zero perceived latency.
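The link between per-frame latency and frame rate is simple division, sketched here in Python (the function names are mine):

```python
def fps_from_latency(ms_per_frame: float) -> float:
    """Frames per second if each frame takes ms_per_frame milliseconds."""
    return 1000.0 / ms_per_frame

def frame_budget_ms(target_fps: float) -> float:
    """Maximum milliseconds per frame to sustain target_fps."""
    return 1000.0 / target_fps

print(round(fps_from_latency(47), 1))  # 21.3
print(round(frame_budget_ms(24), 1))   # 41.7
```

So 47 ms per frame works out to roughly 21 frames per second, and hitting a cinematic 24 FPS would need each frame done in under about 41.7 ms.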

Partnership with Etched

Decart knew early on: general-purpose GPUs wouldn't scale. They're powerful, but expensive, energy-hungry, and built for flexibility, not focus.

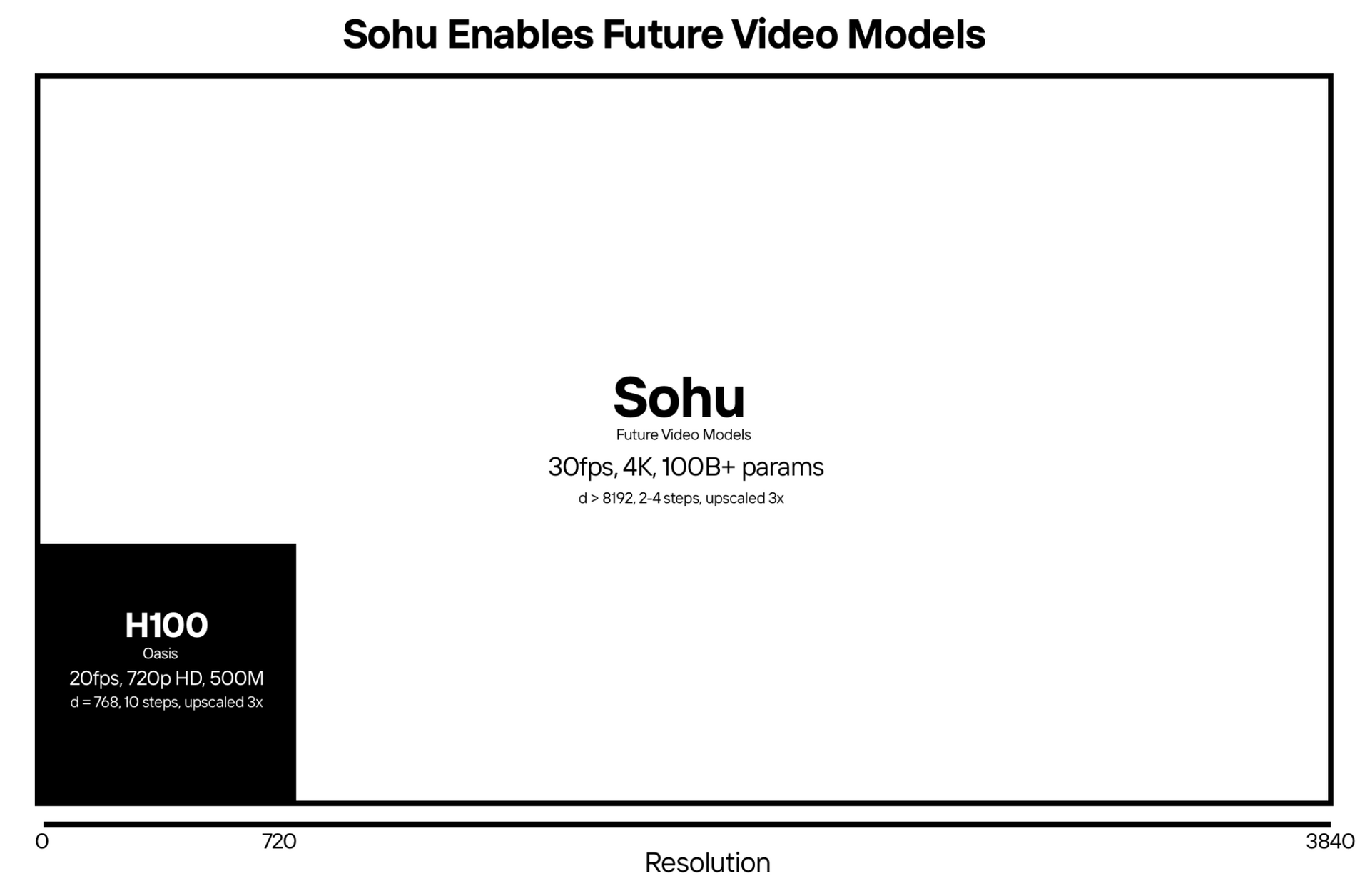

So, they partnered with Etched, a hardware startup that designed Sohu, a custom ASIC made for one job: running transformers. By stripping everything else away, Sohu reaches utilization levels that general-purpose chips can’t touch.

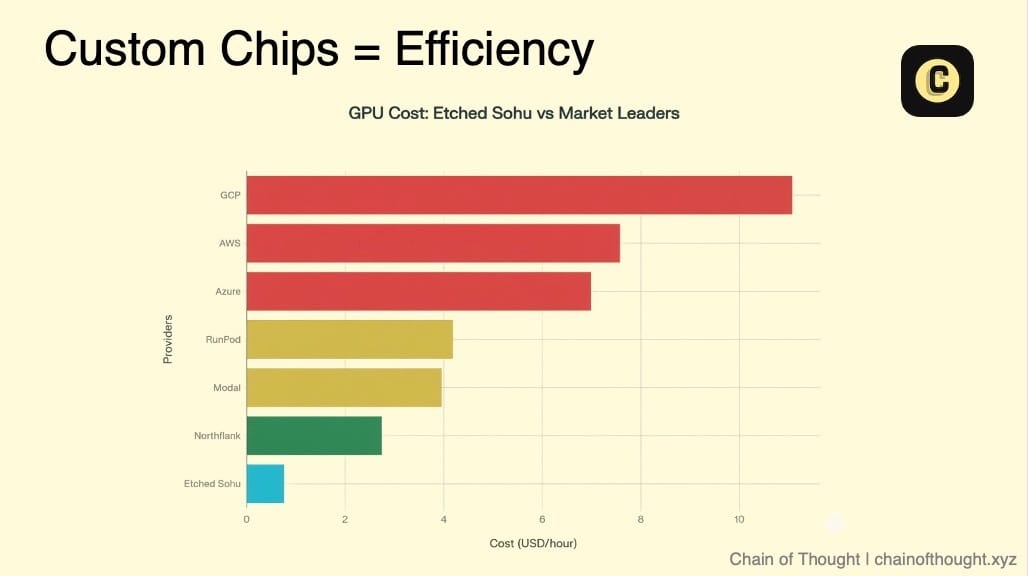

On an H100, Oasis runs at 360p. On Sohu, it could hit 4K. One Sohu chip, at the same cost and power as an H100, is expected to serve 10× more users with a larger model.

The Economics of Streaming a World

Serving one user at 24 FPS means rendering 1,440 unique frames per minute. Here's what that looks like in dollars:

| Cost component | NVIDIA H100 (per minute) | Etched Sohu ASIC (per minute) |
|---|---|---|
| Compute time | $0.075 | $0.0075 |
| Bandwidth | ~$0.0011 | ~$0.0011 |
| Storage | Negligible | Negligible |
| Total (per user-minute) | ~$0.0761 | ~$0.0086 |

Assumptions: One concurrent user consumes a full H100 minute at 720p real-time. Sohu ASIC delivers a 10× perf-per-dollar improvement versus H100 for this workload. 720p stream at 3 Mbps; bandwidth $0.05 per GB.
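For anyone who wants to check the math, here is a small Python sketch that rebuilds the totals from the stated assumptions. The constant and function names are mine, and the two-hours-a-day, 30-day month is the usage pattern used elsewhere in this piece.

```python
# Assumptions from the table: $0.075 per H100-minute, a 10x perf-per-dollar
# gain on Sohu, a 3 Mbps stream, and bandwidth at $0.05 per GB.
H100_COMPUTE_PER_MIN = 0.075
SOHU_PERF_PER_DOLLAR_GAIN = 10
STREAM_MBPS = 3
BANDWIDTH_USD_PER_GB = 0.05

def bandwidth_cost_per_min(mbps: float = STREAM_MBPS,
                           usd_per_gb: float = BANDWIDTH_USD_PER_GB) -> float:
    """Cost of one minute of streaming: megabits -> megabytes -> gigabytes."""
    gigabytes_per_min = mbps * 60 / 8 / 1000
    return gigabytes_per_min * usd_per_gb

def total_per_user_min(compute_per_min: float) -> float:
    """Compute plus bandwidth for one user-minute (storage treated as zero)."""
    return compute_per_min + bandwidth_cost_per_min()

def monthly_cost(per_min: float, hours_per_day: float = 2, days: int = 30) -> float:
    """Monthly cost for one user at the given daily usage."""
    return per_min * 60 * hours_per_day * days

h100 = total_per_user_min(H100_COMPUTE_PER_MIN)
sohu = total_per_user_min(H100_COMPUTE_PER_MIN / SOHU_PERF_PER_DOLLAR_GAIN)

print(f"H100: ${h100:.4f}/min, ${monthly_cost(h100):.0f}/month")
print(f"Sohu: ${sohu:.4f}/min, ${monthly_cost(sohu):.0f}/month")
```

Running it reproduces the per-minute totals in the table, and you can change `hours_per_day` to see how quickly heavy users get expensive.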

At $0.075 per minute, a single user playing for two hours a day costs nearly $300/month in compute. There’s no consumer business in that.

Sohu brings the number down to around $30/month. Still high, but now within range of premium consumer or enterprise pricing. The underlying bet: that transformers remain the dominant model architecture. If that holds, Decart’s cost of goods drops by 90%. Leitersdorf believes video generation can fall from hundreds of dollars per hour to just $0.25.

10x cost reduction with Etched Sohu ASIC versus current H100 options

It’s a very Google-esque move. Google built TPUs to accelerate its own models. Decart is essentially building the “concrete” for their road, not just the vehicle.

But custom chips are only half the battle. You also have to ship the data.

Each Oasis stream is unique. Netflix can cache a show once and serve it to millions. Oasis can’t. Every user sees something no one else ever will. No caching. No reruns.

At 720p, that’s 1–3 Mbps per stream. At 4K, much more. So Decart is going edge-native. Partnering with Fal, they’re placing models in data centers worldwide to generate content closer to the user, cutting latency and costs.
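To get a feel for the scale, here is a rough Python sketch of what unique streams add up to. The one-million-concurrent-users figure is a hypothetical for illustration, not a Decart number, and the function names are mine.

```python
def aggregate_egress_gbps(concurrent_users: int, mbps_per_stream: float) -> float:
    """Total outbound bandwidth in Gbps when every user gets a unique stream."""
    return concurrent_users * mbps_per_stream / 1000

def monthly_gb_per_user(mbps_per_stream: float, hours_per_day: float = 2,
                        days: int = 30) -> float:
    """Data streamed to one user per month, in gigabytes."""
    seconds = hours_per_day * 3600 * days
    return mbps_per_stream * seconds / 8 / 1000

for mbps in (1, 3):
    print(mbps, "Mbps:",
          aggregate_egress_gbps(1_000_000, mbps), "Gbps total,",
          monthly_gb_per_user(mbps), "GB per user per month")
```

At the top of the 720p range, a million simultaneous players would mean about 3,000 Gbps of outbound video, every bit of it freshly generated.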

Launched on October 31, 2024, Oasis 1.0 was a proof-of-concept. Decart open-sourced a 500M parameter model (small enough to run locally) and hosted a larger version in the cloud. The demo ran at 360p, at around 20 frames per second, in a crude, pixelated sandbox with no objective.

But that didn’t matter. The point was to show that a world could be dreamed into existence, frame by frame, in real time. It was not the first airplane; it was the first flight.

While I was researching Decart, they released Oasis 2.0.

It is their most advanced AI model, transforming game worlds and styles in real time at 1080p and 30 fps. Some users dispute those numbers, though, saying the experience is not smooth and looks closer to 360p.

You can try it now as a Minecraft mod or in a web-based demo. It’s not perfect, but for early users, that’s part of the charm. They’re watching something raw take shape. Going forward, Oasis is likely to evolve into more complex interactive worlds.

Mirage

If Oasis is an “imagination from scratch,” Mirage is “imagination as a filter on reality.”

Debuted in mid-2025, Mirage LSD (Live-Stream Diffusion) is the first system to achieve infinite, zero-latency video generation on a live feed.

You feed it any live video stream (from your webcam, a game, a YouTube video – anything) plus a prompt or desired style, and Mirage will instantly re-render that video in the style of your choosing, continuously, at 24+ frames per second.

That’s me in my study room, reimagined in Candyland by Decart’s Mirage

Mirage uses some of the same building blocks as Oasis (like the same type of diffusion model that generates frames one after another). But as Mirage works with live video, it has to keep the real motion and layout of the scene intact while layering in whatever new visuals you ask for. A much tougher task than just free-generating.

To solve it, Decart added two key upgrades. First, a memory system that helps Mirage stay consistent over long stretches of video. So, if you turn a car into a dragon at the one-minute mark, that dragon will stay the same across the whole clip instead of warping or fading out.

Second, it can take new orders mid-stream without a stutter. You can be watching a live feed, type “make it nighttime,” and the scene changes within a frame or two. This works because the system was trained to adapt mid-stream without breaking flow. They also pruned the model and applied a technique called shortcut distillation to reduce computation per frame without losing quality.

The net effect was a 16× improvement in responsiveness over prior video diffusion systems, with a true zero-latency response (<40 ms; so short that your brain simply cannot perceive it).

Leitersdorf laughs about users grabbing broomsticks, whacking each other, and applying a Star Wars filter to create lightsaber fights. Kids are running around their homes turning the entire place into a blocky Minecraft world, punching furniture to see if it breaks.

Sidekick

Sidekick is a "talk-to-video" platform that enables real-time video conversations with AI characters. You can choose from a gallery of AI personas or even create your own. When you call them, you see their face (animated in real-time) and hear their voice. It’s essentially deepfake Zoom + ChatGPT, purpose-built for fun and companionship.

Sidekick allows private user-generated characters, but apparently some users saw their “private” creations appearing in public feeds or demos. Decart’s terms state that users own their content, but also that Decart can use the content to improve the platform.

At the moment, Sidekick is more of a cool demo than a polished product. But it shows their ability to generate talking heads in real-time. One can see Sidekick eventually integrating with AR glasses as well.

Delulu

Delulu is a mobile app, launched in late 2025 on iOS and Android. It allows users to upload a photo and apply an endless variety of generative styles (comic book hero, 18th-century royal garb, etc.) to create fun images that can then be shared as stickers or posts.

“Delulu” is slang (short for delusional) for someone entertaining unrealistic fantasies. Decart leaned into that ironically, implying this app lets you live out your craziest visual fantasies.

It can also generate a short video clip from an image using an optimized model Lucy-5B, an accelerated Wan 2.2–5B model that Decart rebuilt for real-time image-to-video. It can produce 5-second 480p clips in 3.2 seconds (which is 6× faster than the original, at no quality loss).

Delulu targets the same dopamine-hit use case as other casual creative apps (like Prisma did for filters, or TikTok’s Dream effects). The big advantage is variety and infinity. Since the AI can generate endless variations, users can keep hitting refresh for new surprises.

Why Delulu and Sidekick Matter

Building world models needs two scarce resources: compute and data. Decart already has the first (via its GPU/ASIC optimization). Delulu and Sidekick are how they solve the second.

Delulu is a photo editing app that allows users to transform their selfies using various styles (Google Play).

Sidekick offers personalized AI companions for entertainment, productivity, or emotional support through video and chat interaction with an AI (sidekick.decart.ai).

Rather than depend entirely on licensed datasets or scraping, Decart uses these apps to collect real user-behavior data. Every style applied, every selfie edited, every Sidekick conversation becomes labeled data. That helps train and improve Decart’s models in ways that are both scalable and proprietary.

So Delulu and Sidekick are not just products. They’re data engines.

The World Model Arena: A New Great Game

Companies building real-time generative worlds will be judged on a simple scorecard:

Latency — how fast the world responds, ideally in real time.

Output quality & stability — how good the visuals are and how coherent the environment remains over time.

Control & alignment — preventing unsafe, biased, or off-brand outputs.

UX for creators — tools, ease, creative control.

Scalability — can you serve millions without costs exploding?

Google’s Genie 3

DeepMind’s Genie 3 (announced August 2025) is one of the cleanest exemplars. Given a text prompt, Genie 3 builds interactive 3D environments in real time (24 FPS at ~720p), preserving environmental consistency over several minutes.

Users can trigger promptable events via text while inside the simulation (e.g. “a wild animal appears”) and Genie 3 will insert that into the world. This is very similar to what one would imagine Oasis’s future iterations doing (and Oasis, in a limited Minecraft form, already allowed text prompts to influence content like spawning items).

The demos are stunning. Genie 3’s strength is the breadth of scenarios it can handle, everything from a Victorian London street to a “Martian landscape cartoon.” That breadth comes from training on a massive corpus of 6.8 million public internet videos (30,000 hours).

Tencent’s GameGen-O

On the other side of the globe, China’s tech giants are moving fast, and Tencent is leading the charge with a dual strategy.

Internally, they built GameGen-O which can generate various game elements (characters, environments, actions) and respond to user inputs (like keyboard/mouse) to control what happens. It was trained on a dataset compiled from over 100 modern open-world games.

Externally, Tencent released HunyuanWorld 1.0, an open-source 3D world generator capable of creating explorable environments from text or a single image, with assets directly exportable to Unity and Unreal.

The strategy is clear: commoditize the base layer of world generation. If Tencent can make high-quality tools freely available, it undercuts the closed platforms of Western competitors and pulls independent developers into its ecosystem.

Netflix and EA

Legacy entertainment companies are circling the space, though not with conviction. Netflix briefly hired a VP of Generative AI for Games, only to see him leave four months later. The experiment fizzled, but it showed Netflix knows interactive, AI-driven content could eventually reshape storytelling.

EA has been more vocal. In 2023, its CTO demoed a “text-to-game” prototype called Imagination Engine. It was more concept than reality, but it highlighted EA’s vision of AI-driven user-generated content. Since then, EA has posted AI job listings and begun rolling out tooling for creators.

Where does this leave Decart?

Well, I think of Decart as one of the most integrated players, as they have the full stack (model + inference engine + hardware + consumer products). They’re small but move fast. Their main challenges will be keeping the tech edge and converting that edge into business by signing deals and growing the user base.

Funding and Revenue

Decart has raised $153 million in under a year, culminating in a $100 million Series B round in August 2025, which pegged its valuation at $3.1 billion. All from tier-one backers like Sequoia, Benchmark, Zeev Ventures, and Aleph VC.

Decart's valuation growth trajectory compared to typical unicorn timelines

What makes Decart unusual is how little cash it has burned. The company says it has used less than $10 million of its investor money so far, covering much of its massive GPU bill through enterprise revenue instead.

That enterprise arm has two main pillars:

GPU Optimization Licensing — selling or licensing their software/system that makes heavy GPU / video workloads more efficient and less expensive. This generates “tens of millions” in revenue already. Which is pretty sweet. It’s rare for a startup to have a powerful revenue stream that can fund its R&D on cutting-edge products.

“Mirage” / Live Simulation Services — providing clients in sectors like gaming, real estate, film, and education with models that enable real-time visual interaction, simulation, and content generation.

This approach gives Decart a level of capital efficiency almost unheard of in foundational AI. Most peers burn hundreds of millions before reaching revenue. Decart is paying for its experiments as it goes.

The next step is obvious: the Mirage API. Instead of just custom deals, studios and developers will be able to embed Decart’s real-time simulation directly into their apps. That means recurring revenue at scale, with far lower customer acquisition costs than chasing consumers one by one.

Partnerships

Decart's partnerships are not random alliances. Each was chosen to solve a key constraint:

Speed: Decart teamed up with fal.ai as its exclusive launch partner for frontier video models. Their model Lucy-5B (image-to-video) is part of that collaboration, which can generate a 5s clip in <3.2s. This helps get high-performance generation closer to users for lower latency.

Scale: For scalable cloud infrastructure, Decart relies on Crusoe. This partnership proved vital during the viral launch of Oasis, allowing Decart to scale its capacity fivefold in a matter of hours to support over two million users in four days.

Talent: Decart is funding and collaborating with Technion, the elite Israeli university Leitersdorf attended, and has set up a joint AI research center there. This ensures access to top academic research and early exposure to students, and builds a long-term talent pipeline.

A Rocky Road Ahead

Tech Limitations

Despite Decart's impressive technology, its path to becoming a "kilocorn" still has several technical and market risks.

The first is the Persistence Gap. Learned simulators still break on memory and object permanence. Objects flicker or morph when you turn away. This is why the Oasis demo can feel wonky at times.

The second is the Drift Problem. Frame-by-frame models accumulate mistakes. Decart trains by corrupting frames intentionally so the model learns to recover, a clever patch but not a cure. Drift still creeps in, and over time, it erodes coherence.

The moment they solve these, they are no longer in the entertainment business. A system that can run a persistent simulation is the holy grail for training embodied AI. Such a model provides an infinitely scalable and safe environment to train robots, autonomous vehicles etc.

This reveals a far more lucrative long-term market for Decart as the combined TAM for industrial, virtual, and surgical simulation is massive.

World Model vs. Asset-Generator

There is also a huge competitive threat from a more practical direction. Decart is building a new cathedral of interactive reality, but competitors like Tencent and Unity (Unity Muse) are simply selling better bricks. For developers, asset generators slot neatly into existing engines and pipelines. They’re cheaper, faster, and less disruptive.

Selling a better car is easier than convincing people to buy a spaceship. Decart’s risk is that its grand vision becomes a technical marvel with no market if the industry decides “good enough” assets beat “all new” worlds.

There is an out: if Decart’s consumer apps can capture the same cultural loop that Character.ai did on TikTok, they could have a real moat. Attention rewrites the rules.

Ethical and Second-Order Risks

This is the part that unsettles me most. Mirage is a dream machine, but it’s also a deepfake machine. Leitersdorf has admitted it’s a “very, very tough problem,” and I don’t think anyone in the industry has a clear answer yet.

There are significant data privacy concerns. The company's privacy policy grants them the right to use everything users upload to train their models. That means personal photos from Delulu and video conversations from Sidekick are being fed directly into their AI. They are one data leak away from a consumer backlash or a visit from regulators.

And that’s before we even get to the Pandora’s Box of user-generated worlds. People will jailbreak, exploit, and push the system in ways the founders never imagined. Some will attempt to exploit it for harmful content; others will use it as a vector for malware. You can’t really control it once it’s in the wild.

The darkest possibilities haunt me: not doomscrolling but doom-inhabiting. Hyper-addictive worlds that pull people in. Bad actors finding ways to weaponize the platform. Decart is building an open-ended power, and I’m not sure anyone can fully steer what comes next.

Beyond the Looking Glass

Decart is building a base layer for real-time, model-driven experiences. The goal is simple to state: lower the Simulation Tax until responsive worlds fit consumer price points, then let others build on that.

An ambition that big has to start somewhere, so gaming is the first proving ground.

Soon, we might see a plugin for a future Minecraft or Roblox that lets players generate infinite new terrain. Traditional game studios will have to adapt or die as small teams start producing endless content. This is similar to how mobile gaming and free-to-play upended console giants. AI-generated content could upend content pipelines.

This new reality also means content creation cycles could shrink from years to days. You wouldn’t wait for a sequel because the game would get fresh AI-generated material daily. This could change how game studios operate (how do you sell DLC when the AI makes free content? Maybe by selling specialized models or premium “themes”?)

In the medium term, this reaches beyond games. We might see a virtual environment where remote teams meet in an instantly generated “office” for productivity gains, like a brainstorming session in a serene forest. The obsolete distinctions between a movie, a video call, and a social feed might evaporate, leaving a single stream of inhabitable content.

This is the metaverse truly playing out. Meta was just a few years too early. This is the point where the internet stops being something you scroll through and becomes a place you are.

Despite the fears, I don’t foresee AI fully replacing human creators. Their job changes instead. AI becomes an infinite sandbox, and taste becomes a scarce skill. A designer might spin up a thousand quests to find fifty worth shipping. A writer could test ten film endings before choosing one. Out of this, new genres emerge, like a living movie that shifts tone based on your reactions in real time.

The economics shift too. Creators won’t just sell static videos, but rather, they will sell world experiences. A theme park could generate a one-of-a-kind ride for each guest. In 2030, a YouTuber might release a fully interactive world for fans to explore. At the same time, a counter-trend will probably rise. Authentic human-made content will become a luxury good, like vinyl records in the age of streaming.

This is the opening of what I’d call the Battle for the Leisure Loop. As AI automates away hours of drudgery, a trillion-dollar market for attention emerges. Decart’s long-term competitors are TikTok and Netflix. The winner is whoever fills our free time with the most personal meaning. Why watch a fixed movie from a catalog when you can step into an experience that is never the same twice?

All of this sounds futuristic until you remember that every big change does. Early films were just jerky black-and-white clips of a train arriving at a station. Games generated by AI may feel soulless now, but so did cinema in 1895.

Decart today looks a little like early Apple or Tesla: designing chips, building products, licensing core tech, selling to enterprises. A “do it all” strategy that usually collapses. But if it works, it’s how you get to a trillion-dollar kilocorn.

Right now, the company is riding on hype, and hype cuts both ways. It could collapse under its own weight, of course. An ambitious attempt to boil the ocean that arrives too early. But if they succeed, “Powered by Decart” could be the new “Intel Inside,” the invisible substrate for interactive reality.

Are we witnessing the birth of the AWS of generative experiences? We could very well be.

Cheers,

Teng Yan & Ravi

If you found this interesting, please share it with a friend. That’s all we ask for.
