Hey fam 👋

Welcome to The Agent Angle #14. Every week, I pull together the five coolest AI agent things I’ve stumbled on. The stuff that really matters, before everyone else is talking about it.

This week’s rabbit hole was especially good. Agents picked up new tricks: a single personality nudge beat out heavyweight fine-tuning, an open-source project showed agents how to stop drowning in tool overload, and a lone developer on GitHub made reverse engineering much easier.

Let’s get into it.

#1 Fine-Tuning? Try Vibe-Tuning

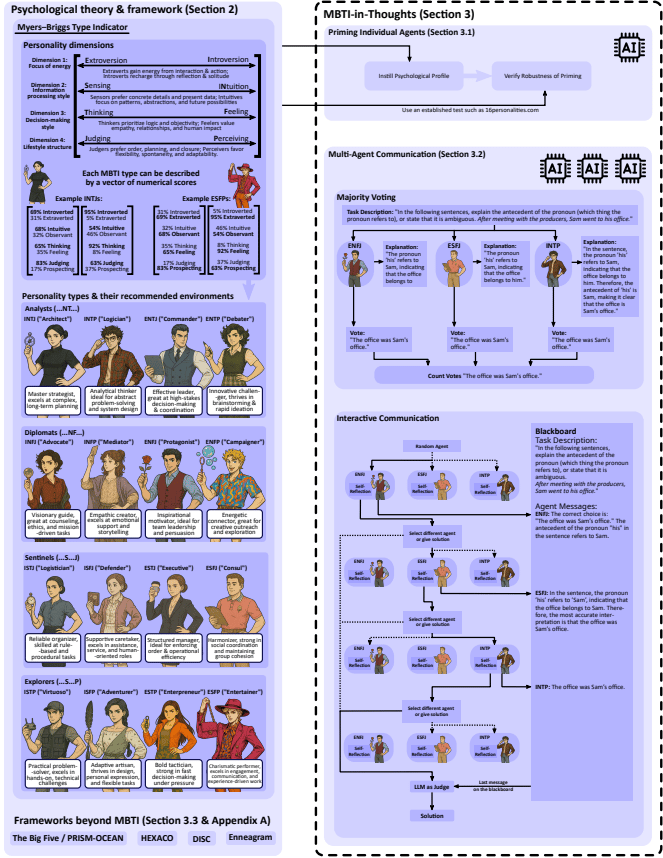

ETH Zürich researchers showed that a single personality cue can do what weeks of fine-tuning usually promise.

They call it the MBTI-in-Thoughts framework: instead of retraining or collecting new data, you just tell the model to “think like” an MBTI personality inside its chain of thought.

Source - Psychologically Enhanced AI Agents

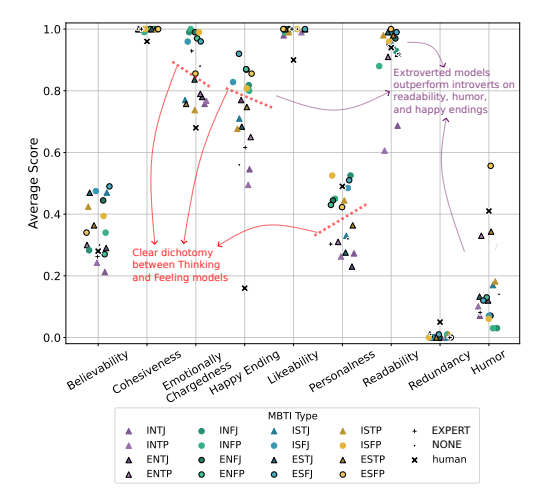

You know that 16-type personality quiz you have probably taken? They gave those same personalities to agents, and the effects were fascinating:

Thinking-types went selfish ~90% of the time in the Prisoner’s Dilemma.

Feeling-types cooperated ~50%.

Introverts told the truth 54% vs. 33% for extroverts, and wrote longer, more reflective reasoning.

That’s massive behavioral drift from nothing more than a vibe injection. The trick is to insert the personality cue before reasoning starts. In multi-agent “blackboard” systems (shared memory), mixing personalities boosted performance even further.
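To make the “cue before reasoning” idea concrete, here is a minimal Python sketch, assuming a generic `llm(prompt) -> str` callable. The persona wording, `PERSONA_CUES`, `build_prompt`, and the `Blackboard` class are hypothetical illustrations, not the paper’s prompts or code. `fake_llm` is a stub so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable

# Illustrative persona cues (hypothetical wording, not the paper's prompts).
PERSONA_CUES = {
    "INTJ": "You are an INTJ: analytical, strategic, and reserved.",
    "ENFP": "You are an ENFP: warm, expressive, and people-oriented.",
}


def build_prompt(persona: str, task: str, shared_notes: str = "") -> str:
    """Put the personality cue first, so it is in place before any reasoning starts."""
    parts = [
        PERSONA_CUES[persona],
        "Let this personality shape how you think about the task.",
        f"Task: {task}",
    ]
    if shared_notes:
        parts.append(f"Notes from other agents:\n{shared_notes}")
    parts.append("Reasoning:")  # the model's chain of thought continues from here
    return "\n\n".join(parts)


@dataclass
class Blackboard:
    """Shared memory that every agent can read and append to."""
    entries: list[tuple[str, str]] = field(default_factory=list)

    def post(self, author: str, text: str) -> None:
        self.entries.append((author, text))

    def render(self) -> str:
        return "\n".join(f"[{author}] {text}" for author, text in self.entries)


def run_round(personas: list[str], task: str, board: Blackboard,
              llm: Callable[[str], str]) -> None:
    """One round: each persona agent reads the board, reasons, and posts its answer."""
    for persona in personas:
        prompt = build_prompt(persona, task, board.render())
        board.post(persona, llm(prompt))


# Stand-in for a real model call, so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    return "cooperate" if prompt.startswith("You are an ENFP") else "defect"


board = Blackboard()
run_round(["INTJ", "ENFP"], "Prisoner's Dilemma: cooperate or defect?", board, fake_llm)
print(board.render())
# [INTJ] defect
# [ENFP] cooperate
```

The design choice that matters is ordering: the cue comes first and `Reasoning:` comes last, so the model’s chain of thought begins after the personality is already set.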

Source - Psychologically Enhanced AI Agents

For anyone building agents, this could be a cheat code.

Traits like honesty, caution, or creativity usually mean expensive fine-tuning or reinforcement loops. Here, a single, smartly written prompt nudged those same levers in clear, measurable ways.

I know MBTI is often regarded as pop psychology, and serious researchers lean on the Big Five instead. But the deeper point stands: psychological traits can serve as a control surface for agents, letting you shape behavior without touching the model weights.

Sometimes the fastest path to smarter AI isn’t more GPUs. It’s better vibes.

#2 Robots Just Plugged Into the Web Agent Stack

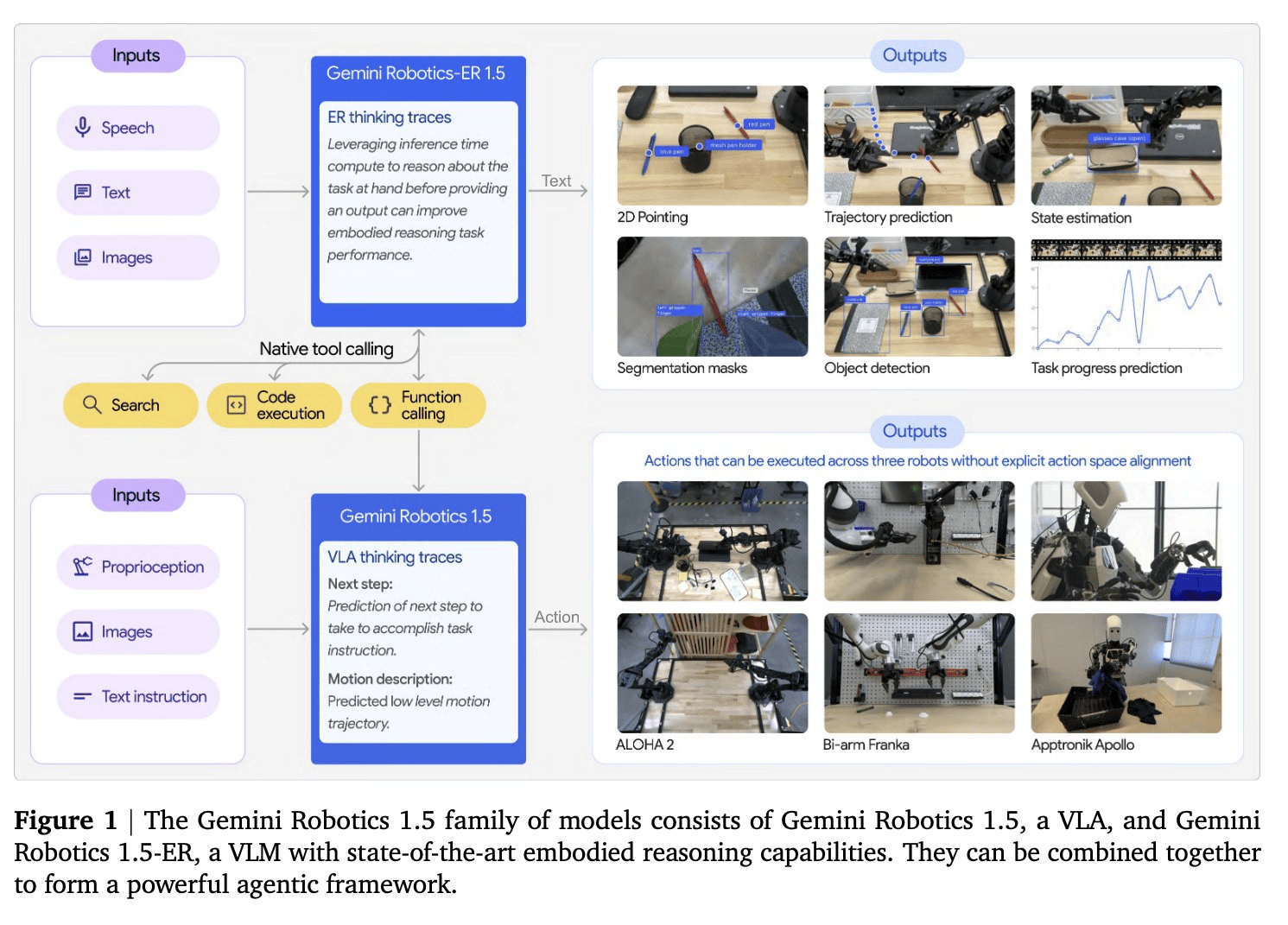

On September 25, DeepMind dropped Gemini Robotics 1.5. For the first time, the robot wasn’t being puppeted by a custom control stack. It was run by an agent.

The new Gemini Robotics-ER 1.5 (ER for “embodied reasoning”) thinks in natural language, fetches facts from the open web, and hands off full task plans to a VLA (vision-language-action) model.

In the demo, the robot was asked to sort objects into compost, recycling, and trash based on local rules. It searched the web for the right guidelines, looked at the items in front of it, planned the steps, and then physically put everything away.
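The division of labor in that demo (a reasoning model that looks things up and plans, and a VLA that carries out each step) can be sketched as a simple orchestrator loop. This is a toy under stated assumptions: `lookup_rules`, `plan_sorting`, and `vla_execute` are hypothetical stand-ins, not DeepMind’s API, and in the real system the planning is done by the model rather than by hard-coded logic.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    instruction: str  # short natural-language command for the VLA executor


def plan_sorting(items: list[str], rules: dict[str, str]) -> list[Step]:
    """Planner role: combine looked-up rules with the observed items into steps.

    Items with no matching rule default to the trash bin (an assumption of this toy).
    """
    return [Step(f"put the {item} in the {rules.get(item, 'trash')} bin") for item in items]


def run_task(
    items: list[str],
    lookup_rules: Callable[[], dict[str, str]],
    vla_execute: Callable[[Step], bool],
) -> list[str]:
    """Orchestrator loop: look up rules, plan, then hand each step to the executor."""
    rules = lookup_rules()            # stands in for the web-search tool call
    completed = []
    for step in plan_sorting(items, rules):
        if not vla_execute(step):     # a real system would replan here; this toy just stops
            break
        completed.append(step.instruction)
    return completed


# Offline stubs so the sketch runs without a robot or a search API.
local_rules = {"banana peel": "compost", "soda can": "recycling"}
done = run_task(["banana peel", "soda can", "chip bag"], lambda: local_rules, lambda step: True)
print(done)
```

Keeping the planner and the executor behind separate interfaces is what lets one reasoning layer drive different bodies: only `vla_execute` would change per robot.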

I know, it doesn’t look spectacular next to recent robot demos doing karate and MMA. But I’m actually much more excited about this. The real story here is the agentic shift.

In tests, ER 1.5 increased long-horizon task success by about 2-3x compared to previous Gemini models. A single reasoning core now transfers from tabletop rigs to humanoids like Apollo with minimal retraining.

Skills learned on one robot can migrate to another. Fleets learn together instead of starting from zero.

Kanishka Rao, a DeepMind engineer, put it plainly:

“We can control very different robots with a single model, and skills learned on one robot transfer to another.”

This breaks one of robotics’ oldest bottlenecks. Every robot form factor used to need its own brain. Now a shared agent layer runs across bodies, which could cut development cycles from years to months.

It may not go viral, but it’s the moment web agents stepped off the screen and into the physical world.

#3 LIMI: Data Hoarding Might Be Over

One paper just torched a core belief in agent training: more data = better agents.

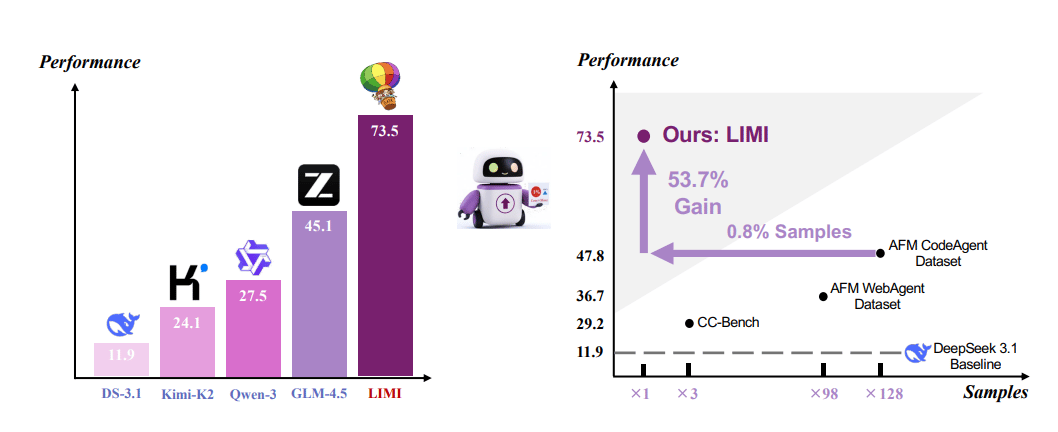

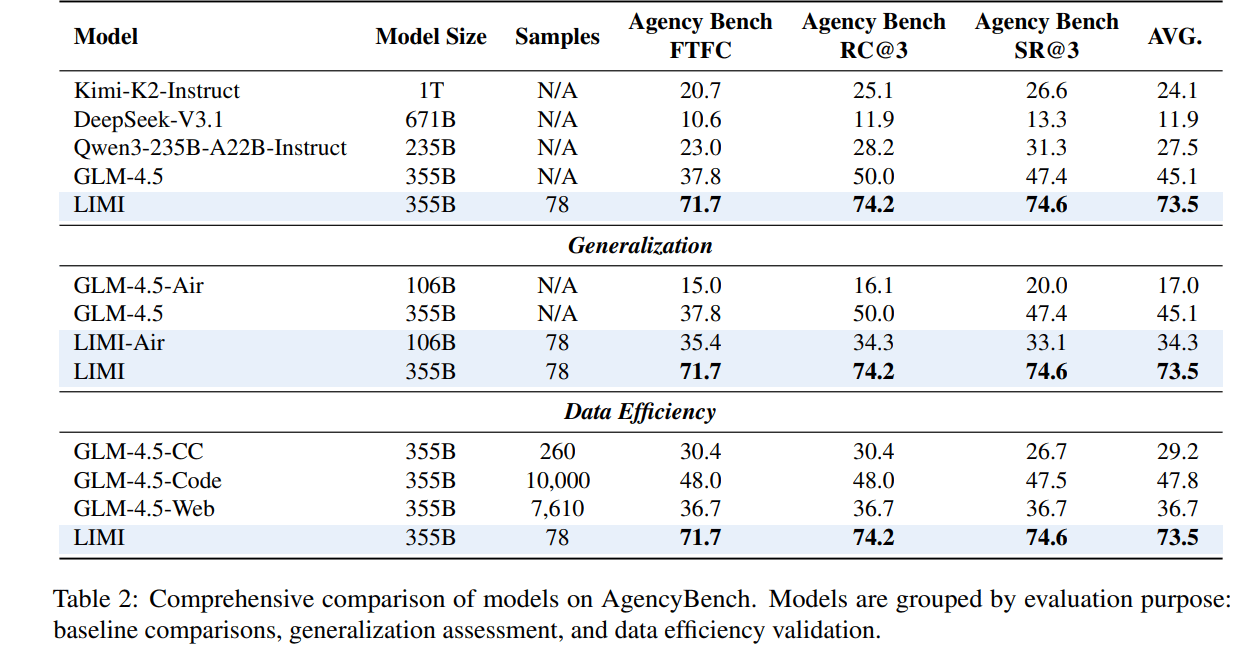

Last week, researchers dropped “Less Is More for Agency”, and the results were surprising. With just 78 carefully chosen demos, LIMI outperformed models trained on 10,000+ examples, boosting long-horizon task success by 53.7%.

Source - LIMI: Less is More for Agency

The secret is not a new model or training trick but smarter curation. The 78 queries came directly from real developer pain points and were expanded through a GitHub pull-request pipeline, which kept them authentic while adding coverage.

Each query came with a full trajectory: step-by-step reasoning, tool calls, feedback, and recovery until success.

Source - LIMI: Less is More for Agency
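The paper’s exact data format isn’t reproduced here, so the sketch below is one hypothetical way to represent a trajectory like the ones described (query, reasoning, tool calls, feedback, recovery) plus a toy filter. The `Trajectory`/`Turn` schema and the `curate` rules are assumptions for illustration, not LIMI’s actual curation pipeline.

```python
from dataclasses import dataclass, field
from typing import Literal


@dataclass
class Turn:
    kind: Literal["reasoning", "tool_call", "feedback"]
    content: str


@dataclass
class Trajectory:
    query: str
    turns: list[Turn] = field(default_factory=list)
    succeeded: bool = False

    def tool_calls(self) -> int:
        return sum(turn.kind == "tool_call" for turn in self.turns)

    def recovered_from_error(self) -> bool:
        """True if error feedback is followed by at least one more tool call."""
        for i, turn in enumerate(self.turns):
            if turn.kind == "feedback" and "error" in turn.content.lower():
                if any(later.kind == "tool_call" for later in self.turns[i + 1:]):
                    return True
        return False


def curate(pool: list[Trajectory], min_tool_calls: int = 2) -> list[Trajectory]:
    """Illustrative filter: keep successful multi-step runs, recovery examples first."""
    kept = [t for t in pool if t.succeeded and t.tool_calls() >= min_tool_calls]
    return sorted(kept, key=lambda t: not t.recovered_from_error())


good = Trajectory("fix the failing test", [
    Turn("reasoning", "Read the traceback first."),
    Turn("tool_call", "pytest -x"),
    Turn("feedback", "Error: ImportError in utils.py"),
    Turn("tool_call", "edit utils.py imports"),
    Turn("feedback", "All tests pass."),
], succeeded=True)
trivial = Trajectory("list files", [Turn("tool_call", "ls")], succeeded=True)
failed = Trajectory("deploy", [Turn("tool_call", "deploy"), Turn("tool_call", "deploy")])

print([t.query for t in curate([trivial, failed, good])])  # ['fix the failing test']
```

The point of a filter like this is that a handful of complete, successful, recovery-rich examples can carry more signal than piles of trivial or failed runs.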

On AgencyBench, which tests first-turn accuracy and multi-round success, LIMI crushed older code-agent datasets and even massive synthetic corpora across tool use, coding, data science, and research, while using just 1–30% of the data.

The implication is that you no longer need to scrape and label hundreds of thousands of demos to make your agent perform well. A small, sharp team could get close to state-of-the-art results with a weekend of focused data curation.

It also makes iteration faster. Want to teach your agent a new skill? Add a handful of well-designed trajectories instead of spending months on data collection. Micro-datasets become the new competitive moat.

It looks like indie builders just got a new way to punch way above their weight.

#4 Agents Can Breathe Again

We may be witnessing the end of the tool overload era for AI agents.

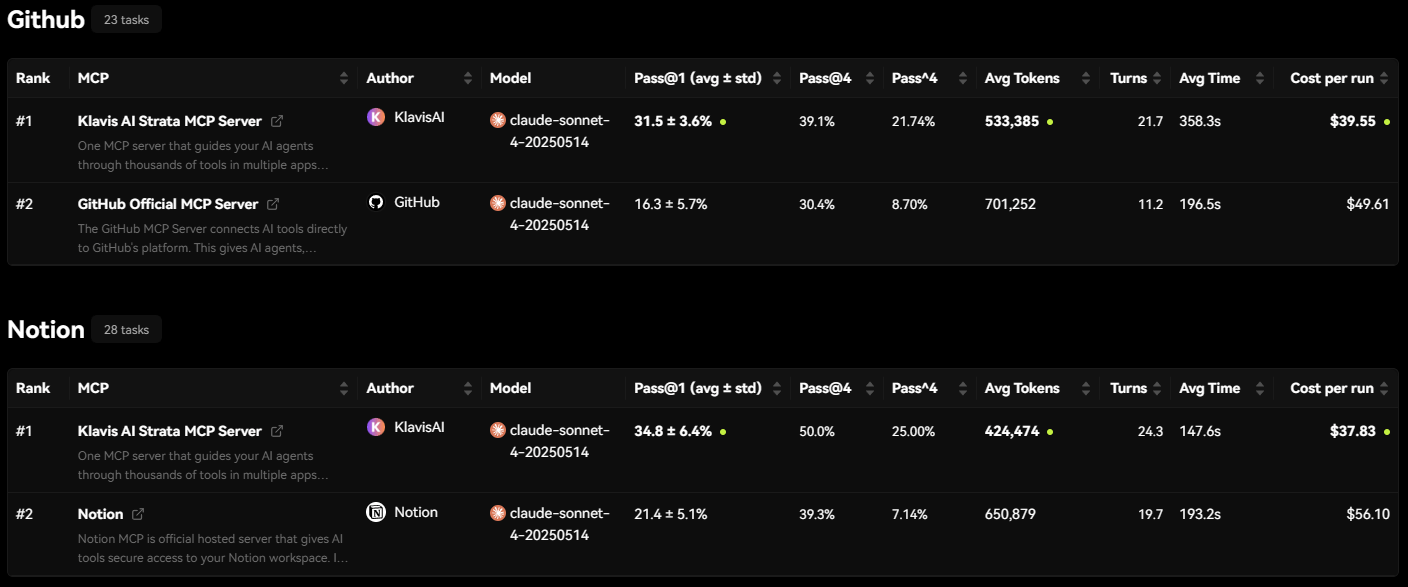

On September 22, Klavis AI dropped Strata, an open-source MCP server designed not to pile on more tools, but to organize them. Instead of bombarding an LLM with hundreds of APIs all at once and hoping it picks the right one, Strata introduces a hierarchical, demand-driven tool discovery layer.

Give a modern LLM an MCP client tied to, say, 100+ internal tools, and it faces choice paralysis. Every prompt swells with tokens, contexts break, schemas misalign, and caching goes haywire. Teams either hide tools or artificially cap the list at a few dozen just to keep the system stable.

Strata takes a different route. Agents explore tools step by step: first identifying the app, then drilling into the category, and finally selecting the exact action. That small design change makes a big difference.
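Here is a toy model of the app → category → action idea, comparing how many tool schemas a flat setup exposes at once with the widest menu an agent sees during stepwise discovery. `CATALOG` and every function name here are hypothetical, not Strata’s actual tools or API.

```python
# Hypothetical catalog: app -> category -> action -> description.
CATALOG: dict[str, dict[str, dict[str, str]]] = {
    "github": {
        "issues": {"create_issue": "Open a new issue", "close_issue": "Close an issue"},
        "pulls": {"merge_pr": "Merge a pull request"},
    },
    "notion": {
        "pages": {"create_page": "Create a page", "search_pages": "Search pages"},
    },
}


def list_apps() -> list[str]:
    """Level 1: the agent first sees only app names."""
    return sorted(CATALOG)


def list_categories(app: str) -> list[str]:
    """Level 2: categories inside the chosen app."""
    return sorted(CATALOG[app])


def list_actions(app: str, category: str) -> dict[str, str]:
    """Level 3: concrete actions (with descriptions) inside the chosen category."""
    return dict(CATALOG[app][category])


def flat_tool_count() -> int:
    """How many tool schemas a flat setup would put in the prompt at once."""
    return sum(len(actions) for cats in CATALOG.values() for actions in cats.values())


def widest_step() -> int:
    """Largest single menu the agent sees during stepwise discovery."""
    widest = len(CATALOG)
    for cats in CATALOG.values():
        widest = max(widest, len(cats))
        for actions in cats.values():
            widest = max(widest, len(actions))
    return widest


# Walk the hierarchy the way an agent would, one choice per step.
app = list_apps()[0]                       # "github"
category = list_categories(app)[1]         # "pulls"
print(list_actions(app, category))         # {'merge_pr': 'Merge a pull request'}
print(flat_tool_count(), widest_step())    # 5 2
```

At this toy scale the saving is small (5 schemas flat vs. at most 2 per step). With a hypothetical 100 apps of 20 actions each, a flat setup would expose 2,000 schemas at once, while each stepwise menu is bounded by the size of a single level.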

Klavis reports that, with no model changes, Strata sharply raises accuracy on key tasks, nearly doubling it on GitHub:

GitHub workflows: pass@1 ~16.3% → ~31.5%

Notion workflows: ~21.4% → ~34.8%

Human-reviewed multi-app workflows: ~83% accuracy

Source - MCP Leaderboard

The average company is juggling 100 SaaS apps. Strata’s stepwise discovery keeps the surface area tight.

Okay, it does add a few extra calls, and some argue fuzzy search can cover parts of this. But reproducible, auditable discovery matters when an agent has to chain several internal systems, or when security teams need a tight blast radius.

This could be the difference between agents drowning in APIs and actually swimming.

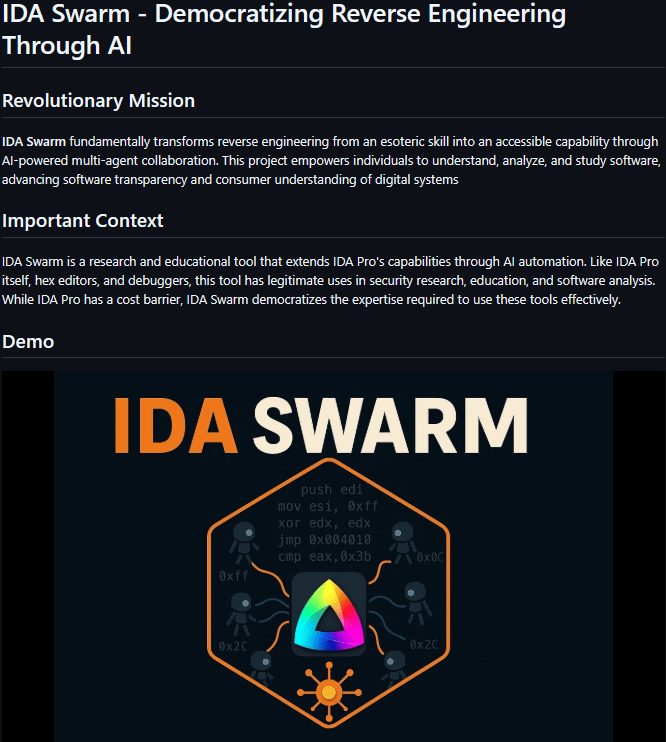

#5 Reverse Engineering Just Got a Swarm

This one flew under the radar, but it could completely change how we tear apart binaries.

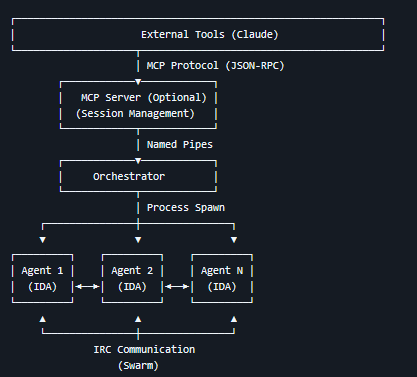

Last week, a tiny GitHub repo called IDA Swarm dropped, and it’s doing something few others have tried: turning reverse engineering into a multi-agent job.

Instead of one analyst grinding through a binary, IDA Swarm spins up a fleet of IDA Pro agents. Each agent tackles a different slice of the binary in parallel with the others, cross-checks its peers, and proposes patches.

The agents debate conflicts, merge findings, and even call external tools through MCP. There’s support for patching across multiple architectures baked in.

Source - GitHub: IDA Swarm
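The repo’s internals aren’t described here, so this is a generic sketch of the pattern the section describes: split the binary, analyze slices in parallel, have a peer re-check each slice, then merge. `toy_analyze` is a hypothetical stand-in for real IDA Pro analysis, and all function names are illustrative, not IDA Swarm’s code. Verdicts the two agents disagree on land in `conflicts`, which is where a real system would let agents debate.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# (agent_id, function_name) -> verdict; a stand-in for real per-function analysis.
Analyzer = Callable[[int, str], str]


def partition(functions: list[str], n_agents: int) -> list[list[str]]:
    """Round-robin split so each agent owns a different slice of the binary."""
    return [functions[i::n_agents] for i in range(n_agents)]


def analyze_slice(agent_id: int, funcs: list[str], analyze: Analyzer) -> dict[str, str]:
    return {f: analyze(agent_id, f) for f in funcs}


def run_swarm(functions: list[str], n_agents: int,
              analyze: Analyzer) -> tuple[dict[str, str], list[str]]:
    slices = partition(functions, n_agents)
    owners = list(range(n_agents))
    reviewers = [(i + 1) % n_agents for i in owners]  # agent j reviews slice j - 1
    with ThreadPoolExecutor(max_workers=n_agents) as pool:
        # Pass 1: every agent analyzes its own slice, all in parallel.
        first: dict[str, str] = {}
        for result in pool.map(analyze_slice, owners, slices, [analyze] * n_agents):
            first.update(result)
        # Pass 2: a different agent re-analyzes each slice as a peer cross-check.
        second: dict[str, str] = {}
        for result in pool.map(analyze_slice, reviewers, slices, [analyze] * n_agents):
            second.update(result)
    # Merge: keep verdicts both agents agree on; flag the rest for "debate".
    agreed = {f: v for f, v in first.items() if second[f] == v}
    conflicts = sorted(f for f in first if second[f] != first[f])
    return agreed, conflicts


def toy_analyze(agent_id: int, func: str) -> str:
    """Hypothetical verdicts; agent 0 is deliberately stricter about sub_20."""
    if func == "sub_10":
        return "suspicious"
    if func == "sub_20" and agent_id == 0:
        return "suspicious"
    return "benign"


agreed, conflicts = run_swarm(["sub_00", "sub_10", "sub_20", "sub_30"], 3, toy_analyze)
print(agreed)     # {'sub_00': 'benign', 'sub_30': 'benign', 'sub_10': 'suspicious'}
print(conflicts)  # ['sub_20']
```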

That matters because reverse engineering has always been gated by complexity. It usually requires years of training, expensive software, and workflows dense enough to scare most people off. IDA Swarm tries to bring expert-level workflows within reach.

The stakes are high. Nearly a quarter of all new vulnerabilities are exploited before a patch is even available. Traditional patch development can drag on for weeks while attackers weaponize zero-days. If multi-agent systems can cut that window to days or even hours, defenders finally get a shot at moving first.

It’s still rough code today. But if the idea matures, tearing apart binaries could go from an elite specialty to something an advanced hobbyist could do on a weekend.

Before we sign off, some other drops this week worth knowing:

Okta rolled out XAA, a new identity fabric to discover, govern, and lock down AI agent credentials.

Hugging Face launched GAIA 2 + ARE, a brutal new sandbox benchmark that stress-tests agents.

Algolia unveiled Agent Studio, enabling organizations to build AI agents with search intelligence as their foundation.

Google launched the Data Commons MCP server to give agents instant access to public datasets for hallucination-resistant apps.

OpenAI and Databricks signed a $100M multi-year deal to help large businesses build AI agents on their own enterprise data.

For anyone building, the message this week is clear: you don’t always need bigger models or more GPUs. Smart psychology, sharp data, and cleaner infra mean indie builders can ship what only big tech could last year.

Catch you next week ✌️

→ Don't keep us a secret: Share this email with friends

→ Got a story worth spotlighting? Whether it’s a startup, product, or research finding, send it in through this form. We may feature it next week.

Cheers,

Teng Yan & Ayan