Hey fam 👋

News: I finally ChatGPT-pilled my significant other this week while we were vacationing in South Korea. She’d been loyal to Perplexity (annual sub and all), but after a few hours with ChatGPT she was shook. It helped her brainstorm branding ideas, then casually built an entire brand deck from a conversation.

Her disbelief was audible. Pretty sure many people are feeling the same way.

Anyway… welcome to The Agent Angle #18: The Trial.

Something in the air shifted this week. You could feel it in the feeds: everyone was suddenly asking, all over again, what “intelligence” really means.

Karpathy threw shade at agents. Huryn clapped back with 185 flawless runs. Claude started teaching itself new skills. And AURA showed up quietly to judge them all.

Let’s get into it.

#1 Slop Hits Back

The week started with a gut punch from one of AI’s own, Andrej Karpathy.

An OpenAI co-founder and one of the most respected voices in machine learning, Karpathy called today’s agents “slop.” His verdict: they just don’t work yet.

Then he doubled down, saying true AGI is still a decade away. His argument: today’s systems lack enough intelligence, aren’t multimodal enough, can’t learn continually, and can’t use computers reliably.

For most of the internet, Karpathy’s words are gospel. He helped build the damn thing. If he says we’re not close to autonomy, who’s arguing?

Paweł Huryn, apparently.

He spun up 185 autonomous GPT-5 agents, each given nothing but an objective. No scaffolds or predefined reasoning steps. Just figure it out.

And they did. All 185 out of 185 succeeded.

The agents created Kanban boards, searched the web, and executed full workflows with perfect reliability. And GPT-5 did it using fewer tokens than GPT-4o, thanks to better reasoning compression.

Huryn’s conclusion was simple: “True autonomy isn’t ten years out. It’s about twelve months away for most processes.” He did concede that complex processes still need orchestration.

Here’s my takeaway: if I bet on “agentic AI” becoming fully autonomous across the board within a year, I’m pretty sure I’d end up poor.

But if I instead treat this as a ladder we’re climbing fast, with some workflows flipping to autonomy in 12-24 months while human-level generality across the board takes 5-10+ years, I’m probably on the right wavelength.

#2 The Ten-Cent Publishing Revolution

Ten cents. Fifteen minutes. One swarm of agents. That’s now all it takes to turn a research paper into a polished project website.

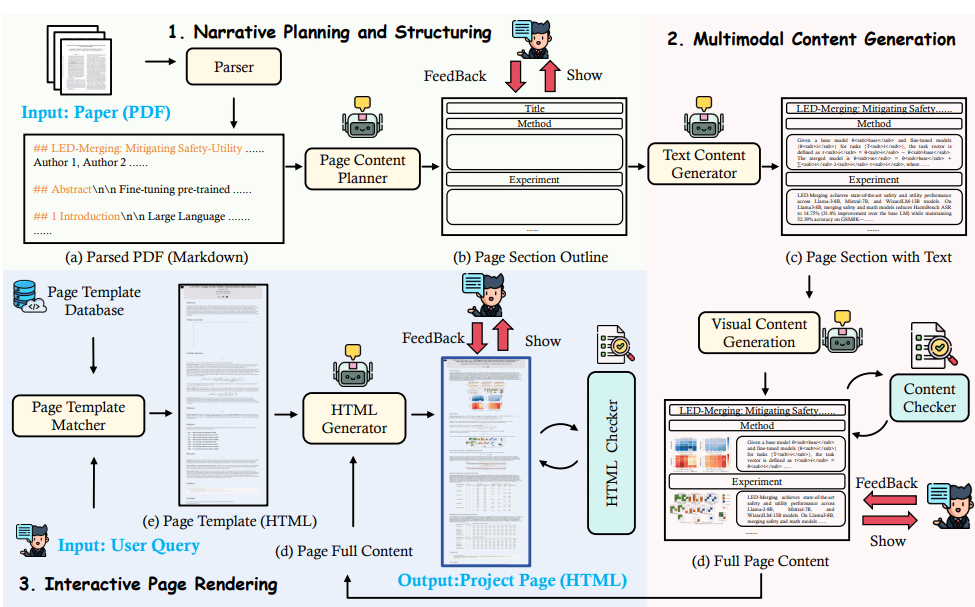

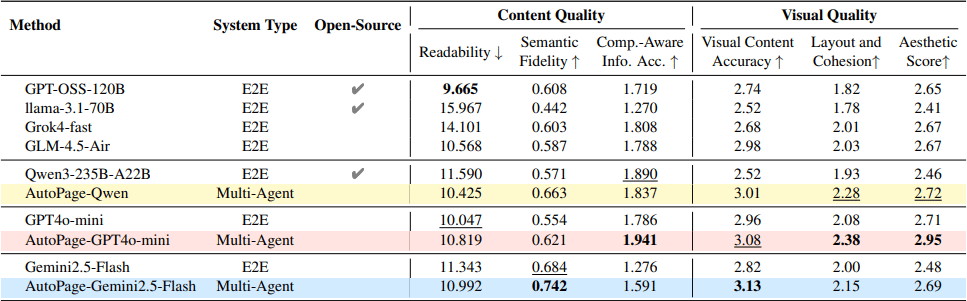

A team from Shanghai Jiao Tong University built AutoPage, a multi-agent system that turns static papers into interactive project sites with summaries, figures, and visuals.

It works like a newsroom:

A Planner breaks the paper into sections.

A Writer translates dense text into readable copy.

A Designer builds the site and aligns the visuals.

A Checker reviews everything against the source.

The result is a professional site that costs less than a tweet.
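To make the newsroom analogy concrete, here’s a toy Python sketch of the four roles wired into one pipeline. Big assumptions here: this is not AutoPage’s code, each “agent” is a plain function standing in for an LLM call, the paper is just a dict of section names to text, and the Checker only verifies that each summary traces back to its source section.

```python
import html

# Toy sketch of the Planner -> Writer -> Designer -> Checker flow.
# Every "agent" is a plain function standing in for an LLM call (hypothetical).

def planner(paper: dict[str, str]) -> list[str]:
    """Pick the sections that get a block on the page, keeping paper order."""
    return [name for name, text in paper.items() if text.strip()]

def writer(text: str) -> str:
    """Stand-in for an LLM rewrite: keep only the first sentence."""
    return text.strip().split(". ")[0].rstrip(".") + "."

def designer(blocks: list[tuple[str, str]]) -> str:
    """Render (title, summary) pairs as minimal HTML."""
    parts = [
        f"<section><h2>{html.escape(title)}</h2><p>{html.escape(body)}</p></section>"
        for title, body in blocks
    ]
    return "<main>" + "".join(parts) + "</main>"

def checker(blocks: list[tuple[str, str]], paper: dict[str, str]) -> list[str]:
    """Return titles whose summary can't be traced back to the source section."""
    return [title for title, body in blocks if body.rstrip(".") not in paper[title]]

def build_page(paper: dict[str, str]) -> str:
    sections = planner(paper)
    blocks = [(name, writer(paper[name])) for name in sections]
    page = designer(blocks)
    problems = checker(blocks, paper)
    if problems:
        raise ValueError(f"summaries not grounded in source: {problems}")
    return page

paper = {
    "Abstract": "We turn static papers into project pages. The system uses four agents.",
    "Method": "A planner, writer, designer and checker cooperate. Each has one job.",
    "Appendix": "   ",  # empty section: the planner skips it
}
print(build_page(paper))
```

With this naive Writer the Checker never fires, but swap in a real LLM that paraphrases (or hallucinates) and that last gate is what keeps the page honest.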

Across 1,500 papers, AutoPage outperformed GPT-4o, Gemini 2.5, and Qwen3 in accuracy, readability, and layout quality while staying under ten cents per page.

The issue with science is that brilliant work dies in PDFs that no one reads, cites, or even understands.

I am super bullish on this. If we accept that discovery is only half the job, then the other half, dissemination and comprehension, is now automatable for pennies. That means smaller labs and individual researchers can amplify their work with near-zero friction.

Turning PDFs into structured, machine-readable pages makes it easier for models like GPT-5 or Semantic Scholar’s S2 Agent to read and synthesize findings instead of hallucinating them.

Of course, automation can’t fix peer review or bad methodology. What AutoPage really optimizes is access, not accuracy. But at ten cents a paper, it’s hard to argue that the dissemination bottleneck needs to exist any longer.

#3 Claude’s First Skill Tree

Claude just unlocked a skill tree. Like, literally.

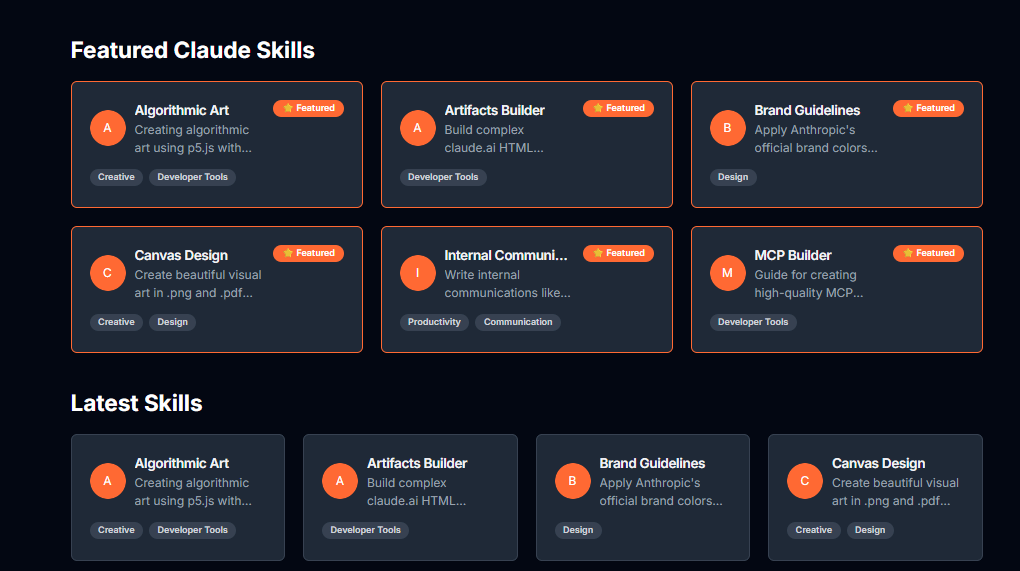

Anthropic just rolled out Agent Skills, a system that lets Claude pick up new abilities on demand, from writing Excel formulas to running scripts or following brand guidelines, without retraining or fine-tuning.

Each skill is a folder with instructions, code, and context. Claude checks what’s needed, loads it, then drops it when finished. It’s modular intelligence built from plain files.
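Here’s a minimal Python sketch of that load-on-demand idea. Assumptions, loudly: each skill is a SKILL.md-style text file with a short `---` header holding a `name` and a `description` (Anthropic’s docs describe a layout along these lines, but treat this parser as hypothetical), only those one-line summaries sit in context by default, and a naive keyword match stands in for Claude’s own judgment about which skill a task needs.

```python
import re
from dataclasses import dataclass

@dataclass
class Skill:
    name: str
    description: str  # short summary that is always visible
    body: str         # full instructions, loaded only when relevant

def parse_skill(text: str) -> Skill:
    """Parse a '---'-delimited 'key: value' header followed by the instruction body."""
    lines = text.strip().splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("skill file must start with a '---' header")
    end = next((i for i in range(1, len(lines)) if lines[i].strip() == "---"), None)
    if end is None:
        raise ValueError("unterminated header")
    meta = {}
    for line in lines[1:end]:
        key, _, value = line.partition(":")
        meta[key.strip()] = value.strip()
    return Skill(meta["name"], meta["description"], "\n".join(lines[end + 1:]).strip())

def _words(text: str) -> set[str]:
    # Words of 4+ letters, so filler like "the" or "in" never counts as a match.
    return set(re.findall(r"[a-z]{4,}", text.lower()))

def relevant(skills: list[Skill], task: str, min_overlap: int = 2) -> list[Skill]:
    """Naive stand-in for the model deciding which skills a task needs."""
    task_words = _words(task)
    return [s for s in skills if len(task_words & _words(s.description)) >= min_overlap]

def build_context(skills: list[Skill], task: str) -> str:
    """Always list every skill's summary; inline full bodies only for relevant ones."""
    index = "\n".join(f"- {s.name}: {s.description}" for s in skills)
    loaded = "\n\n".join(s.body for s in relevant(skills, task))
    return f"Available skills:\n{index}\n\nLoaded skills:\n{loaded}\n\nTask: {task}"

excel = parse_skill("""---
name: excel-formulas
description: Write and debug Excel spreadsheet formulas
---
Prefer XLOOKUP over VLOOKUP and explain each formula.""")

brand = parse_skill("""---
name: brand-guidelines
description: Apply company brand colors and fonts to documents
---
Use the approved palette and the house typeface.""")

print(build_context([excel, brand], "Fix the broken formulas in this Excel spreadsheet"))
```

The design choice worth noticing: the context cost of a skill you don’t need is one line, which is why you can install dozens without drowning the model.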

Within days, a Skills Marketplace appeared online, collecting official, community, and open-source modules. You can browse them like apps, install a document tool or a design pack, and Claude will use them automatically.

Source: Claude Skills Hub

That’s a fundamental rewiring of how models evolve. Instead of retraining giant brains for every new task, teams can compose and share skills like Lego blocks of intelligence. It also breaks learning wide open. Anyone can extend Claude’s reasoning and publish it for others, the way web devs once traded early browser plugins.

DeepMind called this years ago: modular, skill-based systems are the most scalable path to continual learning because they separate reasoning from memory. Now it’s happening in real time.

If you’re building with Claude (or thinking about it), ask yourself: which skills could I write once and deploy many times? The faster you answer that, the further ahead of the pack you’ll be.

#4 The Internet Just Got Hunted

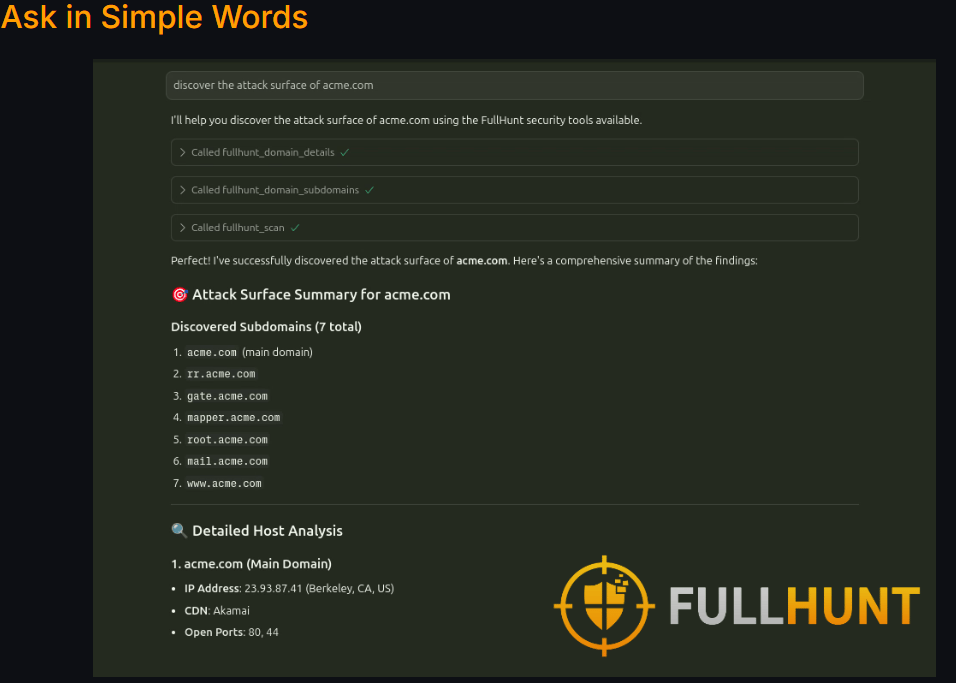

Every company has a shadow version of itself: old servers, forgotten logins, stray APIs. FullHunt just built an AI that can find them all.

Their new Agentic AI for Attack Surface Intelligence can map a company’s entire internet footprint from a single prompt.

It uses the Model Context Protocol to orchestrate more than 40 security tools simultaneously. Domain scanners, exploit databases, dark-web scrapers, and certificate monitors all act like parts of the same system.

Ask it to inspect a company website or flag exposed admin panels, and it runs discovery, fingerprinting, vulnerability checks, and breach correlation in seconds.

The result looks like something a senior analyst would take days to build.

Source: FullHunt.io
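The core pattern here is fan-out: fire many tools at the same target concurrently, then merge what comes back. Below is a toy Python sketch of that pattern using asyncio. The three “tools” are hypothetical stubs, and this is neither FullHunt’s code nor the Model Context Protocol SDK; it only shows why one slow or failing scanner shouldn’t sink the whole run.

```python
import asyncio

# Hypothetical stub tools. Real ones would call scanners, databases, or APIs.
async def subdomain_scan(domain: str) -> list[dict]:
    await asyncio.sleep(0.01)  # stand-in for network I/O
    return [{"asset": f"admin.{domain}", "issue": "subdomain discovered"}]

async def admin_panel_check(domain: str) -> list[dict]:
    await asyncio.sleep(0.01)
    return [{"asset": f"admin.{domain}", "issue": "login page exposed"}]

async def cert_monitor(domain: str) -> list[dict]:
    raise TimeoutError("certificate feed unavailable")  # simulate a failing tool

async def map_attack_surface(domain, tools):
    """Run every tool concurrently; group findings by asset and note failures."""
    results = await asyncio.gather(*(tool(domain) for tool in tools), return_exceptions=True)
    by_asset: dict[str, list[str]] = {}
    failed: list[str] = []
    for tool, result in zip(tools, results):
        if isinstance(result, BaseException):
            failed.append(tool.__name__)
            continue
        for finding in result:
            by_asset.setdefault(finding["asset"], []).append(finding["issue"])
    return by_asset, failed

findings, failed = asyncio.run(
    map_attack_surface("example.com", [subdomain_scan, admin_panel_check, cert_monitor])
)
print(findings)  # {'admin.example.com': ['subdomain discovered', 'login page exposed']}
print(failed)    # ['cert_monitor']
```

Returning failures separately, rather than silently dropping them, tells the human reviewing the output exactly what the scan didn’t cover.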

In reality, tools like this won’t replace analysts. But they do compress the grunt work so we humans can focus on judgment, not data wrangling. Even the product copy admits it: someone still needs to verify results, filter false positives, and choose what to fix.

The real race now is about situational awareness: knowing what’s exposed before another agent (benign or malicious) finds it. Teams still leaning on annual pen tests or scattered dashboards are already behind.

If I were running a security ops unit, I’d treat this as a must-adopt-soon moment.

#5 The Autonomy Auditor

We’ve nailed making agents do things. Now we have to teach them when to chill.

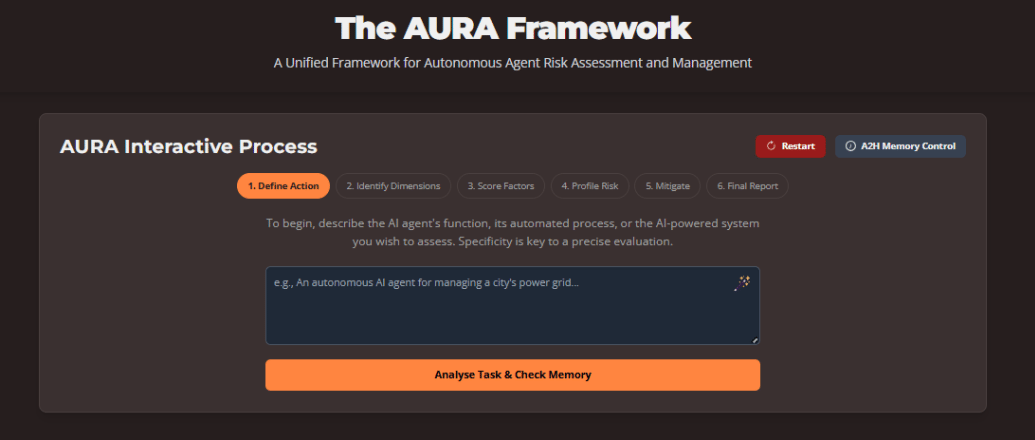

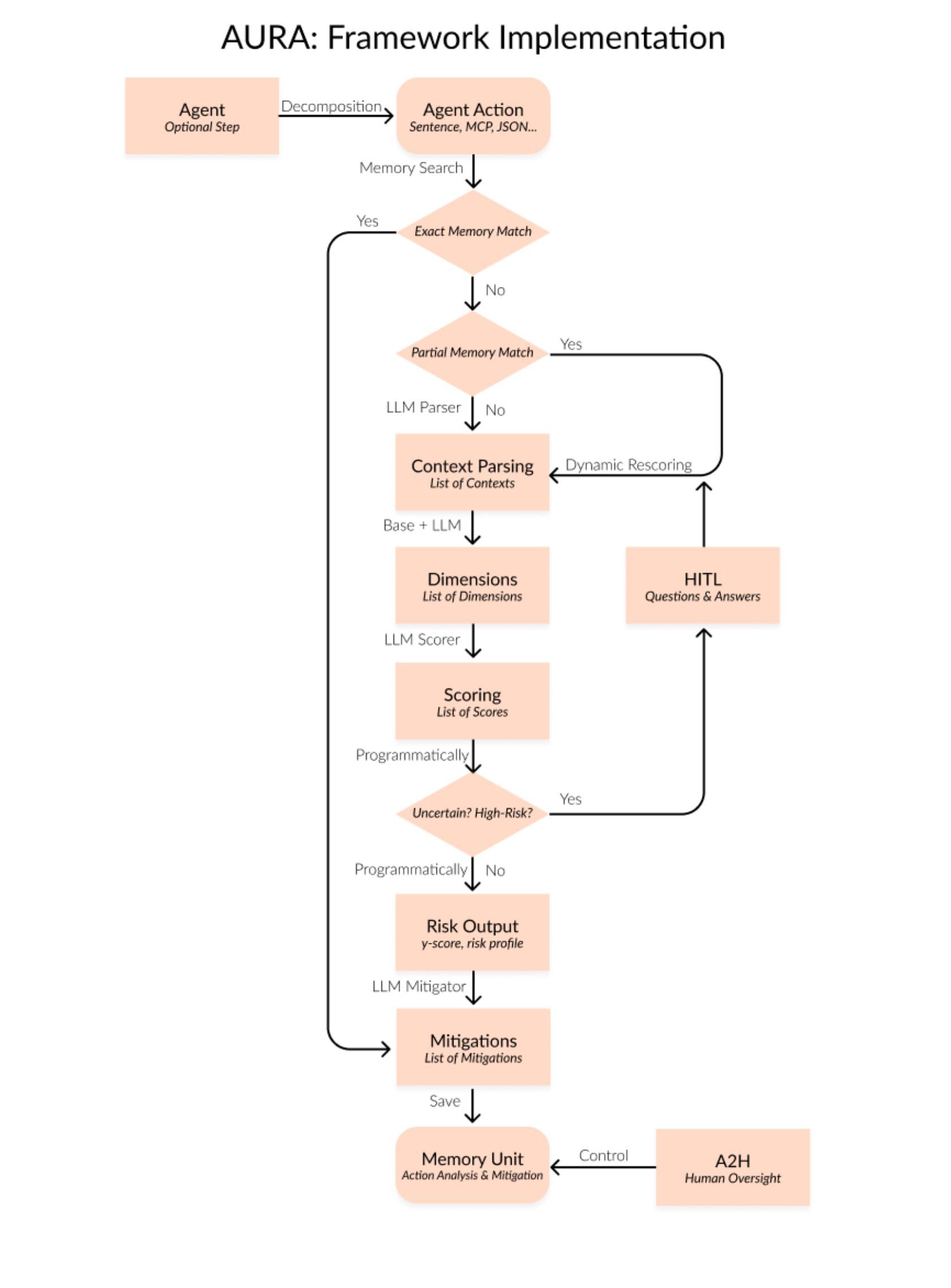

Two researchers from the University of Exeter just dropped a paper introducing AURA, a governance layer for agentic AI that acts like a built-in conscience. Every action an AI agent takes gets a gamma (γ) score that quantifies how risky it is.

Low gamma → go ahead.

High gamma → pause, explain yourself, or route to human oversight.

The framework breaks down an agent’s workflow step by step, scores each move, logs it, and over time builds a memory so the agent (or system) recognizes when a pattern is off.
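To make the gamma idea concrete, here’s a minimal Python sketch of an action-level gate. The three risk dimensions, their weights, the 0.3/0.7 thresholds, and the weighted-sum formula are all hypothetical placeholders I picked for illustration; the AURA paper defines its own scoring, which this does not reproduce.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical weights per risk dimension; AURA's real scoring differs.
WEIGHTS = {"privacy": 0.4, "security": 0.4, "bias": 0.2}

@dataclass
class ActionGate:
    low: float = 0.3    # below this: proceed
    high: float = 0.7   # at or above this: escalate to a human
    audit_log: list[dict] = field(default_factory=list)
    escalations: Counter = field(default_factory=Counter)

    def gamma(self, risks: dict[str, float]) -> float:
        """Weighted sum of per-dimension risk estimates in [0, 1]."""
        return sum(w * risks.get(dim, 0.0) for dim, w in WEIGHTS.items())

    def decide(self, action: str, risks: dict[str, float]) -> str:
        g = self.gamma(risks)
        if g < self.low:
            decision = "proceed"
        elif g < self.high:
            decision = "explain"   # agent must justify before acting
        else:
            decision = "escalate"  # route to human oversight
            self.escalations[action] += 1
        # Every decision is logged, so the trail can be audited later.
        self.audit_log.append({"action": action, "gamma": round(g, 2), "decision": decision})
        return decision

    def recurring(self, min_count: int = 2) -> list[str]:
        """Crude 'memory': actions that keep getting escalated."""
        return [a for a, n in self.escalations.items() if n >= min_count]

gate = ActionGate()
steps = [
    ("read public docs", {"security": 0.1}),
    ("email customer list", {"privacy": 0.5, "security": 0.5}),
    ("delete prod database", {"privacy": 1.0, "security": 1.0, "bias": 0.5}),
    ("delete prod database", {"privacy": 1.0, "security": 1.0, "bias": 0.5}),
]
for action, risks in steps:
    print(action, "->", gate.decide(action, risks))
print("recurring escalations:", gate.recurring())
```

The thresholds are the knob that matters: lowering `high` makes the gate more cautious, at the cost of more human interrupts.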

We’re living in the era of “agents do stuff”: booking travel, running servers, talking to customers, automating monitoring. But we still lack a robust way to say, “Yes, this agent’s move is safe.” Enterprises know it: fewer than 10% of organizations have any real governance framework for agentic AI, according to the authors.

AURA plugs directly into agent systems through a Python package or UI, connects with standard protocols like MCP and A2A, and spits out a traceable audit trail. It’s basically a risk manager for your AI agents.

Most current guardrails operate at the workflow level. AURA drops down to the action level, producing explainable risk scores tied to familiar regulatory buckets like privacy, bias, and security.

Is it perfect? No. Turning risk into a number is messy, and overcautious scores could slow agents down. But this is the first serious attempt to measure autonomy instead of just trusting it.

If agents are going to run real systems, they need something deeper than “trust the process.” They need traceable, quantitative accountability. AURA looks like the first draft of that future.

🖐 Just in case you missed it, my deep dive earlier this month was on OpenMind, the open-source stack wiring every robot on Earth into one collective intelligence.👇

Let me know if there’s any particular company you’d like me to cover next!

A few other moves on the board this week:

Google AI launched VISTA, a self-improving multi-agent system that boosts the quality of text-to-video generation.

Codi, backed by a16z, launched an AI agent that fully automates office management, including vendor management, restocking, and logistics.

Huawei launched HarmonyOS 6 with a new AI agent framework and cross-device sharing with Apple.

Hyro raised $45M to expand its AI agent platform for healthcare, building administrative, operational, and clinical agents to improve patient access.

RUC-DataLab released DeepAnalyze-8B, an open-source agentic LLM that completes the entire data science pipeline.

The trial isn’t over. It never is. Next week, the questions get harder. Catch you then ✌️

→ Don't keep us a secret: Share this email with your best friend.

→ Got a story worth spotlighting? Whether it’s a startup, product, or research finding, send it in through this form. We may feature it next week.

Cheers,

Teng Yan & Ayan