Hey fam 👋

Welcome to The Agent Angle #18: Parallel Motion.

You know that moment when the intern starts doing things you didn’t assign? Erm... that’s where we’re at with agents right now.

The pattern this week is independence. They’re moving around, setting up their own workspaces, and even grading each other’s code.

Let’s see how far they got this week.

#1 The World Just Got Programmable

A drone just followed a moving car through traffic, prompted by a single line of text. No joystick, no code. Just words.

That demo came from a new startup called Dimensional, which slipped out of stealth this week. One Python install, and you can talk to machines the same way you talk to software.

Developers are already testing it in homes, offices, construction sites, and underwater ROVs. The platform is open source and runs on cheap hardware like $1.6K robot dogs and $5K humanoids. Personally, I’d use it to tell my robot dog to go play fetch.

Then came the mic drop: Spatial Agents.

Most AI agents only act inside software. They open browsers, run commands, send messages. Spatial Agents extend that behavior to the physical world with simple calls like navigate_to, move, track, servo. The same reasoning loops that click a button on a webpage can now move a robot across a room.
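To make that concrete, here’s a rough Python sketch of how an agent’s tool calls could be routed to robot commands. Everything in it is assumed for illustration: FakeRobot, make_tools, and dispatch are hypothetical names, the navigate_to and track signatures are my guesses (not Dimensional’s actual API), and the “robot” only logs commands instead of moving.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class FakeRobot:
    """Hypothetical robot stand-in: logs commands instead of driving motors."""
    log: list[str] = field(default_factory=list)

    def navigate_to(self, x: float, y: float) -> str:
        self.log.append(f"navigate_to({x}, {y})")
        return "arrived"

    def track(self, target: str) -> str:
        self.log.append(f"track({target})")
        return f"tracking {target}"


def make_tools(robot: FakeRobot) -> dict[str, Callable[..., Any]]:
    # Tool names as a language model would emit them, mapped to robot methods.
    return {"navigate_to": robot.navigate_to, "track": robot.track}


def dispatch(tools: dict[str, Callable[..., Any]], call: dict[str, Any]) -> Any:
    """Run one tool call shaped like {"name": ..., "args": {...}}."""
    if call["name"] not in tools:
        raise ValueError(f"unknown tool: {call['name']}")
    return tools[call["name"]](**call["args"])


# A plan the model might produce for "follow the red car" (hypothetical).
robot = FakeRobot()
tools = make_tools(robot)
plan = [
    {"name": "navigate_to", "args": {"x": 2.0, "y": 1.5}},
    {"name": "track", "args": {"target": "red car"}},
]
results = [dispatch(tools, call) for call in plan]
print(results)  # ['arrived', 'tracking red car']
```

The point is the shape: the model emits the same kind of structured tool call it would use to click a button, and only the handler behind the name changes.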

Dimensional knows this might be a stopgap. Vision-language-action (VLA) models could soon predict movements directly, making today’s command architecture feel ancient. What they’ve built is a bridge: a working layer that lets builders script hardware through language right now, without waiting for the next model breakthrough.

Basically, a practical way to deploy physical intelligence out in the wild today. The line between thought and action is blurring. Still, it’s early. The proof will come from large-scale deployments.

#2 GAP: Agents Go Multicore

I thought this was an interesting paper. Not a breakthrough, but a solid sign of steady, meaningful progress in AI.

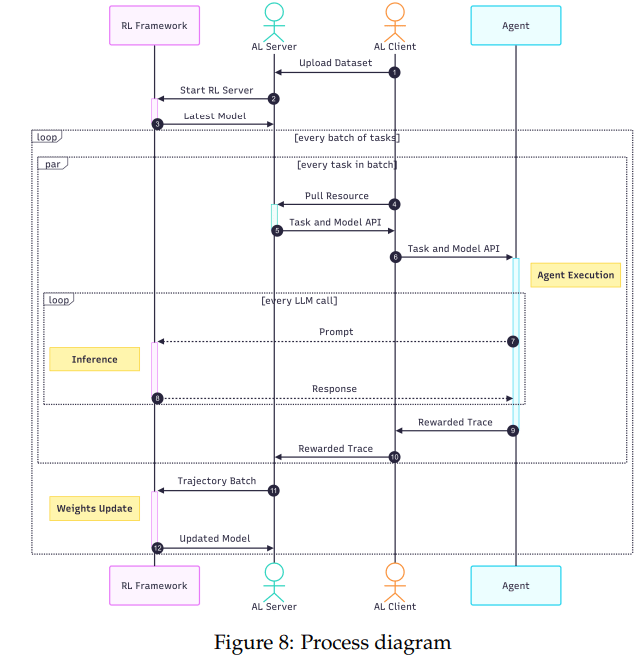

Researchers from NUS and Carnegie Mellon dropped a paper that breaks the rhythm of agents thinking in a single lane: reason, act, repeat. It’s called Graph-based Agent Planning (GAP), and it teaches agents how to think in parallel.

Source: GAP

Instead of following a single reasoning chain, GAP maps out a dependency graph of sub-tasks. It figures out which steps depend on each other and which can run in parallel. That simple shift means an agent can reason across multiple branches at once, then merge its results when they connect.
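Here’s a rough Python sketch of the scheduling idea: the standard library’s graphlib finds every sub-task whose dependencies are done, and a thread pool runs that whole batch at once. It’s a stand-in under stated assumptions, not the paper’s method. In GAP the model itself learns to produce the graph, while here the graph, the sub-task names, and run_subtask are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Hypothetical multi-hop question split into sub-tasks.
# Each key maps to the set of sub-tasks it depends on.
deps: dict[str, set[str]] = {
    "find_director": set(),
    "find_release_year": set(),
    "director_birthplace": {"find_director"},
    "answer": {"director_birthplace", "find_release_year"},
}


def run_subtask(name: str, inputs: dict[str, str]) -> str:
    # Stand-in for a tool call or LLM step; it just records what it consumed.
    return f"{name}({', '.join(sorted(inputs))})"


def execute(deps: dict[str, set[str]]) -> tuple[dict[str, str], list[list[str]]]:
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    results: dict[str, str] = {}
    waves: list[list[str]] = []  # each wave is one parallel "turn"
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = sorted(sorter.get_ready())  # sub-tasks with all deps done
            waves.append(ready)
            futures = {
                n: pool.submit(run_subtask, n, {d: results[d] for d in deps[n]})
                for n in ready
            }
            for n, fut in futures.items():
                results[n] = fut.result()
                sorter.done(n)  # unblocks anything waiting on n
    return results, waves


results, waves = execute(deps)
print(waves)
# [['find_director', 'find_release_year'], ['director_birthplace'], ['answer']]
```

Four sub-tasks finish in three waves because the two lookups don’t depend on each other. Turn the graph into a straight chain and every wave shrinks to a single node, which is the sequential reason-act-repeat loop again.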

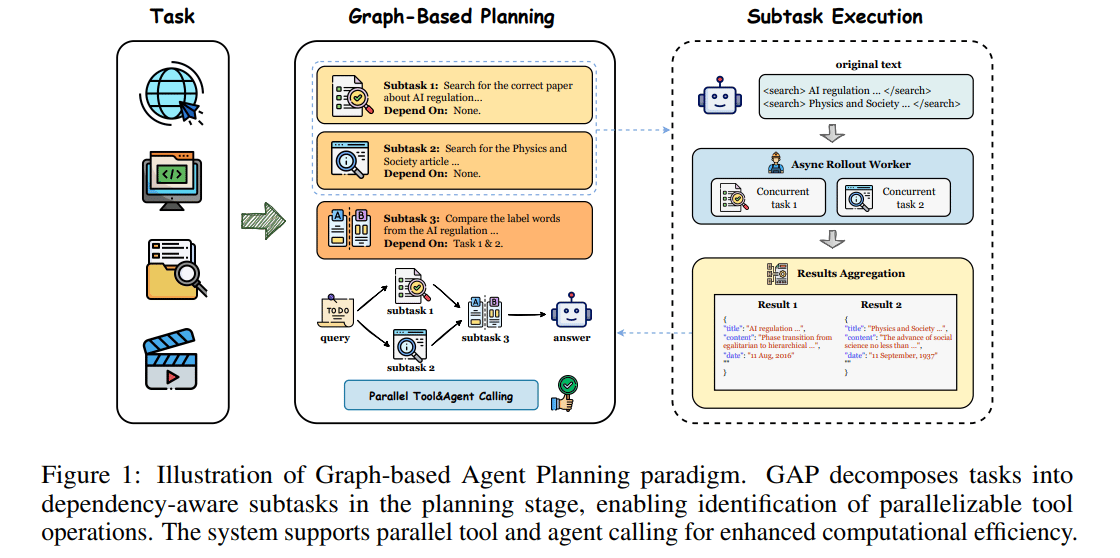

In tests on multi-hop reasoning benchmarks, GAP cut reasoning turns by a third and response length by a quarter while slightly improving accuracy. Not huge gains, but meaningful ones.

Source: GAP

It looks like an early sign of agents learning to coordinate multiple threads of thought. Parallel cognition. They can decompose, synchronize, and merge reasoning paths dynamically instead of waiting in line.

The big question is scalability. Parallel thinking works when sub-tasks are independent, but most real-world problems have fuzzy overlaps and shared context. At some point, coordination overhead will start to drag. Still, the direction feels right.

#3 Agentic Operating System

Whoever owns the OS owns the universe. OpenAI knows it. That’s why it launched Atlas, its experimental “AI browser,” last week.

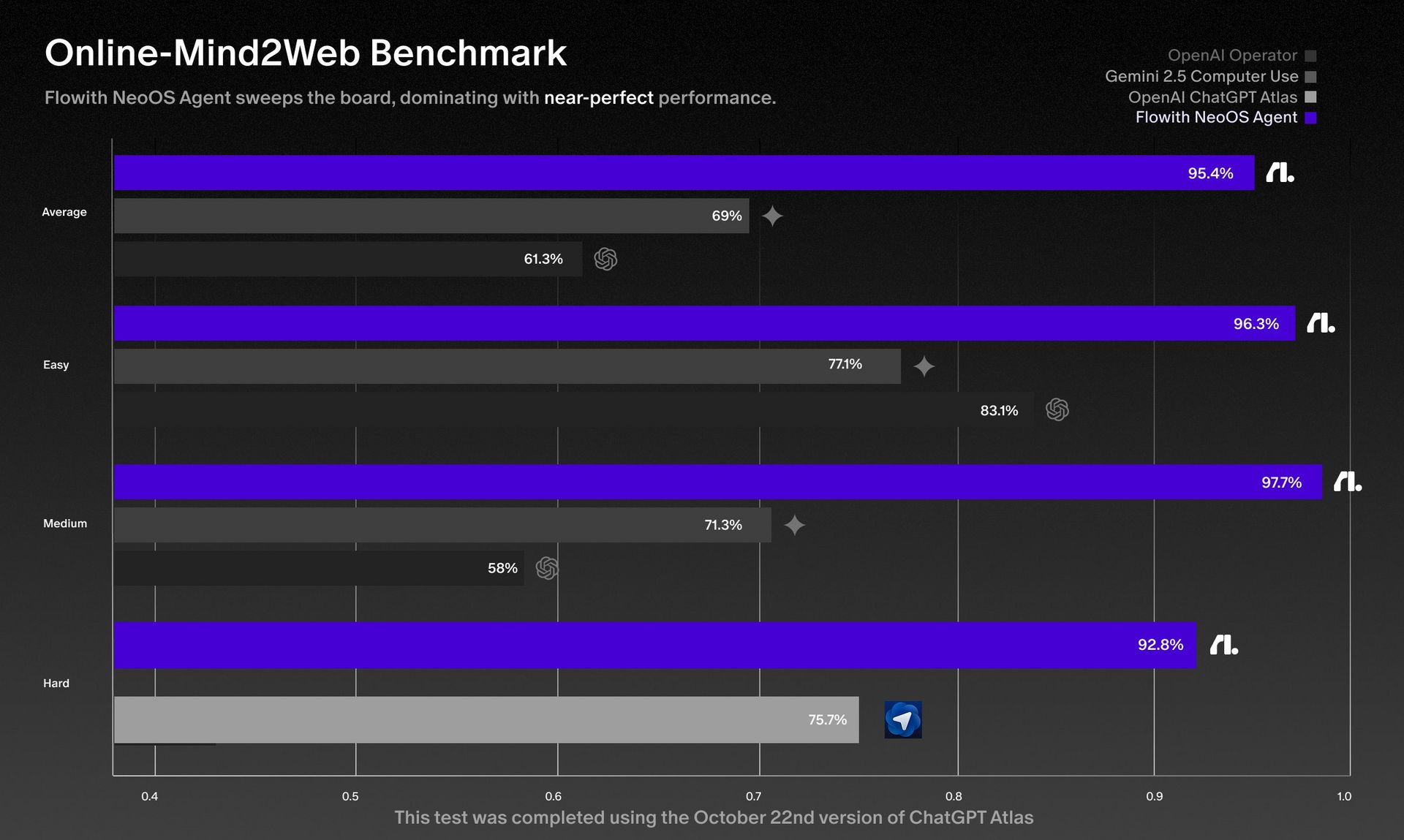

But I came across a new contender. FlowithOS is building something more radical: an operating system designed natively for AI agents.

Instead of clicking through tabs or firing off API calls, FlowithOS lets agents coordinate full workflows across the internet. Think of a pipeline that runs research → analysis → draft → publish. Humans and agents working together on the same shared canvas.

Under the hood sits a reflective memory system. Every interaction updates the model’s internal state, shaping how it plans, what it prefers, and how it adapts. Over time, it learns your patterns and optimizes around them. It’s almost like a “teammate that evolves.”

In early benchmarks, it reached a 97.7% execution rate and 95.4% average accuracy, outperforming every major AI browser. On the hardest benchmark level, it scored 92.8, while ChatGPT’s new Atlas topped out at 75.7.

Source: X

To be fair, calling it an “operating system” today might be a stretch. We don’t yet know how it performs at scale, how it recovers from failure, or how well its memory holds up over time. But it’s a thoughtful step toward more human-style collaboration.

I’m convinced whoever cracks “agent orchestration + memory + workflow” for teams will be the next major disruptor. The race to build the great operating system of the AI era is already underway, and the stakes are high.

#4 Lightning Strikes the Agent Loop

Usually, making your agent smarter means tearing it apart and retraining.

Microsoft just decided that’s dumb.

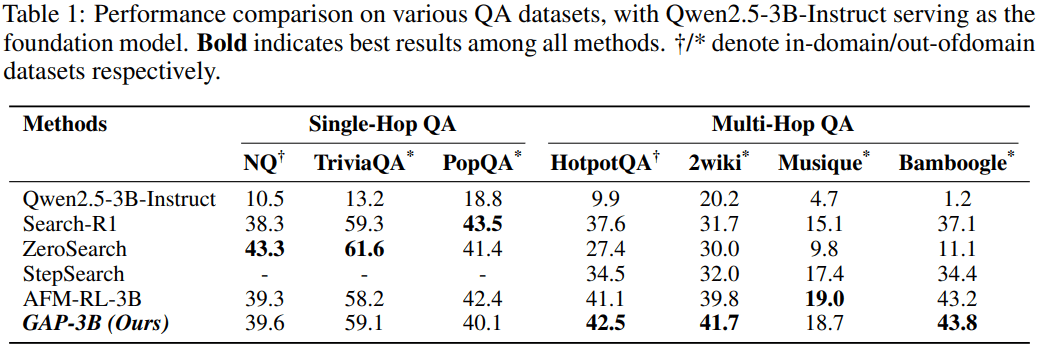

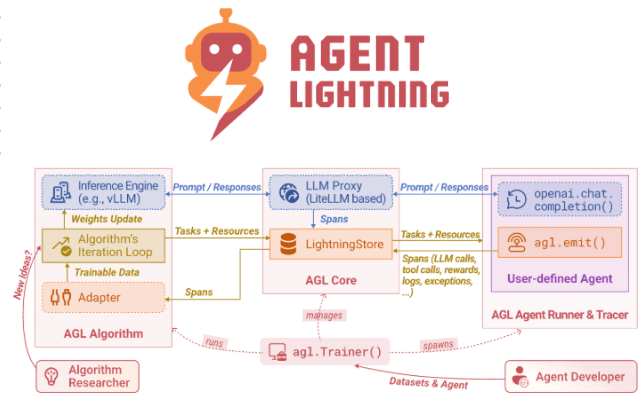

They launched Agent Lightning, a full-stack training framework that lets any AI agent learn from its own experience. The framework plugs reinforcement learning, prompt optimization, and fine-tuning directly into existing agents with little to no code changes.

Source: GitHub

Under the hood, Lightning treats each agent run as a Markov decision process, mapping every state, action, and reward into structured traces.

These traces feed into a shared LightningStore, where reinforcement and optimization algorithms continuously update the model. Its hierarchical RL algorithm, LightningRL, assigns credit across multi-step reasoning, so agents learn from both successes and errors without changes to their core logic.
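To make “credit across multi-step reasoning” a little more concrete, here’s a minimal Python sketch of a trace as (state, action, reward) steps, with a plain discounted return pushing a final reward back onto earlier steps. This is the textbook scheme, not LightningRL’s hierarchical method, and the Transition fields are my assumption rather than LightningStore’s actual schema.

```python
from dataclasses import dataclass


@dataclass
class Transition:
    state: str     # what the agent saw (e.g. prompt plus context)
    action: str    # what it did (e.g. an LLM output or tool call)
    reward: float  # often zero until the task is judged at the end


def discounted_returns(trace: list[Transition], gamma: float = 0.9) -> list[float]:
    """G_t = r_t + gamma * G_{t+1}, computed from the last step backwards."""
    g = 0.0
    out: list[float] = []
    for step in reversed(trace):
        g = step.reward + gamma * g
        out.append(g)
    return out[::-1]


# Hypothetical SQL-agent episode: only the final, correct query earns reward.
trace = [
    Transition("user question", "draft SQL", 0.0),
    Transition("SQL draft", "run query", 0.0),
    Transition("query error", "fix SQL", 1.0),
]
print(discounted_returns(trace, gamma=0.5))  # [0.25, 0.5, 1.0]
```

With gamma = 0.5, the step that fixed the query gets full credit and earlier steps get progressively less. Picking gamma, and the reward itself, is exactly the kind of design decision that still needs a human.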

The key implication here is that agents become products that get better as they run.

You can drop Lightning into systems like LangChain, the OpenAI Agents SDK, or your own setup, and it starts optimizing on its own. That’s a big deal for teams that don’t have the time or resources to rebuild their stack every time a model improves.
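The “drop it in” idea usually rests on wrapping the agent rather than rewriting it. Here’s a minimal Python sketch of that pattern: a decorator that records each call’s inputs and output into a store. TRACE_STORE, traced, and answer are hypothetical names, and this is not Agent Lightning’s actual API; it only shows why one added line can be enough to start collecting training data.

```python
import functools
from typing import Any, Callable

# Hypothetical stand-in for a shared trace store.
TRACE_STORE: list[dict[str, Any]] = []


def traced(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Record each call's inputs and output; the wrapped function is untouched."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        output = fn(*args, **kwargs)
        TRACE_STORE.append(
            {"fn": fn.__name__, "args": args, "kwargs": kwargs, "output": output}
        )
        return output
    return wrapper


# An existing agent step; the only change is the decorator line.
@traced
def answer(question: str) -> str:
    return f"draft answer to: {question}"


answer("which customers churned last month?")
print(TRACE_STORE[0]["output"])  # draft answer to: which customers churned last month?
```

A trainer could later attach rewards to these records and use them to update prompts or weights. That’s where the real work lives.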

I should add the caveats: so far, most of the testing is in controlled domains like SQL generation or document retrieval, and human oversight is still needed to design rewards correctly.

But the real story here isn’t RL at all; it’s integration. The smartest teams will stop treating their agents as fixed products and start treating them as living systems that grow sharper with every interaction.

#5 The Code Critic

Every good coder has that colleague. The one who scans your pull request, sighs and says, “You forgot to run the tests again.” Quibbler is that colleague, but for your coding agents.

Built by Fulcrum Research, Quibbler quietly shadows your AI developers, watching every line they touch.

Skip a test? Hallucinate a function? Break a style rule? Quibbler calls it out before it ever hits commit.
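For a feel of the simplest version of one of these checks, here’s a Python sketch that flags calls to functions that are never defined, imported, or built in, a common fingerprint of a hallucinated function. It uses only the standard library’s ast module; flag_undefined_calls and the sample patch are hypothetical, and this is not how Quibbler works under the hood.

```python
import ast
import builtins


def flag_undefined_calls(source: str) -> list[str]:
    """Return names called as plain functions but never defined, imported, bound, or built in."""
    tree = ast.parse(source)
    known = set(dir(builtins))
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            known.add(node.name)
        elif isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                # "import os.path" binds "os"; "import numpy as np" binds "np"
                known.add((alias.asname or alias.name).split(".")[0])
        elif isinstance(node, ast.arg):
            known.add(node.arg)  # function parameters
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            known.add(node.id)  # assignments, loop targets, "with ... as x"
    called = {
        node.func.id
        for node in ast.walk(tree)
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
    }
    return sorted(called - known)


# Hypothetical patch from a coding agent that invents a helper.
agent_patch = """
import json

def load(path):
    with open(path) as f:
        return json.load(f)

def main():
    data = load("config.json")
    validate_schema_strict(data)
"""
print(flag_undefined_calls(agent_patch))  # ['validate_schema_strict']
```

It ignores scopes and skips attribute calls like json.load, so it will miss plenty. It only shows how cheap a first-pass guard can be.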

You can run it in Hook Mode for real-time oversight (already used with Claude Code), or in MCP Mode, where any agent such as Cursor, ChatGPT, or your in-house stack calls Quibbler’s review_code tool mid-session for inspection. It’s open source, lightweight, and already lives on GitHub.

Over time, it learns your project’s quirks (like naming conventions), then begins enforcing them automatically. The longer it runs, the more it feels like a senior engineer quietly guarding your repo.

IMO, this kind of oversight is about to become essential. Studies have shown that agent hallucinations frequently creep in mid-task rather than at the final output. Quibbler catches those mistakes while the agent is still thinking. If you’re running long-lived coding agents, adding a critic like this is quickly becoming table stakes.

Zooming out, I can see the bigger pattern: we’re entering the Agent Oversight Era. First, we built agents to generate code. Now we’re building agents to govern them.

What I’m Testing

I’ve been pondering this question: What happens when every AI agent gets its own debit card?

Here’s how I see it: Neo-banks + AI agents + stablecoins = the new financial stack.

Agentic payments are starting to happen. Yet traditional banks can’t even imagine this new architecture. I’ve been testing Tria after a great chat with the founder last week. With a Tria card, you can spend anywhere Visa works. It feels like Revolut, except you actually own your money.

Tria’s offering 20% off card prices with my code (I get a small rev share). The base card is $20, and the Premium card unlocks up to 6% cashback and access to airport lounges. I paid for a Premium card myself and am waiting for it to ship. Will share my experience as it matures.

To end off, a few other pieces in motion this week:

Cursor launched Cursor 2.0, with a multi-agent coding interface and its new Composer model.

OpenAI introduced Aardvark, an autonomous GPT-5–powered security agent that scans, validates, and patches vulnerabilities.

Researchers unveiled DeepAgent, a reasoning system that lets AI agents discover and use tools on demand from more than 16,000 options.

SS&C launched an AI Agent Store offering prebuilt agents for finance, compliance, and legal workflows.

Treasure Data introduced a “Super Agent” that coordinates multiple marketing agents for end-to-end campaign automation.

We are entering a phase where the world reacts to software that thinks. The interfaces are disappearing. A new class of autonomy is forming in front of us. I want this community to stay early and stay sharp. Catch you next week ✌️

→ Got a story worth spotlighting? Whether it’s a startup, product, or research finding, send it in through this form. We may feature it next week.

→ Don't keep us a secret: Share this email with your best friend.

Cheers,

Teng Yan & Ayan