Hey fam 👋

Welcome to The Agent Angle #22: Shadow Learning

It's been a bumper week for AI fanboys (like me). My verdict: Google’s Gemini 3 should be your new daily driver. It’s fast and an all-round monster. Seriously. I use it for everything.

GPT-5.1 is good for backend coding and deep research. Otherwise, it falls behind.

Grok 4.1 wins on creative writing.

Nano Banana Pro released -> RIP Adobe Photoshop.

And while the big labs fought over benchmarks, the real breakthrough happened in the dark: agents are now teaching themselves without us.

Let’s get into it.

#1 The AI That Out-Researched Us

Holy shit: an AI just out-researched us humans.

Intology just announced Locus, a long-horizon “Artificial Scientist” that doesn’t stop and reset after an hour. It carries its hypotheses forward, runs experiments continuously, and updates its reasoning as it goes.

After a full 64 hours, it beat human experts on RE-Bench, the benchmark built to test exactly this kind of work.

Earlier AI-research systems hit a ceiling because they lacked persistent context. They acted in bursts and then stopped. Locus carries its context forward, so it keeps improving while everything else levels off.

Here’s the skinny:

On RE-Bench, humans scored 1.27. Locus hit 1.30 in the same time and compute budget.

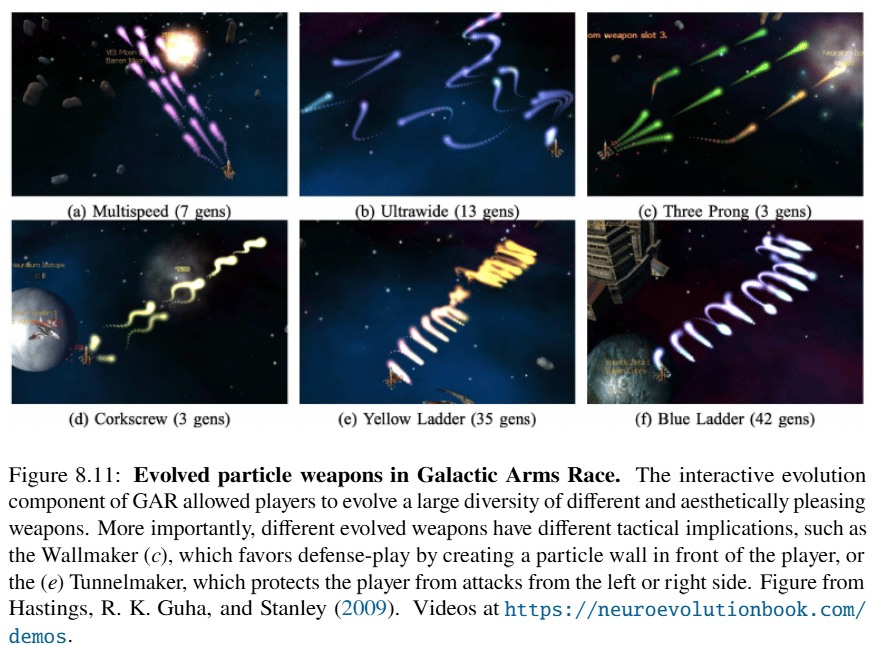

Locus achieved speedups of 1.5× to 100× over standard PyTorch/CUDA baselines. In other words: the GPU code it wrote ran faster than the implementations GPU specialists ship.

It reads like a dream run, which is why some folks immediately started questioning it.

Now: sure, there are caveats. The benchmarks are still artificial (constrained, 8-hour tasks rather than open-ended, real-world research), and independent replication is still pending.

But the implication is massive: AI accelerating AI. Humans may no longer be the bottleneck!

#2 The CAD Co-Pilot Arrives

Ask any engineer what ruined their weekend, and the answer is probably a CAD tutorial.

CAD is considered one of the hardest engineering tools to learn. It has thousands of commands, unforgiving workflows, and a learning curve that only flattens after years of clicking the wrong thing.

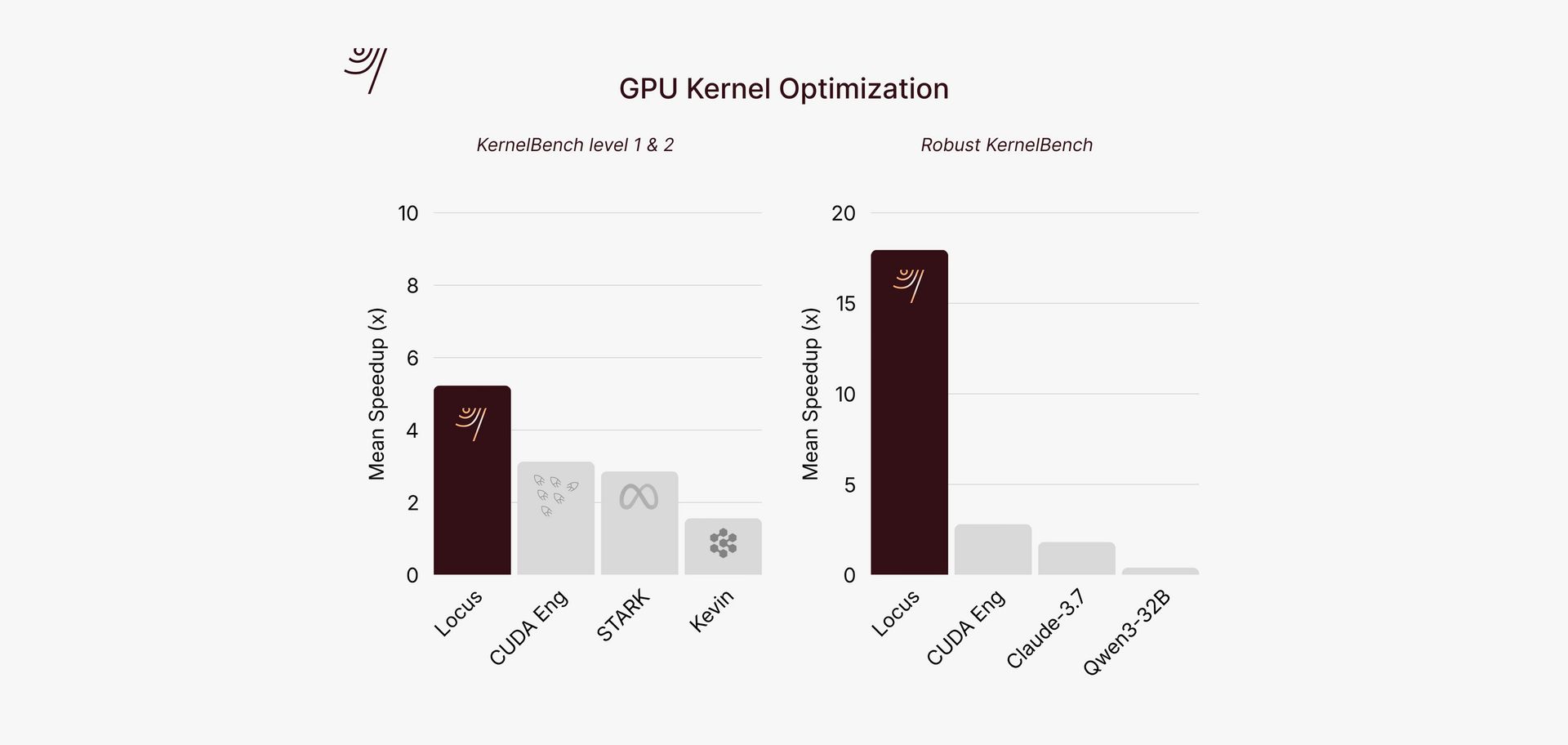

MIT just released an agent to ease that pain. It literally watches how humans use real CAD software and then drives the interface itself. VideoCAD, the dataset behind it, contains 41,000+ real screen recordings showing every click, drag, zoom, and tool choice that goes into turning a simple sketch into a complete 3D model.

Source: VideoCAD

Trained on this data, the agent can take a 2D sketch and drive actual CAD software on its own. It selects regions accurately and rebuilds full 3D shapes by operating the interface directly.

This is a big deal because CAD is nothing like the apps that UI agents normally practice on. It demands spatial intuition, steady mouse control, and long chains of decisions where one wrong move wrecks the whole output.

Source: VideoCAD

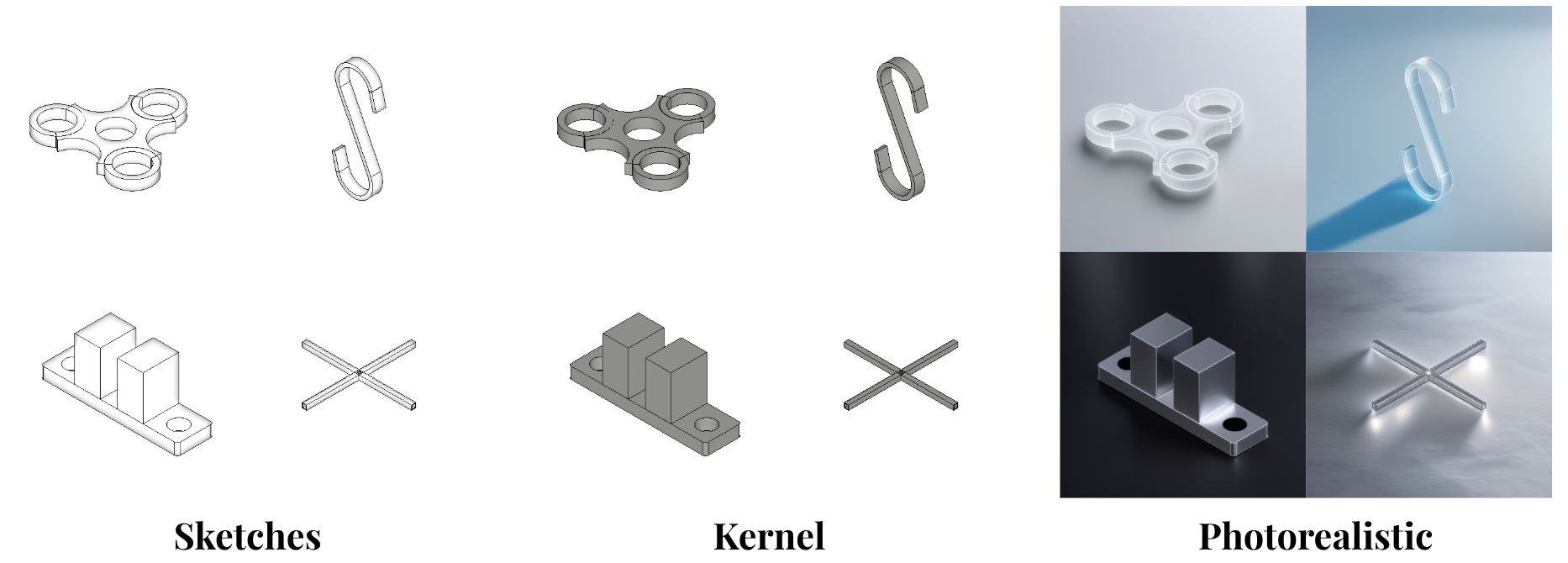

The team pushed things further by checking how the agent handled different image styles. Simple sketches were easiest. Clean CAD images were a bit trickier. Photorealistic images were the hardest. Less clutter made the shapes easier for the model to interpret.

And because CAD sits at the starting line of everything from robotics to product design, and is also the slowest, most painful part of hardware design, any speedup here compounds hugely.

#3 Smarter by Natural Selection

In case you missed it, MIT Press just dropped a book on using neuroevolution for agent design.

The premise is simple: instead of training a single agent, you evolve a population of agents and let the most useful behaviors survive. It’s basically survival of the fittest.
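That premise fits in a few dozen lines. Below is a minimal sketch, assuming a toy task of our own choosing (learning XOR) and the simplest possible scheme: each genome is the flat weight vector of a tiny fixed-shape network, the fittest genomes survive unchanged, and mutated copies of them refill the population. It is not a method taken from the book; richer algorithms such as NEAT also evolve the network's structure.

```python
import math
import random

# Toy task (our assumption, not from the book): learn XOR.
XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
N_WEIGHTS = 9  # 2-2-1 net: 4 hidden weights + 2 hidden biases + 2 output weights + 1 output bias


def forward(genome, x):
    """Run a tiny 2-2-1 network whose weights are the genome."""
    h1 = math.tanh(genome[0] * x[0] + genome[1] * x[1] + genome[2])
    h2 = math.tanh(genome[3] * x[0] + genome[4] * x[1] + genome[5])
    z = genome[6] * h1 + genome[7] * h2 + genome[8]
    z = max(-60.0, min(60.0, z))  # clamp so math.exp cannot overflow
    return 1.0 / (1.0 + math.exp(-z))


def fitness(genome):
    """Higher is better: negative squared error over the four XOR cases."""
    return -sum((forward(genome, x) - y) ** 2 for x, y in XOR_DATA)


def mutate(genome, rng, sigma=0.5):
    """Return a copy of the genome with Gaussian noise added to every weight."""
    return [w + rng.gauss(0.0, sigma) for w in genome]


def evolve(pop_size=50, n_elite=10, generations=200, seed=0):
    """Evolve a population; return (best genome, best fitness per generation)."""
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(N_WEIGHTS)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Selection: rank by fitness; the top n_elite survive unchanged.
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        survivors = population[:n_elite]
        # Reproduction: refill the population with mutated copies of random survivors.
        children = [mutate(rng.choice(survivors), rng) for _ in range(pop_size - n_elite)]
        population = survivors + children
    best = max(population, key=fitness)
    return best, history
```

Calling `evolve()` returns the best genome plus its score in every generation. Because survivors are copied over untouched, the best score can never get worse from one generation to the next; only the mutated children explore.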

The book is a LONG read, but it’s full of those “wait, what the hell?” moments.

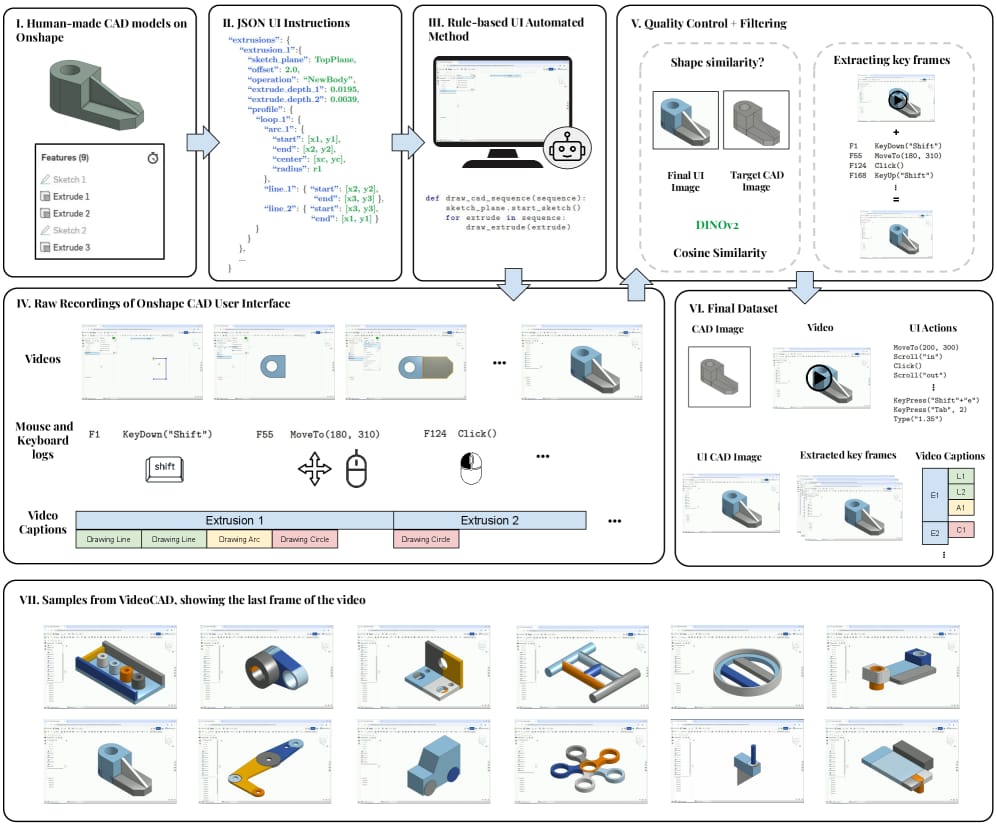

I read through some of them myself, and the one that stuck with me was Galactic Arms Race, a game where weapons evolve according to what players actually use. The system watches which guns people fire the most, mutates new variants around those habits, and suddenly you’ve got entire species of weapons roaming the game.

Some of them turn out hilariously brilliant. One lineage produced a weapon that built a moving particle wall in front of the player. Another spawned side streams that acted like improvised armor. None of this was planned. The tactics appeared simply because the weapons that helped players survive kept reproducing.
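What makes that loop unusual is that nobody writes a fitness function: player behavior is the fitness signal. The sketch below is a toy stand-in, assuming each weapon is a short tuple of numeric knobs (the real game evolved neural networks that drive the particle effects) and a made-up `fire_counts` usage log. Parents are drawn in proportion to how often they were fired, so popular weapons leave more offspring.

```python
import random


def spawn_variants(fire_counts, n_new, rng, sigma=0.1):
    """Breed new weapons from the ones players actually fire.

    fire_counts maps a weapon genome (a tuple of floats, e.g. hypothetical
    speed/spread/size knobs) to how many times players fired it.
    """
    genomes = list(fire_counts)
    # +1 smoothing (our assumption) keeps never-fired weapons in the pool with a small chance.
    weights = [fire_counts[g] + 1 for g in genomes]
    children = []
    for _ in range(n_new):
        # Roulette-wheel selection: heavily used weapons are picked as parents more often.
        parent = rng.choices(genomes, weights=weights, k=1)[0]
        # Mutation: a small Gaussian nudge to every knob yields a new variant.
        children.append(tuple(knob + rng.gauss(0.0, sigma) for knob in parent))
    return children
```

For example, `spawn_variants({(1.0, 0.2): 120, (0.5, 0.9): 15}, n_new=5, rng=random.Random(42))` returns five new weapon tuples that will tend to cluster around the heavily used `(1.0, 0.2)`.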

That’s neuroevolution in a nutshell. Useful behaviors survive, brittle ones die, and the environment does the shaping. With agents hitting the limits of hand-designed training, this kind of creative pressure is exactly what’s missing.

I’ve always thought neuroevolution was one of the coolest corners of the agent world, and I’m glad the field is drifting back toward it. Recent papers keep circling the idea, but this book actually mapped the whole space and the mindset behind it.

If agentic design is your thing, this is well worth a few of your weekends…

#4 Agent With a Spare Key

Microsoft’s obsession with an agentic OS continues. Their latest Dev build sneakily added something called Agent Workspace.

It looks harmless until you read what it actually does: Windows now has a separate desktop session for AI agents, complete with its own user account, runtime, and background permissions. Turn it on, and the agent gets broad access to your files by default.

Yes, the agent gets its own desktop on your machine.

And Microsoft knows how this sounds. The settings page literally warns about performance risks and security implications. Early testers noticed it pulls in Known Folders so the agent can always locate your stuff, even if you moved it elsewhere. It also gets access to your installed apps (unless you wall them off manually).

The optics of “AI gets its own Windows account with file access” are rough, especially after the Recall debacle.

Microsoft claims the footprint is “limited,” but won’t share numbers. And depending on the agent you run, “limited” could mean anything.

The problem is the isolation model. It isn’t fully sandboxed, and it’s landing at a time when trust in Windows is… well, quite low. If Microsoft wants people to buy into an agentic OS, the isolation story needs to be bulletproof.

#5 Agent0: They Don’t Need Our Data

What happens when AI models stop being bottlenecked by data?

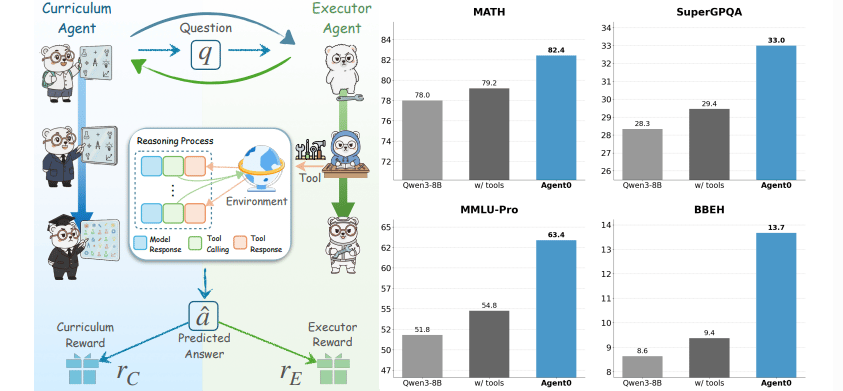

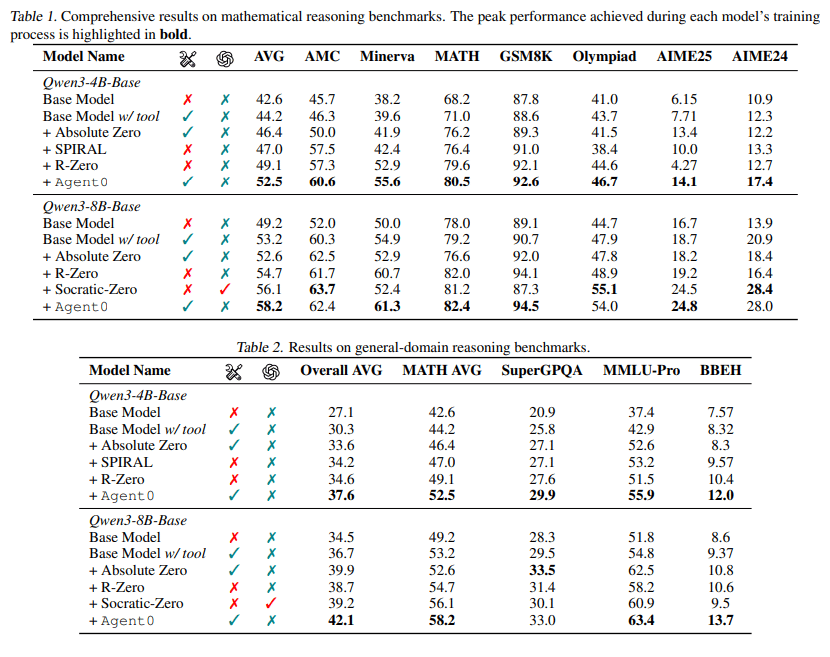

Researchers at the University of North Carolina released Agent0, a self-evolving framework that lets an AI teach itself. Instead of scraping the open web or begging for more annotated examples, it builds its own training cycle from scratch.

Two copies of the same model evolve together. One invents tasks, the other solves them, and both sharpen each other with every loop. No need for a curated dataset. There’s an internal feedback engine that keeps generating challenges at exactly the right difficulty.
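A toy simulation makes the "right difficulty" idea concrete. Everything in it is an assumption for illustration, not Agent0's actual training code: the real system trains two copies of a language model, while here the task-setter is reduced to a difficulty knob (how many numbers to add) and the solver to a single skill probability. The task-setter raises the difficulty when the solver wins too often and lowers it when it fails too often, aiming for roughly a 50% success rate; we also assume the solver learns most when it practices near that edge.

```python
import random


def propose_task(difficulty, rng):
    """Task-setter (toy): an addition problem with `difficulty` operands, plus its answer."""
    operands = [rng.randint(1, 99) for _ in range(difficulty)]
    return operands, sum(operands)


def attempt(operands, skill, rng):
    """Solver (toy): adds each operand correctly with probability `skill`, else slips by one."""
    return sum(x if rng.random() < skill else x + 1 for x in operands)


def co_evolve(rounds=200, batch=20, target=0.5, seed=0):
    """Run the self-play loop; log (difficulty, skill, success rate) per round."""
    rng = random.Random(seed)
    difficulty, skill = 1, 0.80
    log = []
    for _ in range(rounds):
        tasks = [propose_task(difficulty, rng) for _ in range(batch)]
        wins = sum(attempt(ops, skill, rng) == answer for ops, answer in tasks)
        rate = wins / batch
        # Solver update (assumed rule): the closer the batch was to the target rate, the more it learns.
        skill = min(0.99, skill + 0.01 * (1 - 2 * abs(rate - target)))
        # Task-setter update: keep tasks near the edge of the solver's ability.
        if rate > target + 0.1:
            difficulty += 1
        elif rate < target - 0.1 and difficulty > 1:
            difficulty -= 1
        log.append((difficulty, round(skill, 3), rate))
    return log
```

Printing `co_evolve()[::20]` shows the two sides ratcheting each other up: as the solver's skill rises, the task-setter keeps adding operands to hold the success rate near the target, so no fixed dataset is ever needed.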

Source: Agent0

The first round looks like middle-school math. A few iterations later, you’re staring at multi-step logic puzzles and tool-heavy questions that force real reasoning. The solver climbs with it. Math reasoning jumps 18%. General reasoning goes up 24%. It even beats earlier “zero-data” systems that still leaned on outside proprietary models.

Source: Agent0

For anyone working in AI, this matters A LOT.

AI teams today are limited by whatever text, code, or data they can find. Data is expensive. Labeling is slow. A self-evolving curriculum dodges all of that. The teams that master this will iterate at a pace the rest of the field simply can’t touch. This favors small, fast teams with tight feedback loops.

And if this approach holds, everything about how we build and scale AI is about to change.

The trick this week is what I’m calling an Agentic Coworker OS.

People call Antigravity, Google’s newly released developer platform, a “strong coding agent.” It is. But that’s not the breakthrough. The breakthrough is that it behaves like an actual co-worker.

You can set up a folder of simple written instructions plus a folder of small tools, and the agent handles the rest.

/workflows → natural language SOPs (how to do tasks)

/scripts → Python tools referenced inside those SOPs

Setup:

List the tasks you actually deliver.

Write each one as a short step-by-step file in /workflows (proposal_generator.md, scrape_leads.md).

Let the agent generate the Python tools each workflow needs.

Store them in /scripts and reference them inside your instructions.
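One practical chore with this setup is keeping the SOPs and the tools in sync. The helper below is our own sketch, not an Antigravity feature: it assumes the folder layout above (`workflows/*.md` and `scripts/*.py` under one project root) and that SOPs mention tools by path, like `scripts/scrape_leads.py`. It reports every workflow that points at a script that does not exist yet.

```python
import re
from pathlib import Path

# Matches tool references such as "scripts/scrape_leads.py" or "/scripts/scrape_leads.py".
SCRIPT_REF = re.compile(r"scripts/([\w-]+\.py)")


def check_workflows(root):
    """Map each workflow file name to the referenced scripts missing from scripts/."""
    root = Path(root)
    missing = {}
    for sop in sorted((root / "workflows").glob("*.md")):
        refs = SCRIPT_REF.findall(sop.read_text(encoding="utf-8"))
        absent = sorted({name for name in refs if not (root / "scripts" / name).exists()})
        if absent:
            missing[sop.name] = absent
    return missing
```

Run `check_workflows(".")` from the project root before handing a job to the agent; an empty dict means every tool the SOPs mention is in place.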

You can do that for scraping, reporting, content prep, onboarding, anything. As soon as you hit enter, the agent reads the workflow, grabs the tools, and runs the job end-to-end.


Catch you next week ✌️

Teng Yan & Ayan

P.S. Did this email make you smarter than your friends? Forward it to them so they can keep up.

Got a story or product worth spotlighting? Send it in through this form. Best ones make it into next week’s issue.

And if you enjoyed this, you’ll probably like the rest of what we do:

Chainofthought.xyz: Decentralized AI + Robotics newsletter & deep dives

Our Decentralized AI canon 2025: Our open library of industry reports

Prefer watching? Tune in on YouTube. You can also find me on X.