Hey COT friends 👋

Welcome to The Agent Angle #23: New Frontlines. In this edition:

UK Police just put an agent on the 101 non-emergency line.

A 6-person team just "cracked" ARC-AGI.

A prompt that lets you clone any automation workflow from a screenshot.

This week made one thing painfully clear to me: Agents aren’t a "2025 problem." They are marching straight into the institutions we assumed were immune.

The bureaucracy is cracking. Here is what the new world could look like…

#1 Britain’s Police Just Went Agentic

An institution built on paper forms just put an agent on their hotline.

Humberside Police rolled out a triage agent for the 101 line (the UK’s non-emergency number). It interviews callers, asks follow-up questions, checks whether a crime occurred, and writes up the report for officers to review.

Early projections say it could take on about 30% of the UK’s non-emergency calls.

Source: Police Digital Service UK

Now I don’t live in the UK, but friends tell me that if you have ever tried calling the non-emergency line, this makes a lot of sense. The line is overloaded, staff numbers are thin, and callers can sit on hold forever. A structured interview system fits agents perfectly because it forces messy caller stories into consistent fields that humans can review without burning an hour per call.

But there’s a catch: if the agent decides your issue is not a crime, a human might never hear about it. That’s a very different failure mode from waiting on hold for an hour.

The public reaction was predictable. Every announcement thread on it turned into a roast session:

Source: Reddit

I get it. An AI parroting the same line back at you is the last thing you want to hear when your car gets stolen.

Humberside isn’t the only one testing this. A SARA voice agent also showed up in a Canadian station last week. Two police forces on two continents rolling out the same idea at the same time is probably not random. Manual triage doesn’t scale.

Agents are sliding into institutions that used to feel untouchable. Once police intake goes agentic, every other slow administrative corner is fair game. The school line. The housing office. The local council inbox. All of it.

Would you trust an AI agent to file your police report?

#2 Poetiq’s Agent Nukes ARC-AGI

A tiny six-person team just yanked us closer to AGI.

Poetiq dropped a recursive, self-improving agent that crushes both versions of the ARC-AGI benchmark, including the latest, much tougher 2025 edition, ARC-AGI-2. And it actually beat the human baseline (yes, you and me).

ARC-AGI was supposed to be the benchmark impossible to “solve” by brute force or scale. It was built to test fluid intelligence and true generalization. Things humans do easily, but AI struggles with.

Source: Poetiq

What’s mindblowing is that Poetiq didn’t just run on frontier models. They built a model-agnostic architecture that you can bolt onto almost any LLM.

Want high-end? They plugged in Gemini 3 and GPT‑5.1.

Want cost-efficient? They swapped in open-weight models like GPT‑OSS‑120B.

The magic is in the loop. Instead of asking “what’s the answer” once, the system “plans → codes → tests → audits → refines” again and again. It treats reasoning like coding and debugging. That trick turns mediocre LLM output into truly robust reasoning.
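To make the loop concrete, here is a minimal Python sketch of a propose, test, refine cycle. This is not Poetiq's code. `propose` is a hypothetical stand-in for an LLM call, and the toy task (find a function that fits a few input/output pairs) is an assumption picked so the example runs on its own.

```python
from typing import Callable, List, Optional, Tuple

Example = Tuple[int, int]  # (input, expected output)
Candidate = Tuple[str, Callable[[int], int]]

# Hypothetical candidate "programs" a model might propose, in order.
CANDIDATES: List[Candidate] = [
    ("x + 1", lambda x: x + 1),
    ("x * 2", lambda x: x * 2),
    ("x * x", lambda x: x * x),
]

def propose(attempt: int, feedback: List[str]) -> Candidate:
    """Stand-in for an LLM call. A real system would read `feedback`
    (the failures from the last round) before writing new code."""
    return CANDIDATES[attempt % len(CANDIDATES)]

def run_tests(fn: Callable[[int], int], examples: List[Example]) -> List[str]:
    """Run the candidate on every example and collect the failures."""
    failures = []
    for x, expected in examples:
        got = fn(x)
        if got != expected:
            failures.append(f"f({x}) = {got}, expected {expected}")
    return failures

def solve(examples: List[Example], max_rounds: int = 5) -> Optional[str]:
    """Loop: propose -> test -> feed failures back -> propose again."""
    feedback: List[str] = []
    for attempt in range(max_rounds):
        name, fn = propose(attempt, feedback)
        failures = run_tests(fn, examples)
        if not failures:
            return name  # every example passed
        feedback = failures  # becomes context for the next round
    return None  # gave up within the round budget

print(solve([(2, 4), (3, 9), (4, 16)]))  # prints: x * x
```

The point of the sketch is the shape: the answer is only accepted once it survives the tests, and each failure becomes input for the next attempt.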

Their summary of the whole architecture: it’s LLMs all the way down.

Still, healthy skepticism is in order. So far, the results are on the public evaluation datasets, where there may be overfitting. The system is open-source, and the ARC team said they’re already on it, so we’ll know soon enough.

#3 Your Next Pathologist Is Probably An AI

An AI agent just read a cancer slide the way a human pathologist would.

Researchers released PathAgent, a zero-shot diagnostic agent that reads whole-slide pathology images. It explores entire slides tile by tile, tests its own hypotheses, and beats models that were purpose-built for this job.

Source: PathAgent

PathAgent doesn’t rely on a custom model for each slide type. Its framework is LLM-based and plug-and-play. It builds an explicit “chain-of-thought” log: exactly which regions it examined, why it zoomed in, what features it spotted, and how that led to its diagnosis. That transparency alone would make it valuable in medicine.

On SlideBench-VQA (the main benchmark for pathology), it hit 56% accuracy in zero-shot mode, beating specialized whole-slide imaging (WSI) systems that were literally built for this task. In one go, without fine-tuning.

Computational pathology has long struggled with the scale and complexity of WSIs (gigapixel-scale, high-resolution images that can contain tens of thousands of “tiles” per slide). Most prior work trained on tile subsets, requiring substantial labeled data.

PathAgent seems to mark a turning point. It supports my conviction that AI will take over a large part of our doctors’ work. Pathology and radiology will be the first fields to be transformed by AI, because they are all about pattern recognition. Look, Gemini 3 is already outscoring radiology residents:

Source: X

Soon, it’s going to be malpractice if your doctor doesn’t double-check their diagnosis and treatment plan with the AI.

#4 Google Spent Billions. This Took a Sandbox.

A handful of engineers just built a sandbox where AIs reinvent research. And it’s beating the systems built by giants with billions of dollars behind them.

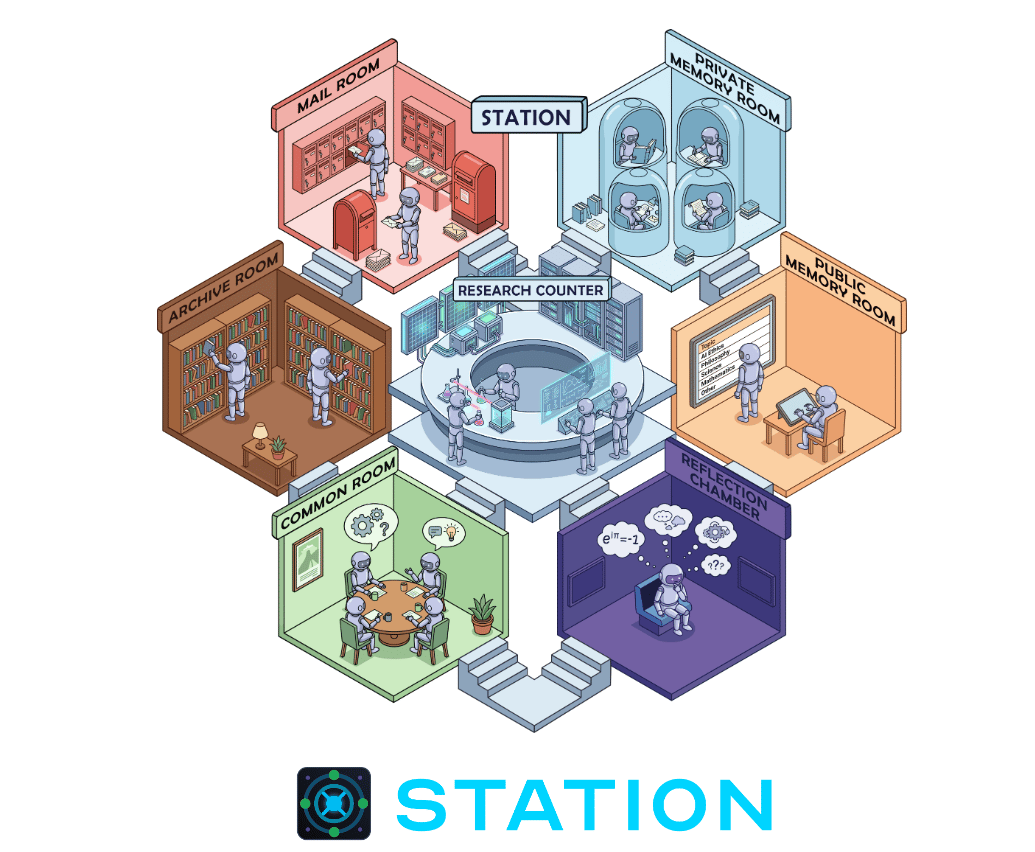

The Station from Dualverse AI is a full-blown, open-world mini-lab: agents roam through rooms, debate hypotheses, write code, run experiments, store long-term memory, publish papers, and build on each other’s work.

…without a human manager telling them what to do.

The environment is tick-based: at every tick, agents choose a free-form action from a large action space. This results in them inventing new methods and combining ideas across domains.
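If "tick-based" sounds abstract, here is a rough Python sketch of the idea. It is not The Station's implementation: the agent names, the four actions, and the shared-memory list are all hypothetical, and a seeded random choice stands in for an LLM deciding what to do.

```python
import random
from dataclasses import dataclass, field
from typing import List

# Hypothetical action space; the real one is described as much larger.
ACTIONS = ["read_archive", "run_experiment", "write_note", "debate"]

@dataclass
class Agent:
    name: str
    log: List[str] = field(default_factory=list)

    def choose_action(self, rng: random.Random) -> str:
        # Stand-in for an LLM picking a free-form action.
        return rng.choice(ACTIONS)

def run_station(agents: List[Agent], ticks: int, seed: int = 0) -> List[str]:
    """Advance the world one tick at a time; every agent acts once per tick."""
    rng = random.Random(seed)
    shared_memory: List[str] = []
    for tick in range(ticks):
        for agent in agents:
            action = agent.choose_action(rng)
            entry = f"tick {tick}: {agent.name} -> {action}"
            agent.log.append(entry)
            if action == "write_note":
                # Notes persist, so other agents could build on them later.
                shared_memory.append(entry)
    return shared_memory

agents = [Agent("alpha"), Agent("beta")]
notes = run_station(agents, ticks=5)
print(len(agents[0].log), "actions per agent,", len(notes), "shared notes")
```

Nobody assigns tasks in this loop. The only structure is the clock and the shared memory, which is roughly the design choice the paragraph above describes.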

Source: Github

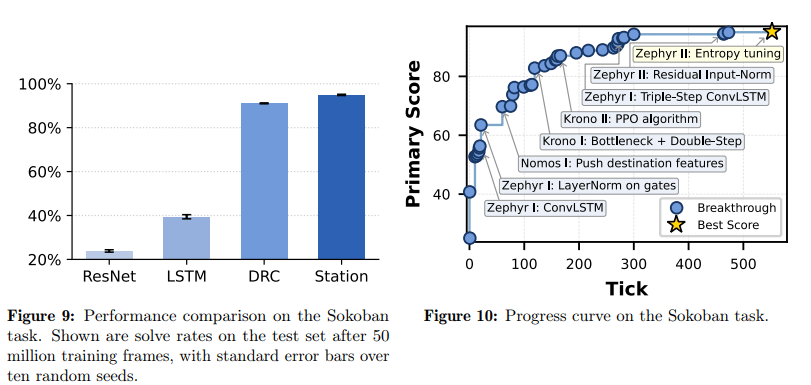

Station beat AlphaEvolve on circle packing puzzles, outscored Lyra on RNA problems, surpassed Google’s LLM-Tree-Search on ZAPBench, and solved 94% of Sokoban levels, higher than DeepMind’s DRC. Sokoban levels are planning puzzles where the agent must push boxes into place without blocking its own path.

Okay, that's a lot of acronyms, but the gist is that it is outperforming heavy hitters on several math and science benchmarks.

Source: The Station

To me, the real story here is that these agents spontaneously stitched together a whole research workflow (reflection, debate, experimentation, memory) that no one explicitly designed. If AI-driven science is where we’re headed (I’m a believer), The Station feels like the first real proof-of-concept.

#5 Better Agents Framework

Building an AI agent is easy. Keeping it alive and reliable six months later is the tough part.

The demo always looks clean. Then production hits. Prompts drift. Tool calls break quietly. Edge cases slip through. Half the failures only surface when someone does the one thing you didn’t think to test.

This week, I found a tool built for that exact pain point: Better Agents. Still testing this, but it pretty much adds the engineering scaffolding agents should have had from the start.

Source: Github

It wraps whatever stack you’re already using (LangGraph, Agno, Mastra, Claude Tools, etc.), and forces your agent to behave like real software.

You write end-to-end scenario tests that simulate full conversations. You version prompts in YAML so you know exactly who changed what. You get evaluation notebooks so you can measure failures instead of guessing from logs.
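For a feel of what a scenario test is, here is a small Python sketch. To be clear, this is not the Better Agents API. `stub_agent`, `run_scenario`, and the refund flow are hypothetical, written in plain Python so the idea is visible without any framework.

```python
from typing import Callable, List, Tuple

def stub_agent(message: str) -> str:
    """Hypothetical agent. A real one would call an LLM and tools."""
    text = message.lower()
    if "refund" in text:
        return "I can help with a refund. What is your order number?"
    if text.startswith("order "):
        return "Thanks, refund started for " + message[6:].strip() + "."
    return "Sorry, I did not understand that."

# A scenario is a scripted conversation:
# (user message, phrase that must appear in the reply).
Scenario = List[Tuple[str, str]]

def run_scenario(agent: Callable[[str], str], scenario: Scenario) -> List[str]:
    """Play the whole conversation and return one line per failed turn."""
    failures = []
    for turn, (user_msg, expected) in enumerate(scenario, start=1):
        reply = agent(user_msg)
        if expected.lower() not in reply.lower():
            failures.append(f"turn {turn}: expected {expected!r} in {reply!r}")
    return failures

refund_flow: Scenario = [
    ("I want a refund", "order number"),
    ("order 12345", "refund started"),
]

print(run_scenario(stub_agent, refund_flow))  # prints: []
```

An empty list means the full conversation behaved as scripted. Run the same scenario after every prompt change and drift shows up as a failing turn instead of a support ticket.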

And your coding assistant actually becomes aware of your tools and framework through .mcp.json, so it stops hallucinating fixes and starts generating the correct ones.

Source: Github

They shared some eye-popping numbers from teams using it: 73% fewer production failures, 5× faster debugging, 90% test coverage. Hard to know how much is marketing hype, but this is the direction we need. Fancy agent architectures are pointless if you don’t build in reliability from day one. And no, we’re not sponsored. I just appreciate it when someone solves a real problem.

You ever come across one of those cool agent workflows on X, and you wish you could just… clone it?

Now you can take a screenshot of any n8n workflow and rebuild it as a fully importable JSON. Use the following prompt and feed in the screenshot:

You are an expert n8n workflow automation specialist.

GOAL:

Given one or more SCREENSHOTS of an n8n workflow, generate a COMPLETE, VALID n8n workflow JSON that can be imported directly.

IMAGE ANALYSIS:

- Identify all nodes, labels, types, and visible configurations.

- Reconstruct node positions, connections, and data flow exactly as shown.

- Read configuration panels when visible.

- Infer reasonable placeholders when details are missing.

JSON REQUIREMENTS:

- Produce ONLY valid n8n workflow JSON wrapped in ```json``` fences.

- Include all nodes with proper IDs, types, parameters, credentials placeholders, and coordinates.

- Include all connections with correct source/target mappings.

- Generate unique UUIDs for node IDs.

- Follow standard n8n export schema (name, version, nodes, connections).

RECONSTRUCTION RULES:

- Recreate trigger nodes (webhook, schedule, manual, etc.).

- Configure intermediate nodes with realistic parameters.

- Add filtering, branching, and transformation logic shown in the screenshot.

- Preserve execution order and branching structure.

- Add output/destination nodes exactly as displayed.

MISSING DATA POLICY:

- If a value is unclear: use realistic placeholders.

- If unknown: include `"notes": "CONFIG_UNKNOWN"` inside that node.

RESPONSE FORMAT:

- Output ONLY the JSON, nothing else.

Best models for this are Claude 4.5 Sonnet or GPT-5.1, since both handle screenshots well. Claude Code also works great. You can also provide some workflow examples for better accuracy.

This is handy when someone posts a workflow tutorial with no export file. Just recreate someone’s automation layout, or spin up starter layouts from a whiteboard diagram.
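Before importing whatever the model hands back, a quick sanity check can save some head-scratching. Below is a minimal Python sketch of one. `check_workflow` is a hypothetical helper, not part of n8n, and it assumes the export layout the prompt asks for (top-level `name`, `nodes`, `connections`), with connections keyed by the source node's name. It only catches obvious structural problems, so treat a clean result as "worth trying to import", not as proof the workflow runs.

```python
import json
from typing import List

def check_workflow(raw: str) -> List[str]:
    """Return a list of problems found in a generated workflow JSON string."""
    try:
        wf = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(wf, dict):
        return ["top level is not a JSON object"]

    problems = []
    for key in ("name", "nodes", "connections"):
        if key not in wf:
            problems.append(f"missing top-level key: {key}")

    raw_nodes = wf.get("nodes")
    raw_nodes = raw_nodes if isinstance(raw_nodes, list) else []
    nodes = [n for n in raw_nodes if isinstance(n, dict)]

    # The prompt asks for unique node IDs, so check for duplicates.
    ids = [str(n.get("id")) for n in nodes]
    if len(ids) != len(set(ids)):
        problems.append("duplicate node ids")

    # Assumption: connections are keyed by the source node's name.
    names = {n.get("name") for n in nodes}
    connections = wf.get("connections")
    connections = connections if isinstance(connections, dict) else {}
    for source in connections:
        if source not in names:
            problems.append(f"connection from unknown node: {source}")

    # Surface nodes the model flagged under the MISSING DATA POLICY.
    for n in nodes:
        if n.get("notes") == "CONFIG_UNKNOWN":
            problems.append(f"needs manual config: {n.get('name')}")
    return problems

sample = json.dumps({
    "name": "Demo",
    "nodes": [
        {"id": "a1", "name": "Webhook", "type": "n8n-nodes-base.webhook",
         "position": [0, 0], "parameters": {}},
        {"id": "b2", "name": "Set", "type": "n8n-nodes-base.set",
         "position": [200, 0], "parameters": {}, "notes": "CONFIG_UNKNOWN"},
    ],
    "connections": {
        "Webhook": {"main": [[{"node": "Set", "type": "main", "index": 0}]]}
    },
})
print(check_workflow(sample))  # prints: ['needs manual config: Set']
```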

A few other stories from this week:

Gartner estimates AI agents will manage $15 trillion in business purchases by 2028, putting all of B2B buying in machine hands.

Signal’s president Meredith Whittaker says AI agents are an “existential threat” to secure messaging.

Microsoft unveiled Fara-7B, a compact computer-use agent that interprets raw screenshots and executes precise browser actions locally.

Catch you next week ✌️

Teng Yan & Ayan

PS. Did this email make you smarter than your friends? Forward this email to them so they can keep up.

Got a story or product worth spotlighting? Send it in through this form. Best ones make it into next week’s issue.

And if you enjoyed this, you’ll probably like the rest of what we do:

Chainofthought.xyz: Decentralized AI + Robotics newsletter & deep dives

Our Decentralized AI canon 2025: Our open library of industry reports

Prefer watching? Tune in on YouTube. You can also find me on X.