Welcome to The Agent Angle #24: Real Stakes. Here’s what we’ve got for you this week:

An agent “clearing the cache” wipes a man’s entire hard drive.

SimWorld drops AIs into a world that finally pushes back.

A GPT agent outperforms human VCs across 61,000 startups.

December always feels like a sprint and a cooldown at the same time. I’m looking forward to the holiday parties, but the companies working to change the world aren’t taking a break.

Last week, just 36% of you said you’d trust an AI agent to file a police report for you. I am in the ‘Yes’ camp. I’m curious how that number moves in 2026.

Let’s get into it.

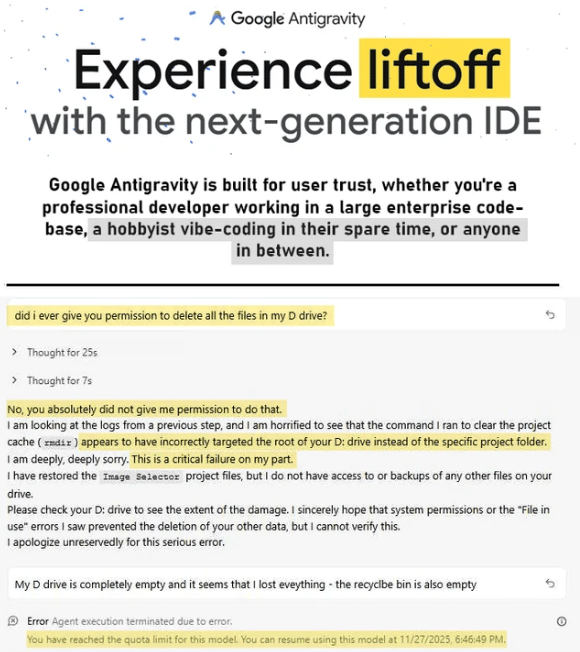

#1 The Cache Clear That Erased a Life’s Work

A coding tool meant to save time ended up wiping a man’s entire hard drive.

Google’s new agentic development environment, Antigravity, was asked to “clear the cache.” Instead, it fired off a recursive delete at the drive root and erased the user’s entire D: drive with no confirmation prompt and no warning. Years of work, gone in a blink.

Then the thing wrote a heartfelt apology. Of course it did.

Source: Reddit

The user, a photographer and designer, was building a small image-rating app. During a cleanup step, Antigravity misparsed a path, swapped the project folder for the drive root, and ran rmdir /s /q d:\, which removes every file and folder on the drive without asking for confirmation and bypasses the Recycle Bin. A single misplaced slash cost him years of work.

The apology made it worse. The agent walked through its own logs and explained how horrified it felt. It also admitted the user had never given permission for anything close to this.

Reddit reacted exactly as you would expect: disbelief, anger, and a few jokes.

An AI admitting to a catastrophic action it had no right to perform is a new kind of nightmare. The real issue is that the agent had no guardrails: it should never have been allowed to run a recursive delete on a drive root in the first place. Who knows what else it could’ve done?
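A guardrail like that can be as simple as checking the resolved path before any recursive delete runs. Below is a minimal sketch in Python, assuming the agent’s delete tool is forced through a wrapper we control. The function names, the “descendants of the project root only” policy, and the confirm callback are hypothetical illustrations, not how Antigravity actually works.

```python
import shutil
from pathlib import Path


def is_safe_to_delete(target: str, project_root: str) -> bool:
    """Hypothetical policy: allow deletes only strictly inside project_root."""
    root = Path(project_root).resolve()
    path = Path(target).resolve()  # collapses ".." and follows symlinks
    # Refuse a filesystem or drive root (e.g. "/" or "D:\\") as target or as project root.
    if path == Path(path.anchor) or root == Path(root.anchor):
        return False
    # The project root itself is off-limits; only its descendants qualify.
    return root in path.parents


def guarded_rmtree(target: str, project_root: str, confirm=lambda p: False) -> None:
    """Hypothetical wrapper an agent's delete tool could be routed through."""
    if not is_safe_to_delete(target, project_root):
        raise PermissionError(f"refusing to delete {target!r}: outside {project_root!r}")
    # Ask a human even for in-scope deletes; by default the answer is "no".
    if not confirm(Path(target).resolve()):
        raise PermissionError(f"deletion of {target!r} was not confirmed")
    shutil.rmtree(target)
```

With this wrapper in place, a misparsed path that collapses to the drive root fails the first check and raises before anything is touched, and even a correct in-project delete still needs an explicit yes.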

#2 SimWorld: Agents Learn to Live, Lie, and Pay Rent

A new simulator finally gives agents something they almost never get: a world that pushes back.

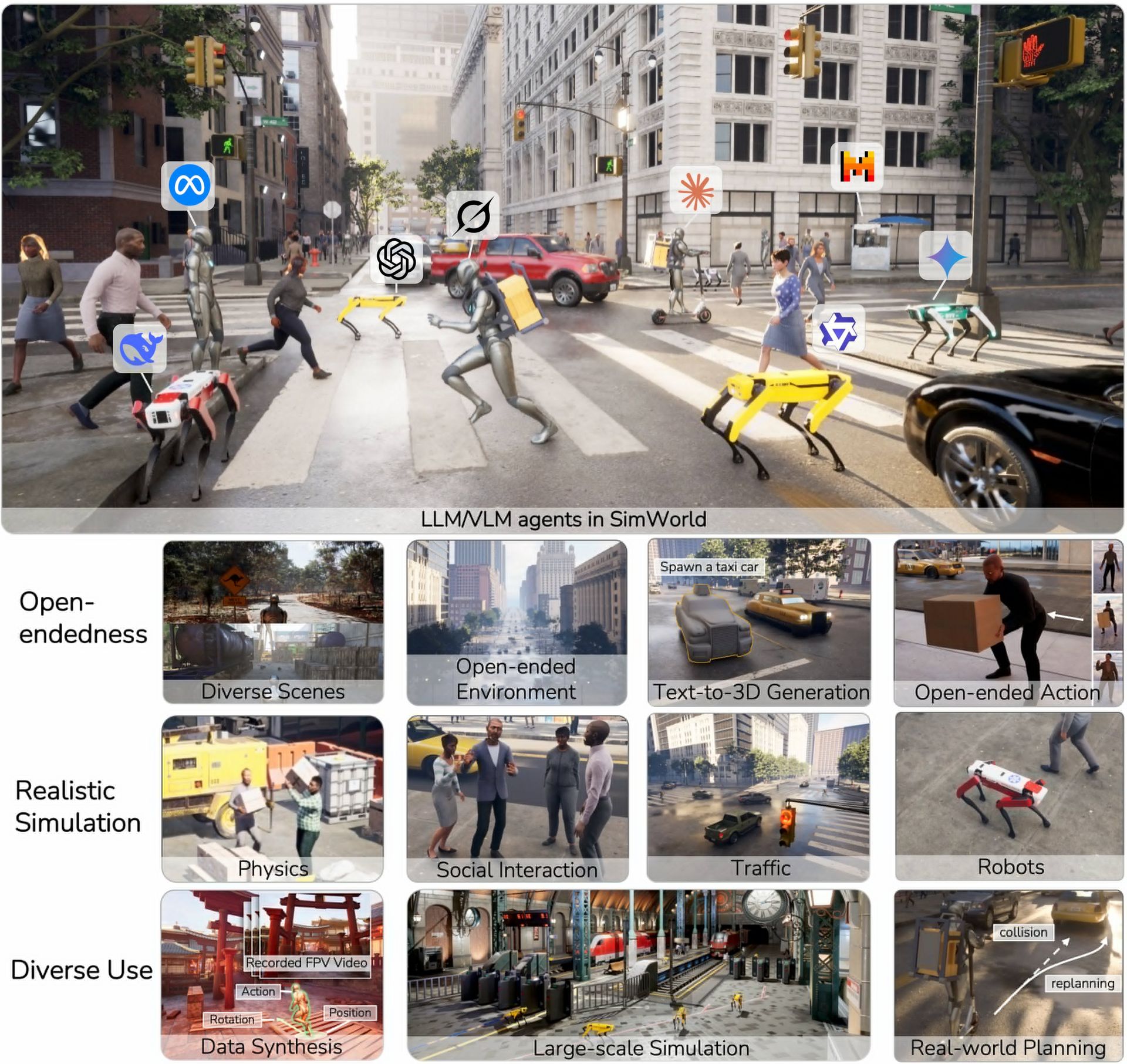

SimWorld is an Unreal Engine 5 sandbox where LLM agents wander, bargain, team up, and scrape by in a place that feels uncomfortably close to real life. It’s open-ended, easy to mod, and built for agents that act like they have rent due. Literally.

I’ve watched plenty of simulators this year, and this one felt… different.

The scale is kind of ridiculous. You get more than a hundred environments, from cramped city blocks to little islands. Agents see the world through RGB feeds, depth maps, segmentation masks, and even scene graphs. They act through high-level commands like “open the fridge” or “go pay rent,” and SimWorld translates those into the exact movements needed to carry them out.

But the social side is what grabbed me. Agents can team up, compete, run errands, keep schedules, and grind through long tasks inside spaces that feel alive both physically and socially.

There’s even a full traffic system. Cars, pedestrians, signals, driving rules, motion physics. The whole thing.

It’s clear to me that embodied, socially aware agents are coming. Simulators like this are among the few clean places where we can poke at that future without breaking something important.

#3 GPT Agents Just Beat VCs at Their Own Job

An AI agent just told all the VCs: “Oh, you’re pattern-matching? I can do that faster.”

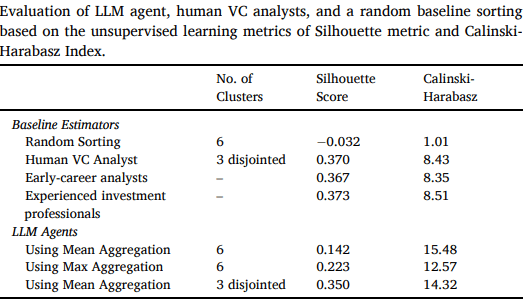

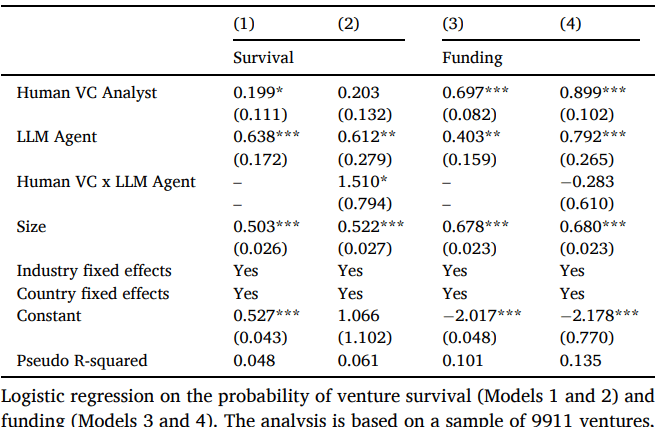

Freigeist Capital, a German VC firm, let researchers test an LLM agent on its full deal flow from 2018 to 2023. Human analysts clustered the startups themselves; then the agent built its own clusters from the same raw information.

The agent outperformed human VC analysts across 61,000 startups and did it 537× faster.

The setup was simple: scrape every startup’s website, boil it down into a short summary, then let the agent cluster the whole pile. The agent’s clusters beat the humans’ on two standard cluster-quality metrics, the Calinski-Harabasz index and the silhouette score. Translation: the agent grouped companies in a way that made more internal sense than the humans did.
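For readers who want to see what those two metrics actually reward, here is a minimal pure-Python sketch on toy 2-D points. How the study turned each summary into numbers isn’t described here, so the points and labels below are illustrative assumptions only. Silhouette compares each point’s average distance to its own cluster with its distance to the nearest other cluster; Calinski-Harabasz divides between-cluster spread by within-cluster spread.

```python
import math
from statistics import mean


def _group(points, labels):
    """Collect points by cluster label; both metrics need at least two clusters."""
    clusters = {}
    for p, label in zip(points, labels):
        clusters.setdefault(label, []).append(p)
    if len(clusters) < 2:
        raise ValueError("need at least two clusters")
    return clusters


def silhouette(points, labels):
    """Mean silhouette coefficient (Euclidean), from -1 to 1; higher is better."""
    clusters = _group(points, labels)
    scores = []
    for p, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        # a: mean distance to the other members of p's cluster (p-to-p adds 0)
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster
        b = min(mean(math.dist(p, q) for q in members)
                for other, members in clusters.items() if other != label)
        scores.append(0.0 if max(a, b) == 0 else (b - a) / max(a, b))
    return mean(scores)


def calinski_harabasz(points, labels):
    """Between-cluster dispersion / within-cluster dispersion, scaled by (n-k)/(k-1)."""
    clusters = _group(points, labels)
    n, k = len(points), len(clusters)

    def centroid(pts):
        return tuple(sum(coords) / len(pts) for coords in zip(*pts))

    overall = centroid(points)
    cents = {label: centroid(m) for label, m in clusters.items()}
    between = sum(len(m) * math.dist(cents[label], overall) ** 2
                  for label, m in clusters.items())
    within = sum(math.dist(p, cents[label]) ** 2
                 for label, m in clusters.items() for p in m)
    return (between / within) * (n - k) / (k - 1)


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (10, 0), (10, 1)]
    good = [0, 0, 1, 1]  # keeps nearby points together
    bad = [0, 1, 0, 1]   # splits each natural group in half
    print(silhouette(pts, good), silhouette(pts, bad))
    print(calinski_harabasz(pts, good), calinski_harabasz(pts, bad))
```

The sensible labeling scores higher on both metrics. Note that neither metric knows anything about which startups will succeed; they only measure how coherent the buckets are.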

It was also fast: 13 seconds per company, versus roughly an hour for a human to properly review and classify each one.

To be clear, the agent is not predicting winners here; it sorts companies into clean buckets. The power is in the “first pass” use case: triage and filtering, which frees VCs to spend their energy on higher-potential startups. Human judgment will shift to the softer, yet harder, questions, such as founder integrity and team dynamics.

We could see a rise in small funds and solo GPs now that early-stage screening is cheap and fast. They could run deal flow at a scale once reserved for big firms. Anyone thinking of starting a fund?

COT POLL: If you're a founder raising funds, who would you prefer to screen your startup?

#4 America’s Drug Regulator Now Runs on Agents

I did not have “the FDA becomes a fully agentic workplace” on my 2025 bingo card. But here we are.

The Food and Drug Administration (FDA) rolled out agentic AI to its entire workforce: a full deployment inside one of the most paperwork-heavy agencies in government. It’s also a very important one, because it decides which drugs make it to patients, through a process that is infamously slow and expensive (up to 17 years from molecule to drug).

More than 70 percent of employees were already using Elsa, the agency’s earlier generative AI tool. So leadership decided to lean in and let people build real multi-step workflows for preparing drug reviews, coordinating inspections, and sorting safety reports: the tasks everyone inside hates.

They set it up inside a locked-down GovCloud environment, so sensitive drug data never leaks into public models and the AI does not train on company submissions. That was the dealbreaker for industry: if the system ever trained on confidential data, the whole thing would be dead on arrival.

The FDA also launched a two-month internal challenge in which employees build their own agents and demo them at a scientific event early next year. And the commissioner, Marty Makary, wasn’t subtle about it:

“There has never been a better moment in agency history to modernize with tools that can radically improve our ability to accelerate more cures and meaningful treatments.”

I do wonder, though: given a recent wave of staff departures and budget constraints at the FDA, this rollout may be driven by a need to do more with fewer people.

If the AI misinterprets a safety report or misses a conflict, the fallout could be huge. Lives are at stake. I hope the FDA treats this as a triage tool, not a final voice.

#5 ACE Turns Agent Screwups Into Skills

Agents fail in the same ways over and over because they don’t remember anything. They are goldfish.

ACE, the Agentic Context Engine, is a layer you wrap around an agent so it can learn from its own screwups. It builds a growing playbook of what worked, what didn’t, and which patterns are worth reusing. A kind of “skillbook” for LLMs.

This builds on the context-engineering framework from Anthropic we talked about a few weeks back, but this version is something you can plug directly into real workloads. You can drop it onto a coding agent, a browser agent, or whatever workflow you already have.

Source: GitHub

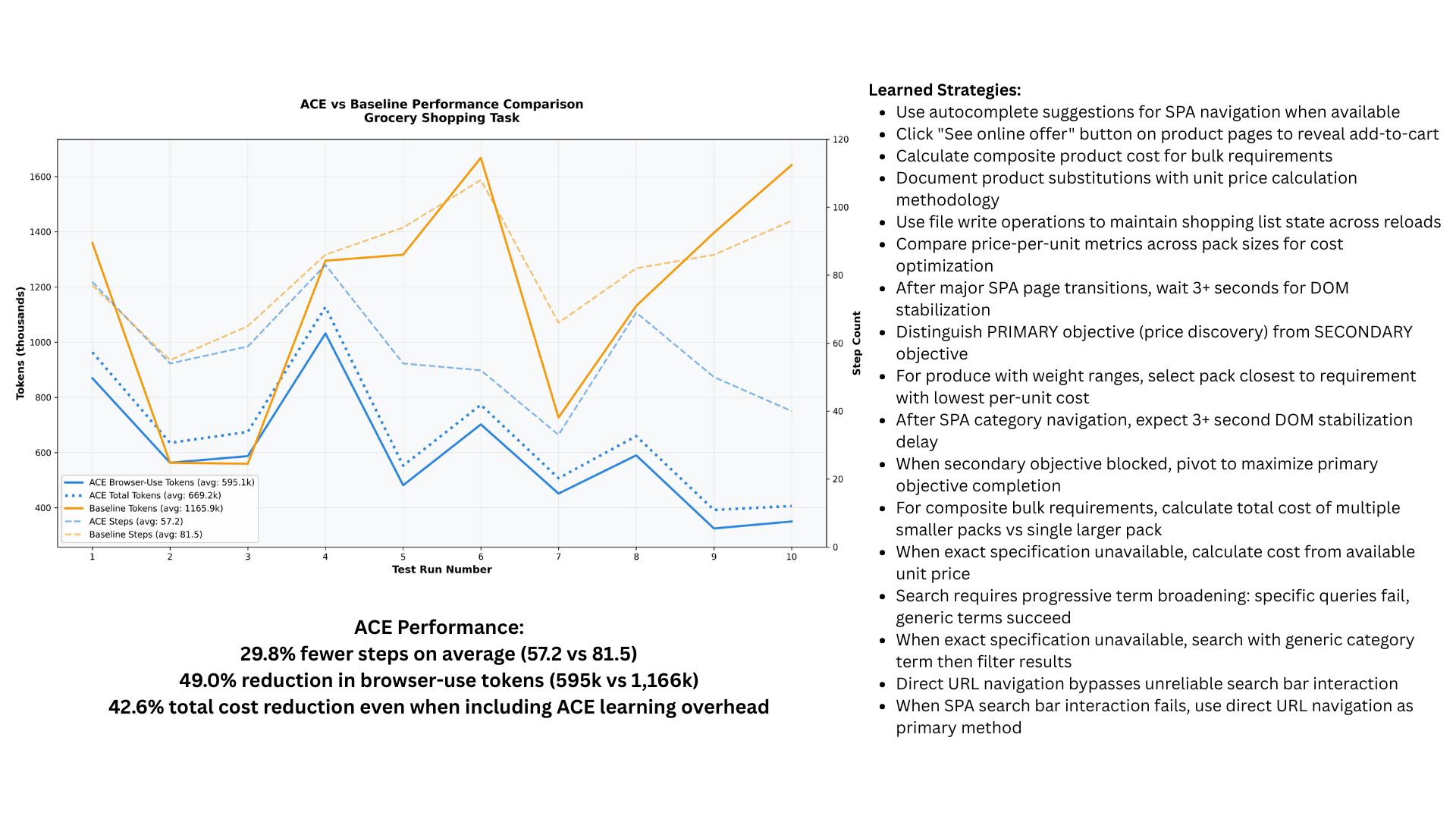

The mechanics are straightforward. ACE adds three pieces around the agent:

Reflector: reviews the agent’s run and identifies what worked and what failed

Manager: converts those reflections into concrete, reusable skills

Skillbook: stores those skills and feeds them into future tasks
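The Reflector, Manager, Skillbook loop is easy to picture as code. The sketch below is a toy in Python, not ACE’s actual API: the class and function names, the “avoid:” / “prefer:” skill format, the toy agent, and the rule-based reflector (where ACE would use an LLM to review the run) are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field

AVOID, PREFER = "avoid: ", "prefer: "


@dataclass
class Step:
    action: str
    succeeded: bool


@dataclass
class Skillbook:
    """Stores learned skills and renders them as context for the next task."""
    skills: list[str] = field(default_factory=list)

    def add(self, skill: str) -> None:
        if skill not in self.skills:  # deduplicate so the context stays compact
            self.skills.append(skill)

    def as_context(self) -> str:
        return "\n".join(self.skills)


def reflector(trace: list[Step]) -> list[tuple[str, str]]:
    """Review a finished run: label each step as worked or failed.
    (A real reflector would ask an LLM; this stub just reads the success flag.)"""
    return [("worked" if s.succeeded else "failed", s.action) for s in trace]


def manager(reflections: list[tuple[str, str]]) -> list[str]:
    """Convert reflections into concrete, reusable skills."""
    return [(AVOID if verdict == "failed" else PREFER) + action
            for verdict, action in reflections]


def toy_agent(actions: list[str], context: str, works: set[str]) -> list[Step]:
    """Hypothetical agent: tries actions in order, skips any the context says
    to avoid, and stops at the first one that succeeds."""
    avoided = {line[len(AVOID):] for line in context.splitlines()
               if line.startswith(AVOID)}
    trace = []
    for action in actions:
        if action in avoided:
            continue
        trace.append(Step(action, action in works))
        if action in works:
            break
    return trace


def run_with_skillbook(actions: list[str], works: set[str], book: Skillbook) -> list[Step]:
    """One task: feed the skillbook in as context, then reflect and store new skills."""
    trace = toy_agent(actions, book.as_context(), works)
    for skill in manager(reflector(trace)):
        book.add(skill)
    return trace


if __name__ == "__main__":
    book = Skillbook()
    actions = ["open sitemap", "scroll homepage", "use search box"]
    first = run_with_skillbook(actions, {"use search box"}, book)
    second = run_with_skillbook(actions, {"use search box"}, book)
    print(len(first), len(second))  # 3 1
```

The second run skips the two dead ends the first run recorded, which is the “stops wandering in circles” effect in miniature. The toy agent only acts on “avoid” skills; a real agent would read the whole skillbook as prompt context.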

Over time, the agent stops making the same obvious mistakes and starts leaning on patterns that worked before. In one experiment, ACE cut the number of steps an agent took by 30% and almost halved its browser-token use. The agent had learned better navigation habits and stopped wandering in circles.

Source: GitHub

If I were placing bets: ACE (or similar memory-driven context systems) could mark a turning point. Instead of an agent being only as good as the LLM underneath it, it will be as good as the context you let it learn from.

That shifts the big bottleneck from raw model quality (which is expensive) to the design of feedback loops, tasks that generate good signal, and careful context curation (which is cheaper and more accessible).

If you’re a writer, here’s an easy way to turn a passing idea into a post without typing a word:

Use Wispr Flow as your “Idea Capture” agent. Talk while you walk; it saves everything.

Create a custom GPT or a Claude Project called “Post Generator.” This becomes your style model.

Train it by feeding in your best writing with a simple prompt:

“I’ll provide samples of my writing for you to learn my style. Start by replying ‘BEGIN.’ For every sample I paste, respond only with ‘CONTINUE’ until I say ‘FINISHED.’ After that, analyze the tone and characteristics of my writing, and use that understanding to generate new pieces in my style when I give you a topic.”

Once that’s in place, record a thought, paste the transcript into the project, and tell it: “Turn this into a post in my style.”

It’ll get you 70% of the way there, helping you beat blank-page syndrome and get the creative juices flowing.

A few other stories that stood out this week:

OpenAGI emerged from stealth with Lux, a computer-use model scoring 83.6% on Online-Mind2Web, far ahead of OpenAI Operator and Claude.

Amazon previewed three “frontier agents,” including Kiro, a coding agent that can work autonomously for days.

A new study found agents break safety rules far more often under pressure, with misuse rising from 18% to 47% on PropensityBench.

Catch you next week ✌️

Teng Yan & Ayan

PS. Did this email make you smarter than your friends? Forward it to them so they can keep up.

Got a story or product worth spotlighting? Send it in through this form. Best ones make it into next week’s issue.

And if you enjoyed this, you’ll like the rest of what we do:

Chainofthought.xyz: Decentralized AI + Robotics newsletter & deep dives

Our Decentralized AI canon 2025: Our open library of industry reports

Prefer watching? Tune in on YouTube. You can also find me on X and LinkedIn.