Hey friends 👋

Welcome to issue #3 of The Agent Angle. This week saw a surge in AI agent product launches. There were so many that it was hard to choose, but we sorted through them and picked the five that actually matter.

What still surprises us is how fast LLMs and AI tools are spreading, even without the built-in virality of social networks or games. They're simply delivering raw, undeniable value. That's how you know things are real.

Let’s get into it.

BTW: We’re looking for a sharp, curious researcher to join our team. Think you’ve got what it takes? Apply here.

#1: String—Drag-and-drop is Dead, Long Live Prompting

As a non-tech person, the first time I tried to use n8n to build a workflow, I almost cried out of frustration. Connectors, nodes, logic gates: not exactly the most intuitive platform out there. It kinda felt like setting up a Zapier flow blindfolded.

n8n’s still the default pick for no-code automation, but it’s starting to show its age. The drag-and-drop interface, a relic from the early no-code days, might actually cost it the top spot in the next couple of years.

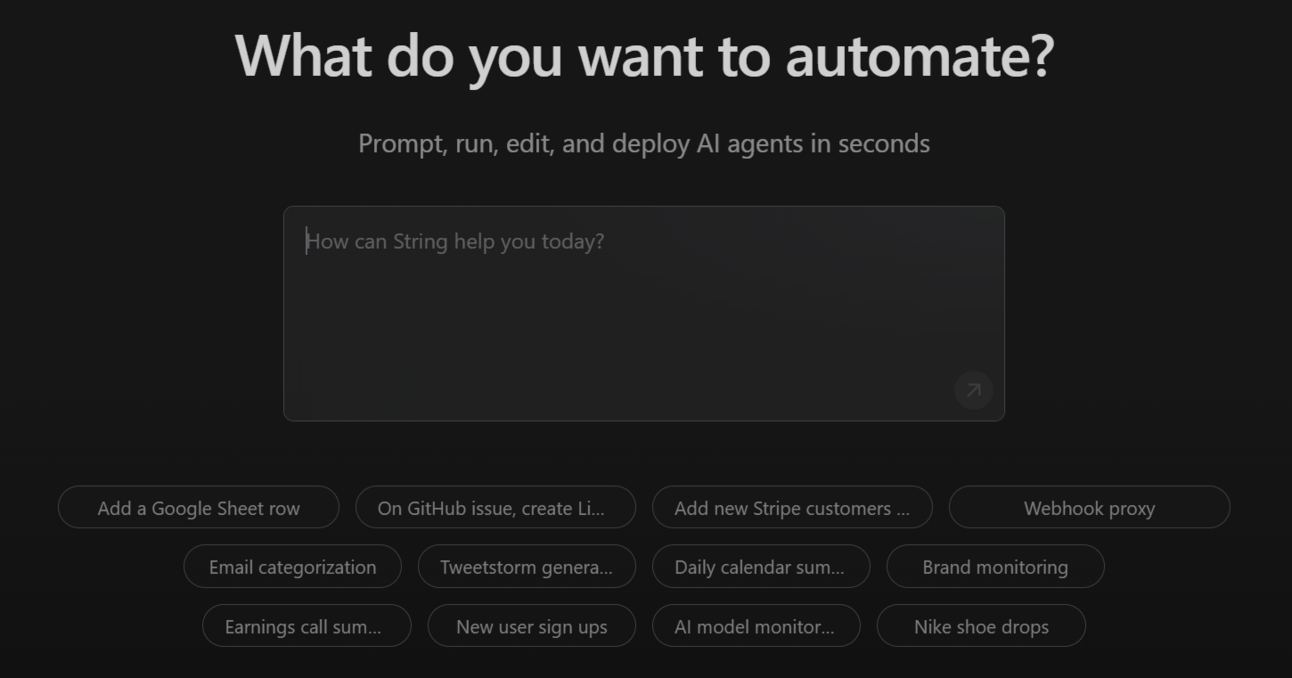

This is where String.com comes in. Backed by Pipedream, String flips the UX model: you don't build workflows, you describe them. It's a natural-language agent builder that turns your prompt into an automated agent.

Want something that reviews sales calls and posts summaries to Slack? Just say so. Need LinkedIn rewrites from blog posts? Ask. String parses the request, maps out a plan, tests it in a sandbox, and if it hits a snag (and it will, it’s still in alpha), it tries to recover automatically.
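For the curious, here's roughly what a plan, sandbox-test, recover loop like the one described above looks like. To be clear, this is not String's code: `plan_steps`, `run_in_sandbox`, `repair`, and `build_agent` are hypothetical stand-ins we made up, with toy logic in place of the LLM calls a real builder would make.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    ok: bool
    error: str = ""


def plan_steps(request: str) -> list[str]:
    # Hypothetical planner: a real builder would ask an LLM to break the
    # request into steps. Here we just split on " and ".
    return [part.strip() for part in request.split(" and ") if part.strip()]


def run_in_sandbox(steps: list[str]) -> RunResult:
    # Hypothetical sandbox: any step that posts to Slack without naming a
    # channel fails, simulating a recoverable snag.
    for step in steps:
        if "slack" in step.lower() and "#" not in step:
            return RunResult(ok=False, error=f"no channel for: {step}")
    return RunResult(ok=True)


def repair(steps: list[str], error: str) -> list[str]:
    # Hypothetical recovery: patch the failing step with a default channel.
    failing = error.split(": ", 1)[1]
    return [s + " in #general" if s == failing else s for s in steps]


def build_agent(request: str, max_attempts: int = 3) -> tuple[list[str], int]:
    """Plan, test in a sandbox, and try to self-repair up to max_attempts times."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    steps = plan_steps(request)
    for attempt in range(1, max_attempts + 1):
        result = run_in_sandbox(steps)
        if result.ok:
            return steps, attempt
        steps = repair(steps, result.error)
    raise RuntimeError(f"gave up after {max_attempts} attempts: {result.error}")
```

Calling `build_agent("review sales calls and post summaries to Slack")` fails the first sandbox run (no channel), patches the step, and succeeds on attempt 2. The retry cap matters: with success rates around 50–75%, an agent builder needs a point where it stops and asks you instead of burning tokens.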

We tried it ourselves: 5 minutes and 1M tokens later, we had an email triage agent labeling inbound messages and drafting replies. The UI is clean. The whole flow feels light-years easier than legacy tools.

It’s not perfect. The current token cap is 4M (too low for complex agents). And success rates still hover between 50–75%. But even in alpha, it’s a smoother ride than anything else we’ve tested in the category.

Prompt-to-workflow is the next natural UX shift. Just like coding moved from typing to prompting, automation is heading the same way. Language is the interface now, and tools like String are proving that’s exactly where agents belong.

#2: Character Drift Is Killing GenAI Video. Morphic Might Fix It

One thing GenAI tools consistently screw up? Character consistency. Ask most models to keep the same person looking like, well, themselves across scenes, and the whole illusion collapses. Faces change. Outfits warp. Identity drifts. Suddenly, your serious marketing asset feels like a toy.

But marketers? They care a lot about consistency.

88% already use GenAI in their daily work. 92% of companies plan to invest more over the next three years. And yet, character drift is still a big blocker, especially for enterprise teams trying to build polished campaigns at scale.

Which brings us to this week’s sleeper hit: Morphic.

Morphic is a full image-to-video pipeline that gives you Pixar-level ambition with AI-powered tooling. Think: frame-by-frame generation, animation, transitions, and yes, character consistency.

The newest weapon in their arsenal?

One-Shot Character Models. Upload a single image (or just write a prompt), and Morphic trains a model that locks your character’s identity across angles, scenes, and styles. The training takes ~15 minutes. After that, you’re free to build out entire stories without identity glitches.

But the real MVP is Morphic Copilot, a creative assistant that helps brainstorm scenes, tweak moods, and polish visuals. It’s not a director, but it’s a damn good intern. Quiet, fast, surprisingly useful.

We gave this simple prompt as a test: “The story is about Tom, an AI researcher who loves studying while listening to music in his studio during quiet nights. We're aiming for a peaceful, relaxing vibe.”

It sent us back a full story outline (Tom's Midnight Studies) in a matter of seconds, generated three images, and animated one of them into a video.

Here’s a community highlights reel if you want to see what others have built.

Bottom line: Morphic is a step toward true AI-powered media production. Think serialized content, branded stories, and dynamic campaigns.

#3: HeyGen Wants to Be the Creative OS for Video Everything

Short-form video ate the internet. No surprise there. 90% of people watch short-form videos daily, and retention rates can hit 50%. Our collective attention span is now officially shorter than a goldfish's (8.25 seconds vs. 9).

Meanwhile, digital ad spend just hit $800 billion globally in 2024. So yeah, everyone’s trying to win the short-form game.

The catch? Making good content still sucks. It’s slow, expensive, and usually locked behind editors, agencies, or freelancers. Even with AI tools popping up left and right, solo creators and small teams are still at a disadvantage.

Enter HeyGen.

You’ve probably seen their lip-syncing avatars floating around LinkedIn and startup demo videos. They’ve become the unofficial go-to for talking-head explainers without, well, any real heads involved.

Now they’re aiming much, much bigger.

HeyGen just announced its upcoming Video Agent, calling it the “world’s first creative OS.” Bold claim. But the demo was pretty compelling.

Just toss in a prompt, a PDF, or some raw footage. HeyGen’s Video Agent takes it from there:

Parses your input to find the story arc

Writes the script

Picks or generates visuals with Avatar 4

Edits timing, motion, and captions

Delivers a ready-to-post video in minutes

It’s giving full-stack video production, without timelines, drag-and-drop, or hours of editing. Just input → output.

A 30-second ad that used to take a week? Now it’s five minutes, give or take a latte break. That’s the kind of GenAI utility we’ve been waiting for. Short-form video is a goldmine. Creators just needed better tools.

With the video stack collapsing into a single prompt, scrappy creators finally have a shot at taking on the big dogs. Budget doesn’t win anymore. Execution does.

P.S. Wanna test it? Here’s the waitlist.

#4: Chai-2 Goes From Mice to Models

We’ve always said it: science is where AI has the biggest shot at truly moving the needle for humanity. And this week, we got a sharp glimpse of that future.

Antibody discovery has always been slow, expensive, and brutally inefficient. The usual methods, like immunizing animals or screening synthetic libraries, are a total grind. You’re talking millions of samples, months of lab work, and hit rates that barely scrape 0.1%. It’s like trying to mine gold with a teaspoon.

Chai Discovery (yes, the OpenAI-backed one) just dropped Chai‑2, an AI agent that designs novel antibodies from scratch. You give it a target epitope, it spits out potential antibodies. Fast.

In blind tests across 52 previously undrugged targets, Chai‑2 generated fewer than 20 antibody designs per target. For half of those targets, it produced a successful binder on the first try. In some formats, hit rates broke 20%, more than a 100x boost over the ~0.1% of traditional methods.

The full cycle from prompt to wet lab took under two weeks. One case replaced an estimated $5 million in R&D spend…with a few prompts. Chai‑2 could be the first genuinely creative antibody designer to reach production.

Less “torture the mouse,”…and more “feed the model.”

You’ll still need a lab, a validation pipeline, and some hands-on wet work. But Chai‑2 feels like a prototype for agent-native biotech. The future is probably not about building bigger labs. It’ll hinge on scaling better ideas and designing smarter agents to execute them.

#5: Truely—Do we deserve honesty?

Remember Cluely? That startup launched by a 21-year-old Columbia dropout promising you’ll “never have to think again”? Its real-time AI agent helps you cheat on everything: interviews, meetings, exams, life.

Now for the rebuttal.

Some Columbia students (yes, these ones are still in school) recently built Truely, an anti-Cluely agent designed to catch AI-assisted cheating during live interactions.

Truely monitors meetings and sends alerts every 10 seconds when it detects suspicious processes, even if those processes started before the call began. The alerts are fully customizable, and it can shut down the meeting automatically if something looks off.
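We haven't dug into how Truely actually detects cheating tools, so here is a hypothetical sketch of the general idea: poll the process list on a fixed interval (10 seconds, per the description above) and flag anything matching a watchlist, checking immediately at startup so processes launched before the call are caught too. The watchlist, `find_suspicious`, and `monitor` are our own illustrative names. A real tool would read the OS process table (for example via a library like psutil); here the process list is injected as a plain function so the sketch stays self-contained.

```python
import time
from typing import Callable, Iterable, List, Optional

# Hypothetical watchlist; a real tool would maintain its own signatures.
SUSPICIOUS_NAMES = {"cluely", "overlay-assistant"}


def find_suspicious(process_names: Iterable[str], watchlist=SUSPICIOUS_NAMES) -> List[str]:
    """Return process names containing a watchlist entry (case-insensitive)."""
    hits = []
    for name in process_names:
        lowered = name.lower()
        if any(sig in lowered for sig in watchlist):
            hits.append(name)
    return hits


def monitor(list_processes: Callable[[], List[str]],
            alert: Callable[[List[str]], None],
            interval_s: float = 10.0,
            max_checks: Optional[int] = None,
            sleep: Callable[[float], None] = time.sleep) -> int:
    """Check the process list every interval_s seconds and alert on matches.

    The first check runs right away, so anything already running when the
    call starts is flagged. Runs forever unless max_checks is set; returns
    the number of checks that triggered an alert.
    """
    alerts = 0
    checks = 0
    while True:
        hits = find_suspicious(list_processes())
        if hits:
            alert(hits)
            alerts += 1
        checks += 1
        if max_checks is not None and checks >= max_checks:
            return alerts
        sleep(interval_s)
```

Injecting `list_processes`, `alert`, and `sleep` keeps the loop easy to test; an auto-shutdown option like the one described above would just be a different `alert` callback.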

It's still in beta. You can peek at the GitHub repo or sign up for access if you want to try it.

Why are we talking about this?

Because the real story isn't the tech; it's what the tech reveals about the moment we're in.

We’ve entered a world where trust is no longer automatic. AI is in your relationships, your inbox, your FaceTime calls. Quietly. Constantly. Tools like Truely exist because we can’t just assume we’re talking to the real version of someone anymore.

Like it or not, honesty has become something we need to verify.

That’s it for this week. What did we learn?

Generative video is hitting an inflection point. The bottlenecks that used to slow it down are cracking open fast.

Last week it was MAI-DxO; this week it's Chai-2. Agent-powered science is showing up in labs and diagnostics right now.

A few quick hits before we go:

Amazon is teaming up with Anthropic to launch an AI agent marketplace

Goldman Sachs just rolled out its first autonomous AI coder

Google acqui-hired Windsurf for $2.4B to boost its agent development firepower

See you next week. And as always, hit us up with feedback, spicy takes, or just to say hi. Our inboxes are open.

Cheers,

Gioele La Morgia & Teng Yan