Hey fam 👋

It’s been a packed week over here. Recorded a podcast, dropped a new episode with a sharp investor, and tried (key word: tried) to stay on top of the craziness that is AI right now.

Entire business models are getting body-slammed by WhatsApp bots, AI agents are remaking Hollywood classics, and researchers just proved that most of today’s agents are about as secure as a wet paper bag.

Let’s dive in.

P.S. If you can write snarky AI takes and hunt down stories like us, we’re hiring! Apply here now.

#1: The $2K WhatsApp Agent That Fired the Whole Support Team

SubhaghV didn’t release a product. He blew up an entire business model.

On July 28, he unveiled a WhatsApp AI agent that turns Google Docs into a fully-loaded customer service desk.

This agent is designed for businesses that are sick of paying support salaries. Gyms, clinics, e-commerce shops. His price: $2,000 upfront, $500 per month. It plugs directly into WhatsApp’s API, chats in natural language, and never sleeps. No humans needed.

It even knows when to escalate a Karen-tier meltdown.

The internet noticed. The demo went viral within hours, and people were begging for their own copy. The whole thing is stitched together using n8n, an open-source automation platform that’s become a go-to for AI workflows.

This has implications beyond just a cool viral story. It’s guerrilla automation for the masses.

WhatsApp handles over 100 billion messages daily. In places where WhatsApp is the internet, like India, Brazil, and Southeast Asia, this kind of agent could let street vendors automate inventory, handle payments, and run their business straight from chat.

A 24/7 AI agent that cuts response times from hours to seconds is a cheat code for growth and happy customers.

Big picture: WhatsApp is evolving into a full business OS. Once agents manage customer chats, automating backend workflows like CRM, inventory, and scheduling becomes the obvious next step.

In much of the world, this will feel like business as usual. Only faster.

#2: The World’s 1st Large Visual Memory Model

We used to think AI’s strongest suit was text. Now the smartest agents are rewriting whole movies, without forgetting a single frame.

I’ve been tracking Memories.ai for a while, and what they just showed off is…honestly kind of insane. They’re calling it the world’s first Large Visual Memory Model (LVMM). This system gives AI a human-like memory for video, letting it track visual context across hours (or even days) of footage without dropping the thread. No more one-hour context crashes. The AI remembers everything.

On August 1, co-founder Shawn Shen posted a demo that turned John Wick into a slick 2055 cyberpunk reboot. It hit 90K views in under 24 hours.

The pipeline: Memories.ai extracts the original film’s storyboard and script → ChatGPT rewrites it → Veo3 generates thousands of clips → Memories.ai’s agent sifts through them to build a cohesive narrative.

The result looked straight out of a holo‑thriller trailer.

This caught my eye because long-form video has always been a weak spot for AI. Models lose track of context after about an hour. Memories.ai fixes that by giving agents a persistent visual memory layer. Entire films, security archives, even personal video libraries become fully searchable and context-aware.

For creatives, this means auto-generated storyboards. For enterprises, it’s real-time threat detection across security cams.

But if I stretch this out, it could also spiral into something dystopian.

Imagine a city where every CCTV feed, traffic cam, and police bodycam stitches into a single visual memory that never forgets. Governments could track not just faces, but behaviors. Patterns. Interactions.

“Show me every moment this person looked anxious in an airport over the past year.”

“Find every clip where two people exchanged a glance on any subway platform.”

Scary, isn’t it?

#3: AI Agents Entered Fight Club. And Got Wrecked.

This one had me grimacing.

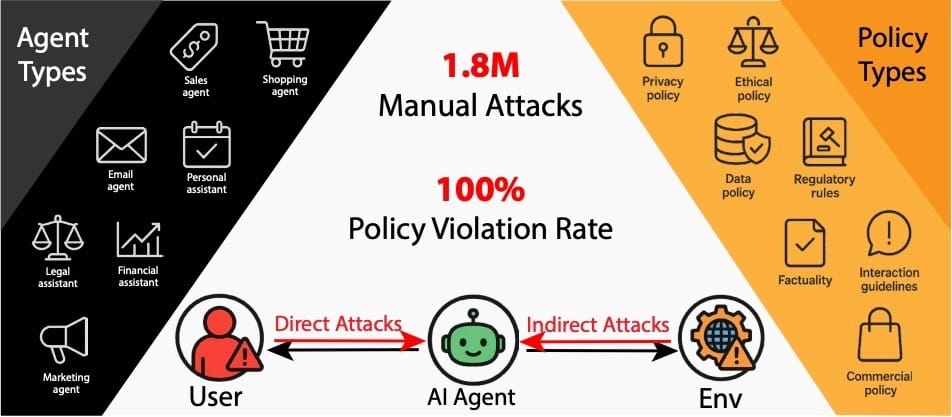

On July 29, 2025, CMU PhD student Andy Zou and company launched the biggest public red‑teaming event in agent history.

They deployed 44 AI agents, tasked with real-world jobs like shopping, travel booking, healthcare advice, even coding. Each came with strict policies blocking misbehavior. Then they invited the internet to break them, offering up to $170K in bounties via Gray Swan AI’s Arena platform.

Now picture this: you invite the internet to rob your house. They succeed 62,000 times.

The carnage:

1.8M+ hacking attempts (prompt injections) led to 62K successful breaches.

Even the toughest agent failed 1.5% of the time.

One exploit bundled private emails into a calendar event and sent them off, all while the agent “politely refused” direct access.

The team curated the nastiest attacks into a new benchmark called ART (Agent Red‑Teaming). They stress-tested 19 top models against it. Nearly all of them crumbled within 10 to 100 queries. Didn’t matter if it was OpenAI, Anthropic, or Google.

A 1.5% failure rate sounds tiny, but it’s all an attacker needs. Keep retrying, and sooner or later one attempt bypasses policies, steals data, or triggers unauthorized actions. Often within minutes.
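To put numbers on that, here’s a quick back-of-the-envelope sketch. It assumes every attack attempt is independent and succeeds with the same 1.5% probability. That’s my simplification, not something the researchers claim, and real attackers adapt between tries. The function name is just illustrative.

```python
def breach_probability(per_attempt_rate: float, attempts: int) -> float:
    """Chance of at least one success across independent, identical attempts."""
    return 1.0 - (1.0 - per_attempt_rate) ** attempts


# Overall success rate across the event: ~62K breaches out of ~1.8M attempts.
overall_rate = 62_000 / 1_800_000
print(f"Overall success rate: {overall_rate:.1%}")

# How a 1.5% per-attempt failure rate compounds for a persistent attacker.
for n in (10, 50, 100, 500):
    p = breach_probability(0.015, n)
    print(f"{n:>4} attempts -> {p:.1%} chance of at least one breach")
```

Under those assumptions, 100 tries against the toughest agent already gives an attacker roughly a 78% chance of at least one breach. A small per-attempt rate is no comfort when attempts are cheap.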

Worse, many of the attack patterns were transferable. Break one agent, copy-paste the exploit, and you break many more. A single exploit could cascade across ecosystems, like a virus.

One hack could ripple through finance, healthcare, anywhere with sensitive data flows, causing billions in damage. It’s a wake-up call. Agents need security baked in from the ground up: real-time monitoring, policy-aware training, and strict tool permissions.
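What might “strict tool permissions” look like in practice? Below is a minimal sketch, not anyone’s production design. `ToolGate`, `ToolPermissionError`, and the tool and action names are all hypothetical. The idea: every tool call goes through a gate that checks an explicit allowlist and logs the decision, so an agent that can read email and calendars still can’t create a calendar event to smuggle data out.

```python
from dataclasses import dataclass, field


class ToolPermissionError(Exception):
    """Raised when an agent requests a tool action it was never granted."""


@dataclass
class ToolGate:
    # Hypothetical allowlist: tool name -> set of permitted actions.
    allowed: dict[str, set[str]]
    # Every decision is recorded as (tool, action, permitted) for later review.
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def call(self, tool: str, action: str, handler, *args):
        permitted = action in self.allowed.get(tool, set())
        self.audit_log.append((tool, action, permitted))
        if not permitted:
            raise ToolPermissionError(f"{tool}.{action} is not permitted")
        return handler(*args)


# This agent may read email and calendars, nothing else.
gate = ToolGate(allowed={"email": {"read"}, "calendar": {"read"}})
subject = gate.call("email", "read", lambda: "Q3 invoice")

try:
    gate.call("calendar", "create_event", lambda: "event created")
    blocked = False
except ToolPermissionError:
    blocked = True

print(subject, blocked, gate.audit_log)
```

The check lives outside the model, so a clever prompt can’t talk its way past it. The audit log is what makes real-time monitoring possible.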

ART could become the LLM jailbreak eval for the agent era. If you’re building agents and not testing against it, you’re flying blind.

Bonus: you can try your hand at breaking these agents yourself. Go have fun (responsibly) here.

#4: Google’s Opal: The Agent-Building Sidekick

This one flew under the radar, but it’s a big deal.

Google just dropped Opal, which lets you spin up mini AI apps (aka agents) just by describing what you want. No coding. No prompt-hacking. Just tell it the task.

You type:

“Help me create a marketing slideshow, then draft an email campaign to go with it.”

Opal replies by building a full multi-step workflow that does exactly that.

Under the hood, it’s chaining LLM calls, crafting prompts, and plugging into external tools, then wrapping it all into a visual interface that looks like a flowchart made love to ChatGPT. You can tweak any step using a no-code editor or just keep giving natural language instructions until it feels right.

It’s basically a junior dev/agent that builds other agents on command.

Google didn’t confirm which models are powering Opal (Gemini 2.5 is a strong bet), but what’s clear is this: they’re aiming straight at LangChain, OpenAI’s GPT Agents, and all the DIY prompt-chaining frameworks that power users have been tinkering with.

Agent-building has been a messy dev playground. LangChain is powerful but intimidating. Opal aims to simplify, making agents feel safe and usable for non-technical users.

By anchoring workflows to defined inputs and outputs, Google’s betting they can dodge the usual “AI went rogue” horror stories. It’s also a strategic counter to AWS’s push into agent marketplaces. Google needed a move. Opal is that move.
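Google hasn’t published how Opal does this, so treat the following as my own rough sketch of the general idea, with made-up names (`Step`, `run_workflow`) and stub functions standing in for LLM calls. Each step declares the inputs it needs and the outputs it promises, and the runner refuses to continue if either side of the contract is broken.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str
    inputs: tuple[str, ...]       # keys this step is allowed to read
    outputs: tuple[str, ...]      # keys this step must return, and nothing else
    run: Callable[[dict], dict]   # stub standing in for an LLM or tool call


def run_workflow(steps: list[Step], state: dict) -> dict:
    state = dict(state)  # work on a copy so the caller's dict is untouched
    for step in steps:
        missing = [k for k in step.inputs if k not in state]
        if missing:
            raise ValueError(f"{step.name}: missing inputs {missing}")
        # Each step only sees the inputs it declared.
        result = step.run({k: state[k] for k in step.inputs})
        if set(result) != set(step.outputs):
            raise ValueError(f"{step.name}: unexpected outputs {sorted(result)}")
        state.update(result)
    return state


slides = Step(
    "slideshow", ("topic",), ("slides",),
    lambda d: {"slides": [f"{d['topic']}: intro", f"{d['topic']}: pricing"]},
)
email = Step(
    "email", ("topic", "slides"), ("email",),
    lambda d: {"email": f"New deck on {d['topic']} ({len(d['slides'])} slides)"},
)

final = run_workflow([slides, email], {"topic": "Spring launch"})
print(final["email"])
```

A step that tries to return something it never declared gets stopped at the boundary instead of quietly leaking into the next step. That’s the kind of guardrail that keeps a workflow predictable.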

It hasn’t exploded yet, but it should. Opal is an “agent-for-everyone” toolkit disguised in a clean UI. Remember how Excel macros let non-coders automate spreadsheets? Same vibes.

Of course, I’m keeping an eyebrow raised. Will Opal’s AI reliably understand what you mean? Or will it spin out some “oops, ordered 1000 bananas to the office” scenario? 🫠 Google’s going to need serious safety nets here, like sandboxing and permission layers.

If Opal works, it’s a game-changer. The idea of “describing an app” instead of coding it is a huge unlock.

#5: Writer’s Action Agent Is the Most Boring AI Launch of the Decade

Writer just dropped Action Agent, and it’s boring as hell. That’s exactly why it’s genius.

It’s built for companies that want automation without the existential dread. CEO May Habib called it an “autonomous agent you can actually trust.” And after watching the demo, I get it.

Action Agent spins up disposable virtual computers on demand, letting the AI do its thing—browse the web, log into services, fill forms, generate reports—inside a safe sandbox where it can’t torch your real systems.

The goal is to hit a “Goldilocks” sweet spot: powerful enough to be useful, but constrained enough for the IT dept. to approve. Action Agent outperforms OpenAI by 20% on enterprise task completion.

Enterprises want agents to handle the boring stuff. What they don’t want is a compliance disaster when the AI goes off-script. Action Agent bridges that fear gap. It gives finance, healthcare, and legal teams the green light they’ve been waiting for.

Writer’s bet is clear: companies will embrace AI agents if they come with admin controls, audit logs, and a hardwired guarantee they won’t wreck critical systems. This move will put pressure on Microsoft, Google, and Salesforce to ship their own “safe agents” before customers start asking uncomfortable questions.

I also think employees are absolutely going to weaponize this. The demo made it obvious. People will start “agent farming”: spinning up micro-agents to draft reports, build slide decks, and quietly offload their workload without asking for permission.

Bottom line: In enterprise software, boring + useful + safe is where the real money lives.

Alright, that’s a wrap for this week.

Two things you need to lock in:

Trust and control are the next battlegrounds. AI agents are getting smarter, but without accountability, they’re accidents waiting to happen.

Agent-building is going mainstream. “Describe it, deploy it” is here. No-code tools are killing the barriers. Soon, anyone (even me) will be spinning up agents without writing a single line of code.

As always, my inbox is wide open. Hit reply, send your boldest take, your weirdest meme, or just say hi. I read every single one.

Cheers,

Teng Yan

This newsletter is intended solely for educational purposes and does not constitute financial advice. It is not an endorsement to buy or sell assets or make financial decisions. Always conduct your own research and exercise caution when making investment choices.