Most of the best ideas in AI happen mid-conversation, when builders and researchers are thinking out loud.

I’m one of those annoying people who always have their AirPods in their ears and a podcast on. Driving, on walks, at the gym. I’ve learned that staying close to sharp thinkers is huge leverage, and what still surprises me is how much access we have now. 10 years ago, most of these conversations happened behind closed doors.

But the constraint is always time. There are simply too many good conversations and not enough hours in the day to listen to them all.

So, I built a solution.

Earlier this year, I deployed an AI workflow to track and summarize key takeaways from new episodes as they drop. I checked the database this morning: it has now processed over 1,436 podcasts!

You can access it too - all the podcast notes are there, updated daily, and I will keep them as a public good. Feel free to poke around. And if there’s a podcast I should be tracking and I’m not, tell me.

For this post, I went back through everything and pulled 40 of the most critical insights for investors, researchers, and builders to prepare for 2026.

Three dominant themes emerged:

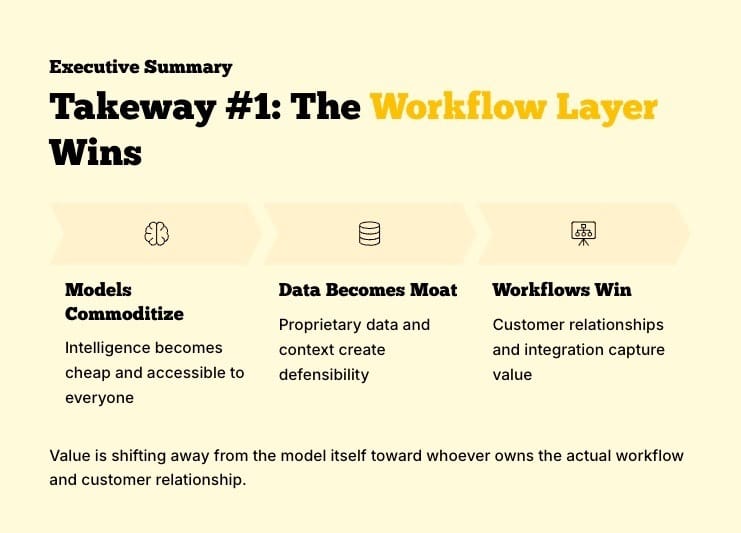

IQ is a commodity. Models are cheap. Proprietary data and workflows are the new moat.

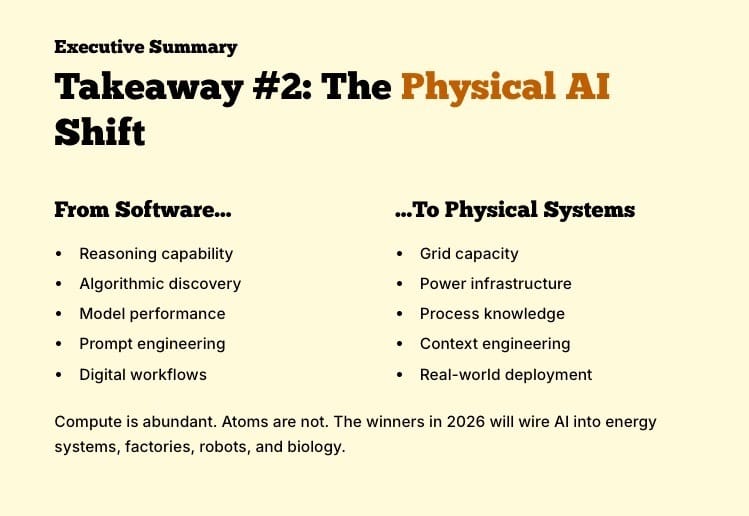

The fight moved to the physical world. Power, grids, and nuclear energy are back in focus.

Agents are turning AI into labor. We are moving from selling software "seats" to selling outcomes.

If you are building your thesis for 2026, this is the map. Happy holidays and I’ll see you in 2026!

Teng Yan

Theme #1: The Economics & Market Structure of AI

Teng’s Note: When ChatGPT first dropped, my mental model was quite simple: own the models, win the game. At the time, only a handful of teams could even build foundational models, so that made sense to me. Fast forward 2+ years, and I was clearly wrong. IQ is a commodity with near-zero marginal cost, with almost every major tech company (Microsoft aside) launching their own frontier models.

I can see the value shifting toward the Physical Layer (power and chips) and the Workflow Layer (whoever owns the actual customer relationship). If you are investing, I’d look for "Bottleneck Businesses": the ones owning the physical or data chokepoints that can't be solved with more code.

#1 Intelligence is Becoming Deflationary

DeepSeek demonstrated a 46x reduction in training costs and 90% lower inference costs. This creates massive deflationary pressure on "intelligence," transforming it into a commodity that will collapse software's cost structure.

Nvidia is winning right now, but 90% gross margins never last. Major customers (Amazon, Google, etc.) are building their own chips. At the same time, more efficient models like DeepSeek mean you need fewer GPUs to get the same work done. This challenges the "infinite demand" thesis.

Jeffrey Emanuel / Delphi Digital Podcast

#2 Power, Not Chips, is the New Bottleneck

We are entering a phase defined by physical realities rather than by algorithmic discovery alone. The main constraint in the AI race is now securing gigawatts of power and the physical infrastructure to deploy it.

Henry Ellenbogen / Invest Like the Best

#3 Strategic Leverage of Low Token Cost

Low token cost is a weapon. When it drops far enough, it lets companies squeeze everyone else. As new chips like Nvidia’s Blackwell push costs down, the advantage will be longer thinking. Models can afford to reason for more steps, run more checks, and handle messy, multi-stage work like sales.

Gavin Baker / Invest Like the Best

#4 The "Seats" Business Model is Dead

Early adopters of general-purpose AI in physical industries (robotics, logistics) are achieving 15-20% annual cost reductions. These advantages compound, creating "power law businesses" with cost structures that late-coming competitors cannot match, even with equal capital.

AI agents sell labor. When an agent does the work, charging “per user” no longer makes sense. Companies will have to price around outcomes, not logins. A lot of SaaS math will get rewritten here.

Henry Ellenbogen / Invest Like the Best

Teng’s Note: This must really hit a nerve, because my tweet on “results-as-a-service” for AI agents went viral last week.

#5 The “data wall” strategy will intensify as models commoditize

As models become easier to copy, data becomes the chokepoint. The most valuable companies will keep high-quality data within their walls while still squeezing value from models. Leverage moves from model vendors to data owners.

Bill Gurley & Brad Gerstner / Bg2 Pod

#6 Google’s Ecosystem Moat

Startups can move fast, but Google is a behemoth. They can wire Gemini into Docs, Drive, Search, and everything else people already use. Google’s edge is having a model that knows your files, your emails, and your workflow. Context beats cleverness.

Peter Diamandis / MOONSHOTS

#7 Sovereign AI Clouds

Governments are realizing they cannot rely on foreign AI infrastructure. This is driving a trend toward "Sovereign AI," where nations build domestic compute clusters and foundation models to ensure national security and cultural alignment.

Salim Ismail / MOONSHOTS

#8 Model commoditization shifts surplus toward workflow owners

As strong models become easily accessible, value moves away from the model itself and toward whoever owns the workflow. Customer relationships, proprietary data, and deep integration are what truly matter.

Sarah Guo / No Priors

#9 The Fast, Emergent Product Cycle

The current AI product cycle is the fastest in tech history because models keep showing new behaviors no one explicitly designed for. It feels less like software and more like biology. Capabilities show up, builders notice, and suddenly a dozen people are testing weird new uses at the same time.

Anish Acharya / The a16z Show

#10 Reinforce Business Models, Not Just Cost Cuts

AI products really take off when they add to how a company already makes money, not when they just cut costs. And when you capture outcome data end-to-end, the product gets better over time in ways competitors can’t easily copy.

Angela Strange / The a16z Show

#11 The “AI trade” is not one bet; it is a stack of second-order exposures

Instead of betting only on big tech, a more durable exposure can be through power, cooling, datacenter services, networking, and software layers that monetize every incremental unit of AI demand. These layers don’t move in lockstep, which matters if you care about risk and correlation.

Citrini / Forward Guidance

#12 Markets Misprice Persistent High Growth

Public markets often underprice companies with persistent high growth (>30%) because it is unnatural to model such long, multi-year trajectories. This can make high-growth AI companies less risky than low-growth buyouts at reasonable multiples.

David George / Invest Like the Best

Theme #2: The Future of LLMs & Reasoning

Teng’s Note: In the past, prompting felt like a parlor trick. You’d poke the model just right and hope it gave you a good answer. I kept lots of prompt templates + examples for tasks I wanted the AI to do.

That phase is ending. Models are getting so good that prompt engineering is fading and context engineering is taking over. The real work is deciding what information the system sees, how it’s structured, and how long the model is allowed to think.

#13 AGI as a Deployed Learner

Future AGI may not be a "finished mind" but rather a deployed learner that improves on the job. Superintelligence will look less like a breakthrough moment and more like steady compounding in production.

Ilya Sutskever / Dwarkesh Podcast

#14 Continual Learning as the Primary Driver

Similarly, Dwarkesh believes that further leaps in AI capability will be driven by continual learning rather than a software singularity. Achieving human-level on-the-job learning may take another 5 to 10 years of incremental progress.

Dwarkesh Patel / Dwarkesh Podcast

#15 Solving Coding is the Path to AGI

Coding is the ideal testbed for AGI because it provides a verifiable, closed-loop environment where an AI can write, execute, and correct its own output. Solving the reasoning required for complex software engineering is a proxy for solving general reasoning.

Misha Asin / Latent Space

#16 The "Folklore" Bottleneck

AI struggles with "folklore knowledge". That’s expertise known to practitioners but never written down in the literature. This implicit knowledge remains a major barrier for AI in specialized scientific fields.

Subbarao Kambhampati / Machine Learning Street Talk

#17 Agentic Objectives are Driving the Next Performance Leap

The recent jumps in models like GPT-5 aren’t only about more data. Training now rewards completing multi-step tasks, not single replies. That pushes models to plan, execute, and recover when things go wrong. The result feels less like a chatbot and more like a junior employee.

Isa Fulford & Christina Kim / a16z

#18 World Modeling Proven via Othello

Research into simple games like Othello proves that LLMs build internal representations (models) of the board state and rules, suggesting they truly "understand" the world they are simulating.

Nicholas Carlini / Machine Learning Street Talk

#19 Synthetic data only helps if you can measure when it is lying

Synthetic data helps coverage, but it also sneaks in model bias. If you can’t measure when it drifts from reality, it quietly poisons your training set. The real advantage is knowing exactly when that data helps and when it hurts.

#20 Surveillance, Not Sentience, is the Main Risk

The most immediate risk from AI is Orwellian surveillance and censorship rather than an AGI takeover. Protecting privacy and preventing the state-level abuse of AI are more urgent concerns than sentience.

David Sacks / All-In

#21 AI Is Ushering in an Entirely New Economic Paradigm

AI is a deflationary force so powerful it makes traditional economic metrics (like CPI/GDP) obsolete. In an economy where intelligence cost trends toward zero, the only inflation will be in assets that AI cannot reproduce (energy, land, trust).

Jordi Visser / Forward Guidance

Theme #3: AI Agents

Teng’s Note: I’m certain 2026 will be the year of the agent. 2025 was a bit overhyped, but we’re finally seeing agents show up in actual products. I expect to see new use cases pop up across all industries.

Agents will make mistakes. Of course they will; like us, they’re not perfect. There’s going to be a "Managerial Inversion" where the cost of labor (agents) is near $0, but the cost of liability (agent error) is infinite. Building systems that can audit and check them is going to be crucial.

#22 Productivity Boost Without New Hires

Salesforce pulled off something most companies only dream about. By rolling out internal AI tools, they increased developer productivity by 30% in a year and moved 9,000 support engineers into new roles, rather than hiring more people.

Marc Benioff / MOONSHOTS

Teng’s Note: I hear Uber is training drivers to be data labellers as self-driving cars start becoming ubiquitous.

#23 The Death of the IDE

By 2026, the role of a software engineer will transition from writing syntax in an IDE to managing a fleet of AI agents. The primary skill will shift from coding to architecture and agent orchestration.

#24 Agents as "Self-Improving Recipe Books"

Advanced agents now operate like self-improving recipes. You seed them with initial instructions, and they try variations, score the results, and rewrite their own prompts to "hill climb" toward better outcomes without human intervention.

Natalie Serrino / Latent Space
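To make the "self-improving recipe" loop concrete, here is a minimal sketch of hill climbing over a prompt. Everything in it is an assumption for illustration: `CANDIDATE_HINTS`, `score`, and `mutate` are hypothetical stand-ins, and a real agent would score variations by running actual tasks rather than counting strings.

```python
import random

# Hypothetical pool of instruction snippets the agent may try adding.
CANDIDATE_HINTS = [
    "Cite your sources.",
    "Think step by step.",
    "Answer in under 100 words.",
    "Double-check the arithmetic.",
]


def score(prompt: str) -> int:
    """Toy stand-in for a real evaluation: counts the useful hints present."""
    useful = ("Think step by step.", "Double-check the arithmetic.")
    return sum(hint in prompt for hint in useful)


def mutate(prompt: str, rng: random.Random) -> str:
    """Propose a variation by appending one randomly chosen hint."""
    return prompt + " " + rng.choice(CANDIDATE_HINTS)


def hill_climb(seed_prompt: str, steps: int = 50, seed: int = 0) -> str:
    """Try variations, score them, and keep only strict improvements."""
    rng = random.Random(seed)
    best, best_score = seed_prompt, score(seed_prompt)
    for _ in range(steps):
        candidate = mutate(best, rng)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best


improved = hill_climb("Solve the task.")
print(score("Solve the task."), "->", score(improved))
```

Because only strict improvements are kept, hints that don't raise the score never accumulate in the prompt. The hard part in practice is the scoring function, not the loop.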

#25 AI Agents as High-Value Attack Vectors

Once agents can run code and move money, they become serious attack surfaces. Guardrails based on simple permissions stop working. Security now has to reason about what an agent is trying to do, not only what it’s allowed to touch.

Sunil Pai and Rita Kozlov / Latent Space

#26 Distribution-First Strategy for Agents

Companies like Cognition (Devin) proved a new go-to-market strategy: generate massive viral attention with a "translucent overlay" UX that feels like magic, acquire users rapidly ($1M ARR in 10 weeks), and use that data moat to iterate faster than incumbents.

Natalie Serrino / Latent Space

#27 “No-training” policies become a product moat

Harvey’s (Legal AI) GTM lesson is that enterprise adoption moves fastest when you can make a credible promise that customer data will not be used for model training. It changes procurement timelines and lowers the odds that the rollout dies in security review.

Aatish Nayak / a16z

#28 Unlocking Trapped Institutional Memory

AI research tools can unlock decades of "institutional memory" trapped in old digital documents. One biotech customer avoided eight months of redundant mouse experiments after an AI tool found relevant prior internal studies.

Sajith Wickramasekara / No Priors

Theme #4: Research, Benchmarks & Innovation

#29 Benchmarks are Saturating

Most AI benchmarks no longer work. Models hit the ceiling so fast that static tests stop telling us who’s better. What we need are moving targets: stitched-together tests and statistical views, like Epoch AI’s S-curves, that show progress over time instead of one-off wins. Otherwise, we’re reading noise as signal.

#30 2-3x Efficiency Gain Per Year

To hit the same capability level, we now need 2–3x less compute every year. That gain is coming from algorithms, not chips. Stack that on top of hardware improvements, and the pace of progress effectively doubles. This is why forecasts keep missing on the low side.
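A quick way to see why the gains stack: algorithmic and hardware improvements multiply rather than add. The sketch below uses the 2x-per-year algorithmic figure from above; the 2x-per-year hardware figure is purely an assumption chosen to mirror the "pace doubles" framing, not a measured number.

```python
def effective_gain(algo_gain: float, hardware_gain: float, years: int) -> float:
    """Compound multiplier on effective compute after `years` years."""
    return (algo_gain * hardware_gain) ** years


# Algorithms alone at 2x per year (hardware held flat at 1x).
algo_only = effective_gain(2.0, 1.0, 3)

# Assumed: hardware also improves 2x per year, so each year delivers 4x.
combined = effective_gain(2.0, 2.0, 3)

print(f"3 years, algorithms only: {algo_only:.0f}x")
print(f"3 years, algorithms + hardware: {combined:.0f}x")
```

When the two yearly gains are equal, the exponent of growth doubles, which is why a forecast that tracks only one of them falls behind quickly.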

#31 Model Stealing is Trivial

It is shockingly easy to "steal" a proprietary model by querying its API and training a smaller model on the outputs. This effectively commoditizes proprietary weights. "Moats" based solely on model performance are weaker than investors think.

Nicholas Carlini / Machine Learning Street Talk
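The "query the API, train on the outputs" idea can be shown with a deliberately tiny toy. Here `teacher_api` is a hypothetical stand-in for a proprietary model (just a hidden linear function), and the "student" is a one-variable least-squares fit. Copying a real model is far messier than this; the sketch only shows why outputs alone leak what the model has learned.

```python
def teacher_api(x: float) -> float:
    """Hypothetical black box: callers see outputs, never the parameters."""
    return 3.0 * x + 2.0


def fit_student(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares for a one-variable linear student model."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Step 1: query the black box on inputs we choose.
queries = [float(i) for i in range(10)]
labels = [teacher_api(x) for x in queries]

# Step 2: train the student purely on the (query, output) pairs.
slope, intercept = fit_student(queries, labels)
print(f"student learned: y = {slope:.2f} * x + {intercept:.2f}")
```

Ten queries are enough to recover this toy teacher because it is linear; the economics for a real model come down to how many queries you can afford.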

#32 The Shift from Prompting to Proactive Teammates

The provisional "prompt box" interface will soon give way to proactive AI teammates that observe work and propose actions. Users will spend more time approving smart suggestions than crafting prompts themselves.

Marc Andrusko / The a16z Show

#33 Pushing Compute to the Limits of Physics

We are running into a thermodynamic wall. “Beff Jezos” proposes a shift to "thermodynamic computing". Instead of fighting noise and entropy, this approach uses them. Hardware is designed to compute probabilistically, not deterministically, which fits how modern AI actually works.

Guillaume Verdon / Machine Learning Street Talk

Theme #5: Specific Applications & Verticals

#34 What I Learned at the Frontier of Tech in 2025

The next phase is Physical AI. We are moving from software that thinks to systems that act. The constraint is no longer reasoning capability. It is grid capacity, process knowledge, and the friction of the real world. Compute is abundant. Atoms are not. The winners in 2026 will be the companies that can wire AI into energy systems, factories, robots, and biology.

Azeem Azhar / Exponential View

#35 Gaming - Infinite Narratives

AI lets games write themselves as you play. Instead of fixed story branches, quests and dialogue shift based on your choices in real time. That makes worlds feel alive and replayable without studios hand-authoring every path.

Tin Nguyen / Delphi Digital

#36 Material Science Revolution

AI models like Microsoft’s MatterGen are doing for materials what AlphaFold did for biology. We are entering an era of "generative matter," where AI discovers new materials for batteries and solar panels tailored to specific properties.

Peter Diamandis / MOONSHOTS

#37 Bottom-Up EdTech Adoption

Tools like Magic School are seeing explosive growth (5M users) not through top-down district sales, but via bottom-up adoption by teachers. When AI delivers such immediate ROI on administrative drudgery, users will adopt it without permission.

Adeel Khan / Change Order Podcast

#38 Nuclear Energy Renaissance

The energy demands of AI data centers are single-handedly reviving the nuclear industry. Tech giants are now looking at Small Modular Reactors (SMRs) as the only viable solution to provide carbon-free, baseload power for training clusters.

Marc Benioff / MOONSHOTS

#39 Decentralized Intelligence Markets

Networks like Bittensor are creating "free markets for intelligence," where different AI models compete to provide the best answers and are rewarded in tokens. This incentivizes a diverse ecosystem of specialized models rather than a single monolithic winner.

Brody / Ventura Labs

Teng’s Note: I’ve been watching Bittensor closely, writing about it here. I expect to see some really cool startups emerge from it.

#40 ZK Proofs for Content Authenticity

As AI generates infinite content, proving human origin becomes critical. Zero-Knowledge (ZK) proofs are becoming fast enough to work in real time, enabling content to verify its source without revealing identity. That creates a trust layer that the internet has been missing.

And one final one from me: we’re going to see more consolidation and M&A activity with AI agent companies in 2026. As I was writing this, news just broke that Meta has acquired Manus for $2B+ (a company I wrote about a few weeks ago).

Which of these 40 shifts are you betting on for 2026?

I’m looking at AI agents and data networks right now, and what they mean for the Machine Economy. I’ve been studying this for almost 2 years now.

If one insight here changed how you think, hit reply and tell me which one!

Cheers,

Teng Yan

PS. If you’re building or investing in AI agents, this is the stuff people usually learn the hard way. Forward this to the one person on your team who should be thinking about this.

I also write a newsletter on decentralized AI and robotics at Chainofthought.xyz.