In the mountains of Tokushima, a man built a paradise. A cedar inn beside a quiet river, where the walls smell of cypress and the menu follows the rhythm of the seasons.

His name is Takeshi Kanzaki, and the inn is called WEEK Kamiyama. It’s the kind of place off-the-beaten-path travelers will want to find.

About a year ago, I first saw it while researching places to stay in Japan that hadn’t been colonized by hotel chains. It almost feels like something you can only find by accident and talk about for the rest of your life.

I would love to “work and stay” here

But there was a problem. The inn’s website was ten years old. Pop-ups covered the screen at the worst moments. The calendar made no sense. The booking form failed. The inn wasn’t listed on Expedia or Booking.com, and getting it there meant learning an English-language inventory system designed for chain hotels, not wooden guesthouses in Tokushima.

So the website stayed broken, and the inn stayed empty. For a long while. Still, Takeshi kept the lights on. He had built this refuge for people who needed silence and green hills.

Then, one day, messages started arriving from Korea, Germany, and Canada. Reservations showed up without anyone touching the site. Travelers were booking their rooms. Not because the website changed. Because something else changed around it.

And that something was… well, you might have guessed: TinyFish AI.

TinyFish had scraped WEEK Kamiyama’s public inventory, translated the structure, and relayed it to Google Hotels. Its agents read the page the way you and I would. They saw that “川側の部屋” meant riverside room. They recognized a form from the 1990s as real availability. What couldn’t be rewritten was now reinterpreted.

I opened this essay with Takeshi’s story because I wanted to show how fragile our assumptions have become. The web contains beautiful, useful things that no longer connect. Japan alone has over 45,000 small hospitality properties locked out of global distribution. Multiply that by every industry where services sit behind outdated forms, and the true scale of the problem comes into focus.

Takeshi’s small inn is a microcosm of something much larger: the web was built for humans, but businesses run on machines. And the mismatch is hurting more than just guesthouses.

The same problem shows up in Fortune 500 companies, which rely on workflows that pull data from thousands of public sites. One layout change, and the entire system fails. So companies fall back on people clicking, copying, and rechecking what machines were supposed to handle.

As consumers, we’ve developed a sort of learned helplessness. We’ve learned to accept the friction. Booking a room shouldn’t take 15 minutes, yet it often does. Comparing prices means opening tab after tab. We’ve normalized the idea that the web is supposed to be difficult.

TinyFish is going to change that.

How? By building agents that use the internet like a real person would, but across thousands of flows at once. They follow human instructions, such as “find the blue button,” while working through slow pages, random pop-ups, and misplaced labels.

The Automation Gap

The internet has evolved like a living system. Its massive growth followed the same math that explains how neurons fire in our brains: irregular patterns that somehow produce coherent thought. In neuroscience, this is called the edge of chaos, a zone between total order and total randomness where complex behavior emerges.

The web hit its edge of chaos around 2008. Chrome launched, and the browser wars escalated.

I’m old enough to remember the pre-apps web in the late 1990s. Early websites were static. You could scrape them with five lines of code. Then came e-commerce, SaaS, and social media. The web splintered into a million interfaces for a million contexts. Content moved behind logins. Pages personalized themselves. What you see on Amazon isn’t what I see, and the web is designed to keep it that way.

In a way, Internet Darwinism changed how we surf online.

Automation Challenge for Enterprises

Web automation is no longer optional. In the U.S., usage jumped from 3.7% in 2023 to 9.7% in 2025. Eighty-five percent of companies now use agents in at least one workflow.

But with infinite scroll, anti-bot rules, and elements that only load after clicks, the transaction cost of pulling structured data from the web often exceeds the value of the data itself.

The chart below shows the growing gap between web complexity and legacy automation capabilities. The widening gap is the automation gap: modern websites are outpacing what older tools can handle.

Blue line = median kilobytes of JavaScript carried by a desktop webpage, rising from ~150 KB to ~580 KB as sites get heavier and more interactive. Red line = capability of legacy automation that depends on fixed page layouts, staying nearly flat.

Enterprises feel this friction every day. That’s because their workflows depend on reviewing thousands of external websites. Competitor pricing. Supplier inventory. Regulatory filings. All these tasks are high-frequency, continuous operations.

And each site adds risk. For them, the surface area for failure is huge. When automation fails, companies either give up the data or send humans to do the job by hand. Both cost time. Both drag down margins.

Humans built a $7 trillion digital economy on an interface that behaves as if it obeys the second law of thermodynamics: its disorder only grows, and every day it takes more effort to extract the same amount of information.

All in all, the web is messy. The needs are massive. And this is where TinyFish begins its work.

Consumer Agents vs Enterprise Agents

Okay, but don’t we already have consumer browser agents like ChatGPT’s agent mode, Manus AI, and Genspark? So why don’t enterprises just use them at work?

Note: We recently wrote a deep dive on Manus AI.

It’s a fair question. Especially when these tools feel like magic in demos. But that magic thins fast under enterprise load.

Consumer agents are built for vibes. Enterprise agents are built for guarantees.

Consumer agents behave like Daniel Kahneman’s System 1: fast, intuitive, probabilistic. They don’t need to be right, just convincing. I call this satisficing theater. The goal is to feel correct to the human on the other end. When it goes off the rails, you rewrite the prompt and move on.

Enterprise systems don’t have that luxury. They live and die by predictable outcomes. When a workflow runs a thousand times a day, a 1% hallucination rate is a disaster. No CIO builds on top of dice rolls, especially when the dice are hidden behind an API.
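To make the 1% figure concrete, assume (for simplicity) that each of the 1,000 daily runs fails independently with probability 0.01. The arithmetic then works out as follows:

```latex
\begin{aligned}
\mathbb{E}[\text{failures per day}] &= 1000 \times 0.01 = 10 \\
\Pr[\text{at least one failure per day}] &= 1 - 0.99^{1000} \approx 1 - 4.3 \times 10^{-5} \approx 0.99996
\end{aligned}
```

In other words, a failure on any given day is close to certain; the only open question is which run it hits.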

There are three major reasons consumer agents aren’t suited to enterprises.

#1: The Medieval Governor Problem

Most consumer agents will attempt a task, skip a step, or misinterpret context, and there’s no visibility into what went wrong. You just see the final answer.

Enterprises can’t operate on faith. They face the same problem medieval kings faced with distant governors: How do you trust an agent acting in your name when you can’t watch them?

Enterprise AI needs logs, metrics, traces, screenshots, and clear error states. It needs to say why it failed and hand control back to a human when confidence dips below a threshold. If you can’t explain it, you can’t audit it. And if you can’t audit it, you can’t deploy it.
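The requirement to hand control back to a human can be pictured as a simple gate in front of every step. This is a minimal illustration, not TinyFish’s implementation: `StepResult`, `AuditTrail`, `accept_or_escalate`, and the 0.9 threshold are all hypothetical, and the sketch assumes each step reports its own confidence score.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical cutoff; a real deployment would tune this per workflow.
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class StepResult:
    step: str
    value: Optional[str]   # None means the step produced nothing
    confidence: float      # the agent's own confidence in the value, 0..1


@dataclass
class AuditTrail:
    entries: List[str] = field(default_factory=list)

    def record(self, message: str) -> None:
        self.entries.append(message)


def accept_or_escalate(result: StepResult, trail: AuditTrail,
                       threshold: float = CONFIDENCE_THRESHOLD) -> str:
    """Accept a step's output only if it clears the threshold; otherwise hand it to a human.

    Every decision, including the reason for an escalation, is written to the audit trail.
    """
    if result.value is None:
        trail.record(f"{result.step}: no value produced; escalating to a human")
        return "escalated"
    if result.confidence < threshold:
        trail.record(f"{result.step}: confidence {result.confidence:.2f} "
                     f"below {threshold:.2f}; escalating to a human")
        return "escalated"
    trail.record(f"{result.step}: accepted {result.value!r} "
                 f"(confidence {result.confidence:.2f})")
    return "accepted"
```

The audit trail is the point: every accepted value and every escalation leaves a readable reason behind, which is what makes the agent auditable.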

#2: Security as a Gate

Consumer agents ship data to third-party servers. That’s fine when you’re generating a travel plan, but not when you’re processing payroll data or patient records.

Enterprises need data vaulting, short-lived credentials, per-tenant isolation, regional data residency, and redaction baked in. Anything less is a non-starter.

Even security leaders are starting to say it out loud. Nikesh Arora put it bluntly:

“I think unless there are controls built into agentic browsers, which are oriented around credentials and enterprise security, they’re not going to be allowed in enterprises in 24 months”

Consumer agents can’t cross that line without changing their architecture.

#3: The Scale Wall

Consumer agents run inside a single browser tab. They’re clever, but small. Ask them to automate across hundreds of sites, and they crumble.

Enterprise agents need to run at planetary scale: thousands of concurrent sessions, each with deterministic outcomes and strong isolation. I think of the consumer agent as a sculptor’s chisel and the enterprise agent as a CNC mill. Both shape stone, but only one can run 24/7 in a factory.

“We want to sell to Expedia, not to Jodi.”

TinyFish’s goal is bigger than helping one travel planner. It wants to give Expedia the power to simulate 100,000 Jodis booking vacations simultaneously from 50 different countries.

TTT: The TinyFish Trio

You can usually tell what a startup will become by watching its founders together in a room. TinyFish’s three co-founders, Sudheesh Nair, Shuhao Zhang, and Keith Zhai, don’t seem like they should occupy the same room. And yet, when they do, the chemistry happens naturally.

They started TinyFish in 2024, and if you trace their professional DNA, you realise it’s a strange mix.

TinyFish co-founders Keith Zhai and Shuhao Zhang, and CEO Sudheesh Nair

CEO Sudheesh Nair looks like the part he’s played before. Former CEO of ThoughtSpot and President of Nutanix. He’s taken enterprise infrastructure through hypergrowth twice. He knows what it takes to turn complex technology into something the world takes for granted. You can almost see the glint of a man who’s directing the sequel to a film he already made a hit.

CTO Shuhao Zhang is the engineer who thinks in systems. At Meta, he managed the mobile infrastructure powering Facebook, Instagram, and Messenger, where reliability is non-negotiable: if it breaks, headlines follow. Before that, he grew Edison Software’s engineering team from one person to a hundred. He builds teams like he builds systems: for throughput and trust.

And then comes COO Keith Zhai: two decades as a journalist covering power across Beijing and boardrooms for Reuters, Bloomberg, and the Wall Street Journal. A Pulitzer finalist who once specialized in reading the tea leaves of Chinese politics. Journalists at that level are trained to find patterns before the world notices them. The work also taught him something most technologists never learn: the hardest problems are a natural filter. If something is painful to do, it’s because it matters, and the payoff is real.

Together, the trio creates a productive kind of tension. The operator who knows how to scale. The engineer who knows how to build. The journalist who thinks about what’s coming next. Three very different people, one clear mission: building the agentic enterprise.

Smart money also seems to like this chemistry. TinyFish raised $47 million in Series A funding led by ICONIQ Capital, with participation from USVP, Mango Capital, MongoDB Ventures, ASG, and Sandberg Bernthal Venture Partners.

The Tech: TinyFish in a Nutshell

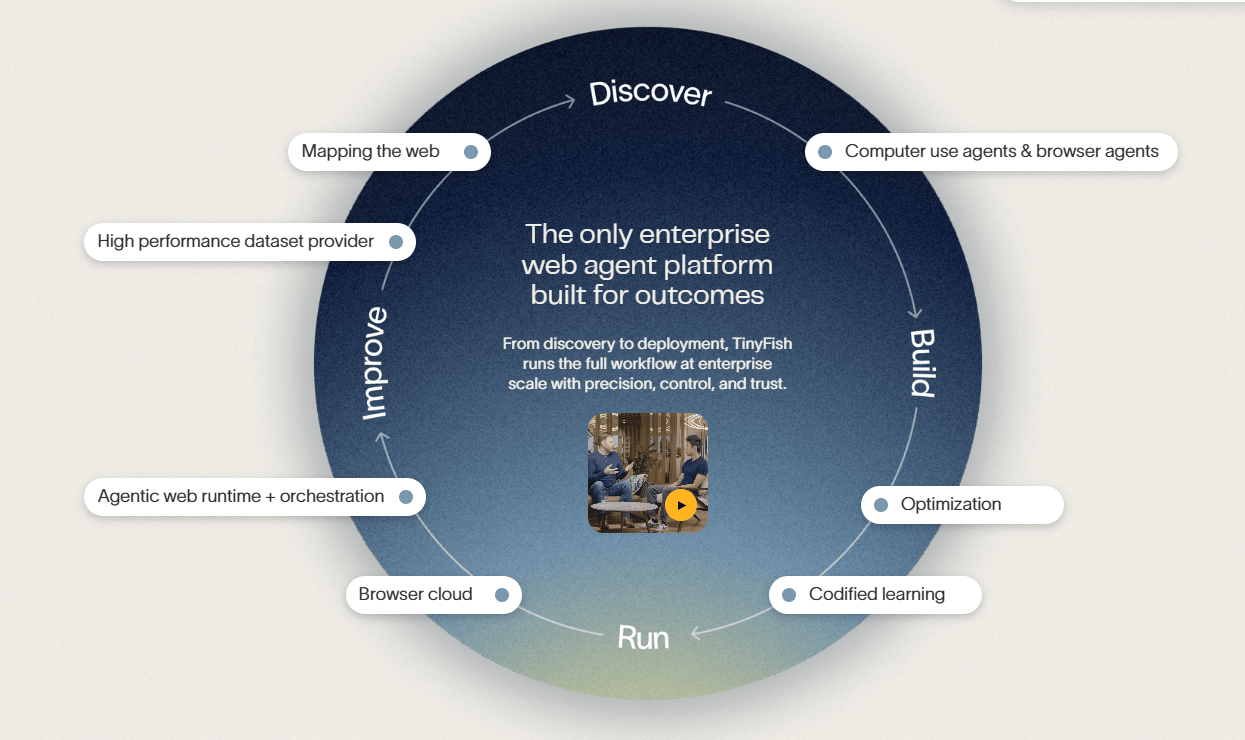

At a high level, TinyFish provides an Enterprise Web Agent platform that plans and manages web-based workflows at scale.

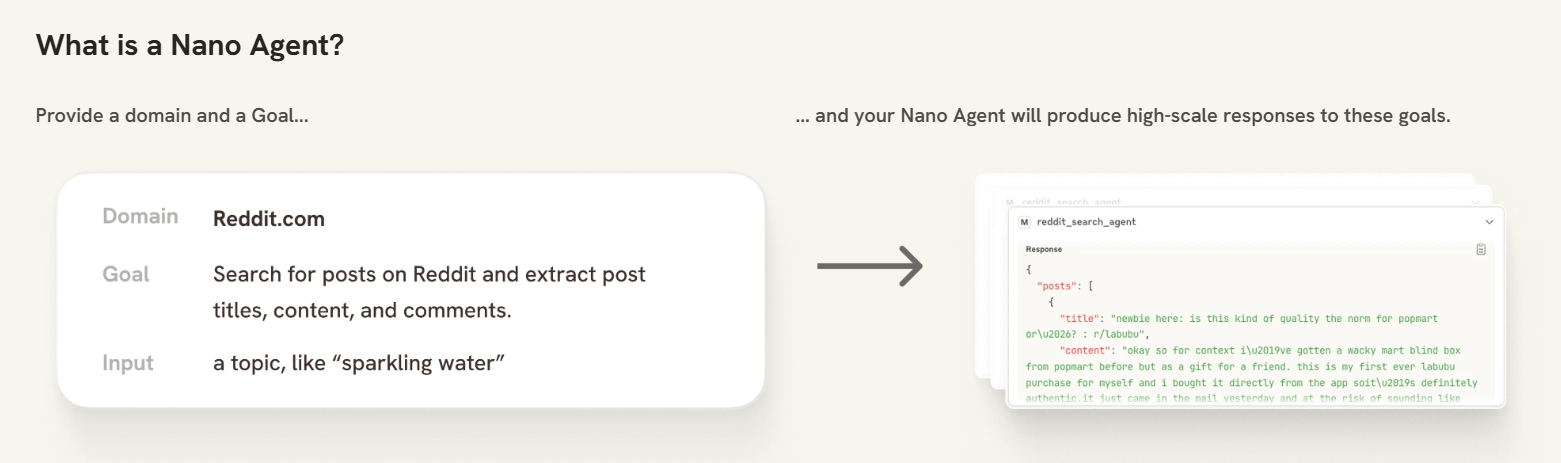

Within the platform, they have two products: i) MINO (nano-agents) and ii) AgentQL.

Product 1: MINO

Rather than having a single script handle an entire enterprise workflow, TinyFish builds an army of specialists called nano-agents, each trained to do one thing well. One might know how to handle a date picker. Another logs in with stored credentials. A third extracts pricing tables and so on.

Nano Agent

The magic is in how they work together. Nano-agents can pass information to each other, chaining micro-skills into full workflows.

For example, a swarm of nano-agents could flag a likely bankruptcy 90-120 days before public disclosure. Nano-agents can monitor signals of internal distress, such as drops in patent filings, increased executive LinkedIn activity, negative employee reviews, or the subletting of headquarters. When these indicators appear simultaneously, the system could provide early warnings for competitor analysis, acquisition opportunities, or supplier risk. This would let investment firms anticipate market movements and identify distressed assets months ahead of others.
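The “appear simultaneously” rule can be illustrated with a deliberately simple co-occurrence check. This is a hypothetical sketch, not a prediction model and not how TinyFish scores risk: the signal names and the threshold of three concurrent signals are assumptions, and each `Signal` stands in for the output of one monitoring nano-agent.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Signal:
    name: str
    fired: bool  # output of one hypothetical monitoring nano-agent


def distress_warning(signals: List[Signal], min_concurrent: int = 3) -> Tuple[bool, List[str]]:
    """Raise a warning only when several independent distress signals fire together.

    A single signal (say, a burst of executive LinkedIn activity) is noisy on its own;
    requiring co-occurrence is what turns weak signals into an early warning.
    """
    fired = [s.name for s in signals if s.fired]
    return len(fired) >= min_concurrent, fired
```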

Breaking big, complex flows into nano-agents also localizes failures. If one part of a workflow changes (say, the checkout button locator on a site), only the nano-agent responsible for that part needs to be retrained or adjusted, not the entire workflow.
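Both ideas, chaining and failure localization, fit in a short sketch under stated assumptions: each nano-agent is modeled as a plain function that reads a shared context and returns new keys, and the agent names (`login`, `pick_date`, `extract_prices`, `find_checkout`) are hypothetical. Real nano-agents drive a browser; these only pass dictionaries around.

```python
from typing import Callable, Dict, List, Optional, Tuple

Context = Dict[str, object]
NanoAgent = Callable[[Context], Context]


def run_chain(agents: List[Tuple[str, NanoAgent]], ctx: Context) -> Tuple[Context, Optional[str]]:
    """Run nano-agents in order, merging each one's output into the shared context.

    On failure, stop and report which agent broke, so only that agent needs fixing.
    """
    for name, agent in agents:
        try:
            ctx = {**ctx, **agent(ctx)}
        except Exception as exc:
            return ctx, f"{name}: {exc}"
    return ctx, None


# Hypothetical single-skill agents.
def login(ctx: Context) -> Context:
    return {"session": f"token-for-{ctx['user']}"}


def pick_date(ctx: Context) -> Context:
    return {"date": "2025-04-10"}


def extract_prices(ctx: Context) -> Context:
    if "session" not in ctx:
        raise PermissionError("not logged in")
    return {"prices": [120, 95, 180]}


def find_checkout(ctx: Context) -> Context:
    # Simulates the site renaming its checkout button.
    raise LookupError("checkout button locator no longer matches")
```

When `find_checkout` breaks, the error names that agent alone; `login` and `extract_prices` stay untouched and reusable.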

TinyFish AI Architecture

Codified Learning

AI is probabilistic. It guesses. That’s fine when writing a tweet. But you don’t want the same model interpreting the same input field differently on two tries when you are parsing thousands of loan forms.

TinyFish’s solution is codified learning, which turns one-time AI insights into repeatable code.

This is how it works. The first time a nano-agent sees a new page (say, Wells Fargo’s loan portal), it sends in the AI like a scout. The model identifies key elements, such as name fields, submit buttons, and confirmation messages. But once it figures out the pattern, that knowledge gets locked in as code. So, the next time it processes a loan application on the same site, it skips the AI guessing phase and uses the code path directly.

This creates an unusual economic inversion. Traditional systems pay for inference every time they run. TinyFish pays once to learn, then scales for free. Many repeated sub-tasks become essentially free after the first few runs. The more it sees, the less it spends.

Over time, this creates a knowledge base, a growing “map of the web” where each site’s interaction model is learned.
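The pay-once-then-reuse economics can be shown with a memoization sketch. It is an assumption-laden simplification: it caches one selector string per site and element, whereas TinyFish’s codified learning presumably captures richer behavior. `CodifiedLocator` and `fake_model_discover` are hypothetical names, and the fake model simply derives a selector from the element name.

```python
from typing import Callable, Dict, Tuple


class CodifiedLocator:
    """Memoize what an expensive 'scout' discovers, per (site, element).

    The first lookup pays for discovery; every later lookup reuses the stored
    selector. `forget` drops an entry when a site changes, forcing one re-learn.
    """

    def __init__(self, discover: Callable[[str, str], str]):
        self._discover = discover                        # stands in for the AI model
        self._learned: Dict[Tuple[str, str], str] = {}   # the growing "map of the web"
        self.model_calls = 0

    def locate(self, site: str, element: str) -> str:
        key = (site, element)
        if key not in self._learned:
            self.model_calls += 1
            self._learned[key] = self._discover(site, element)
        return self._learned[key]

    def forget(self, site: str, element: str) -> None:
        self._learned.pop((site, element), None)


def fake_model_discover(site: str, element: str) -> str:
    # Hypothetical stand-in: a real model would inspect the page and return a selector.
    return "#" + element.replace(" ", "-")
```

Looking up the same element a thousand times triggers a single model call; only a site change (simulated by `forget`) pays for discovery again.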

With codified learning, TinyFish is not focusing on how to make websites readable, but how to read them anyway.

How does it actually work?

TinyFish turns various web tasks into modular units called “nodes.” Each node handles one specific action. Together, they form a “graph”, a connected network of steps, like snapping LEGO bricks into a working machine.
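The node-and-graph idea maps naturally onto a dependency graph. The sketch below uses Python’s standard-library `graphlib` (3.9+) to run nodes in dependency order; the node names and the hotel-search workflow are hypothetical, and each node is a toy function that updates a shared state dictionary rather than driving a real browser.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set, Tuple

Node = Callable[[dict], dict]


def run_graph(nodes: Dict[str, Node], deps: Dict[str, Set[str]], state: dict) -> Tuple[dict, List[str]]:
    """Execute nodes in an order that respects their dependencies.

    `deps` maps each node to the nodes that must finish before it runs.
    """
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        state = {**state, **nodes[name](state)}
    return state, order


# Hypothetical hotel-search workflow built from single-purpose nodes.
nodes: Dict[str, Node] = {
    "open_search": lambda s: {"page": "search"},
    "set_dates": lambda s: {"dates": ("2025-04-10", "2025-04-12")},
    "set_guests": lambda s: {"guests": 2},
    "submit": lambda s: {"page": "results", "query": (s["dates"], s["guests"])},
    "read_results": lambda s: {"rooms": ["riverside room"] if s["page"] == "results" else []},
}
deps: Dict[str, Set[str]] = {
    "open_search": set(),
    "set_dates": {"open_search"},
    "set_guests": {"open_search"},
    "submit": {"set_dates", "set_guests"},
    "read_results": {"submit"},
}
```

Because ordering comes from the declared dependencies, adding or swapping a node means editing one entry in `nodes` and `deps`, not rewriting a script.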

That structure has three big advantages.

Customization

TinyFish agents follow instructions from a central “Agent Orchestrator,” which decides who does what, when, and how often. You can add customizations, such as request-rate constraints, so agents avoid hitting rate limits or getting flagged by bot detectors.

This way, agents can behave politely. It looks like the beginnings of a new protocol layer between agents and websites, one where showing restraint earns you access. It’s what I’d call bot diplomacy. For enterprises, this kind of customization matters: you can’t risk being banned mid-operation.

And when the polite approach hits a snag or slows down, the orchestrator reassigns the task or queues it for later.
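One way to picture the politeness rule and its requeue behavior is a per-site cooldown. This is a hypothetical sketch, not TinyFish’s orchestrator: `PoliteScheduler`, the fixed `min_interval`, and the caller-supplied clock (`now`, which keeps the example deterministic) are all assumptions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Tuple


@dataclass
class PoliteScheduler:
    """Hypothetical politeness policy: at most one request per site every `min_interval` seconds."""
    min_interval: float
    last_hit: Dict[str, float] = field(default_factory=dict)
    queue: Deque[Tuple[str, str]] = field(default_factory=deque)

    def submit(self, site: str, task: str) -> None:
        self.queue.append((site, task))

    def tick(self, now: float) -> List[str]:
        """Dispatch queued tasks whose site has cooled down; requeue the rest for later."""
        dispatched: List[str] = []
        deferred: Deque[Tuple[str, str]] = deque()
        while self.queue:
            site, task = self.queue.popleft()
            last = self.last_hit.get(site)
            if last is None or now - last >= self.min_interval:
                self.last_hit[site] = now
                dispatched.append(task)
            else:
                deferred.append((site, task))
        self.queue = deferred
        return dispatched
```

With a two-second interval, two tasks for the same site are spread across ticks while a task for a different site goes out immediately.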

Easy to Debug

Each node emits human-readable logs. Not “clicked div#button123” but “added item to cart.” That makes it legible across engineering, ops, and compliance teams.

When something breaks, TinyFish tells you exactly what went wrong. That is possible because each node is typed, scoped, and self-contained.

If a checkout fails, the system doesn’t say “Step 47 failed.” It says, “Couldn’t find the discount code field.” That difference means everything when you’re debugging production workflows at scale.
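The difference between “Step 47 failed” and a readable explanation comes down to each node owning its own error message. A minimal sketch, assuming pages are modeled as plain dictionaries; `NodeError`, `add_to_cart`, `apply_discount`, and `run_nodes` are hypothetical names chosen to mirror the examples in the text.

```python
from typing import Callable, Dict, List, Optional, Tuple

Page = Dict[str, object]  # toy stand-in for a rendered page


class NodeError(Exception):
    """A node failure phrased in business terms, tagged with the node that raised it."""

    def __init__(self, node: str, message: str):
        super().__init__(f"[{node}] {message}")
        self.node = node


def add_to_cart(page: Page, log: List[str]) -> Page:
    if "add_to_cart_button" not in page:
        raise NodeError("add_to_cart", "Couldn't find the add-to-cart button")
    log.append("Added item to cart")  # human-readable, not "clicked div#button123"
    return {**page, "cart_count": int(page.get("cart_count", 0)) + 1}


def apply_discount(page: Page, log: List[str]) -> Page:
    if "discount_code_field" not in page:
        raise NodeError("apply_discount", "Couldn't find the discount code field")
    log.append("Applied discount code")
    return {**page, "discount_applied": True}


def run_nodes(page: Page, steps: List[Callable[[Page, List[str]], Page]],
              log: List[str]) -> Tuple[Page, Optional[str]]:
    """Run nodes in order; on failure return the failing node's own explanation."""
    for step in steps:
        try:
            page = step(page, log)
        except NodeError as err:
            return page, str(err)
    return page, None
```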

Verification

Every workflow includes explicit postconditions that must be true for the task to count as successful.

For example, during an online purchase, after adding an item to the cart, the system checks "Did the cart count increase by 1?" After applying a shipping address, it verifies "Does the shipping cost now appear on the total?" After completing payment, it confirms "Is there an order confirmation number on the screen?"

This maps cleanly onto enterprise promises. A company can commit in its SLA that outcome X will be met, and the system itself checks for that outcome on every run, effectively enforcing the SLA in real time.

If the final condition isn’t met, the agent doesn’t pretend everything’s fine. It retries. It reroutes. But it will never present a wrong result as right.
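A minimal sketch of postcondition checking with retries, assuming an action can be re-run and returns a snapshot of the relevant page state. `run_with_postcondition` and the flaky `add_to_cart` are hypothetical; the checks mirror the cart and confirmation examples in the text.

```python
from typing import Callable, Dict


def run_with_postcondition(action: Callable[[], Dict], check: Callable[[Dict], bool],
                           description: str, max_attempts: int = 3) -> Dict:
    """Run `action` until `check` holds on its result, up to `max_attempts` times.

    The result is only reported as successful when the postcondition is verified;
    otherwise the caller gets an explicit failure, never an unverified result.
    """
    for attempt in range(1, max_attempts + 1):
        state = action()
        if check(state):
            return {"ok": True, "attempts": attempt, "state": state}
    return {"ok": False, "attempts": max_attempts,
            "error": f"postcondition not met: {description}"}


# Hypothetical flaky "add to cart": the first click silently does nothing.
cart = {"count": 0}
clicks = {"n": 0}


def add_to_cart() -> Dict:
    clicks["n"] += 1
    if clicks["n"] > 1:
        cart["count"] += 1
    return dict(cart)


before = cart["count"]
result = run_with_postcondition(add_to_cart, lambda s: s["count"] == before + 1,
                                "cart count increased by 1")
```

One design caveat: blindly repeating a non-idempotent step (a payment, say) can apply it twice, so a production system would re-read state before each retry rather than simply re-running the action.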

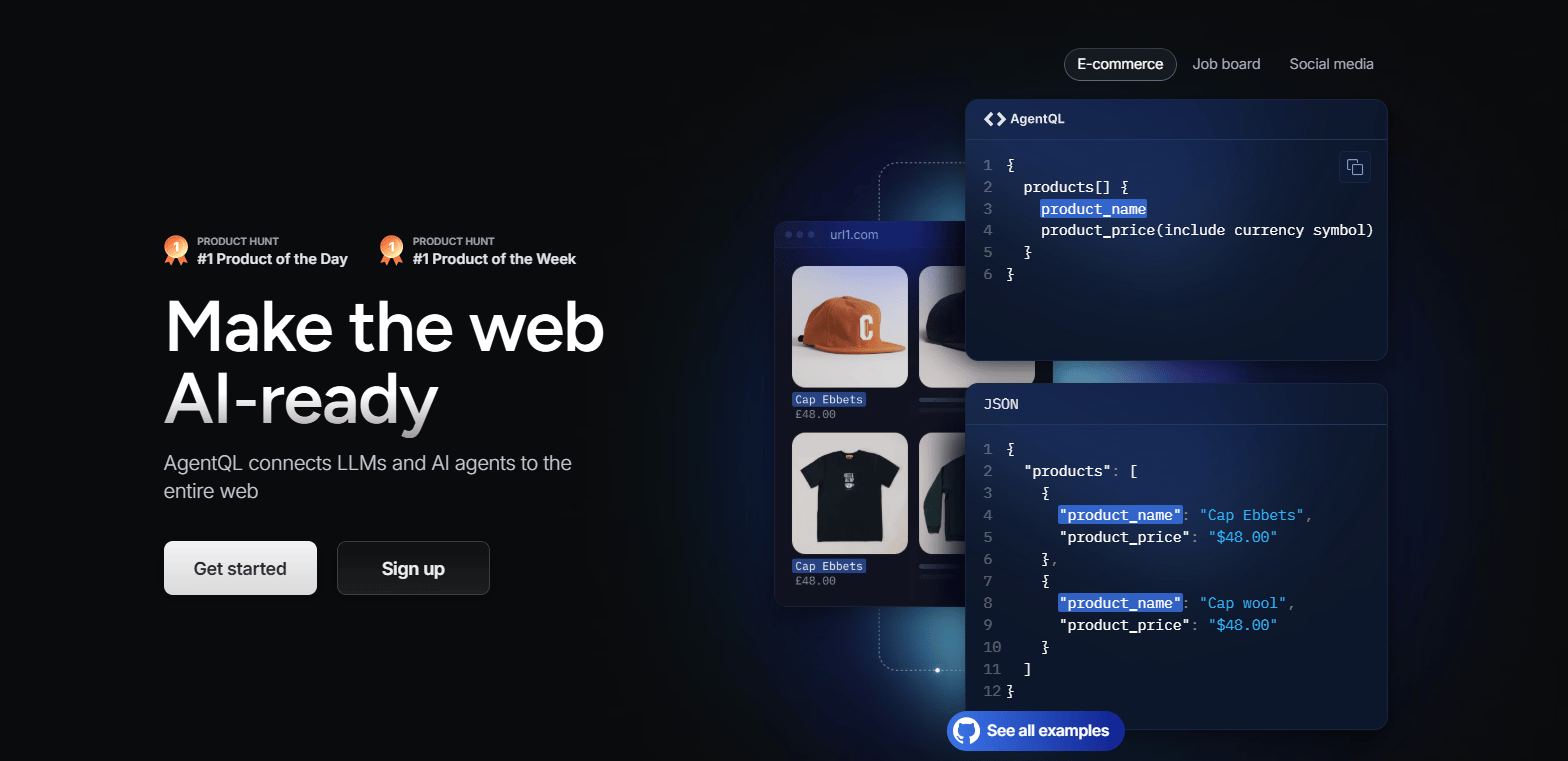

Product 2: AgentQL

Think of AgentQL like SQL for the web. You ask the web a question, and it works out the buttons, the fields, and the logic behind the scenes. It is an AI-powered query language that allows developers and AI agents to interact with web elements using natural language queries, replacing traditional methods like XPath and CSS selectors.

Agent QL

For example, an AgentQL query might be “find a hotel under $250 with free cancellation and return the name and price.”

AgentQL figures out which elements on the page correspond to the query, such as price filters, and performs the actions accordingly. It is less a language than a translator for intent.
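To illustrate the “what, not how” idea behind that hotel query, here is a hypothetical sketch in plain Python. It is not AgentQL syntax or its SDK: it assumes the listings have already been extracted into records (the hard part AgentQL handles), and `Listing` and `query` are illustrative names. The caller only states conditions and the fields to return.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass
class Listing:
    name: str
    price_usd: float
    free_cancellation: bool


def query(listings: List[Listing], *, max_price: float, require_free_cancellation: bool,
          fields: Sequence[str]) -> List[Dict[str, object]]:
    """State the conditions and the fields to return; the function decides how to filter."""
    matches = [
        item for item in listings
        if item.price_usd < max_price
        and (item.free_cancellation or not require_free_cancellation)
    ]
    # Project each match down to just the requested fields.
    return [{f: getattr(item, f) for f in fields} for item in matches]
```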

Why do traditional parsers fall apart?

Basic Parsers

Many devs use a combination of Python libraries like Beautiful Soup and Requests to pull information from websites. This duo works well when the page is plain, static HTML.

The main weakness here is that websites are constantly updated. If a developer targets an element by id ‘checkout-button-v2’ and the site renames it to ‘checkout-button-v3’, the scraper stops working, which forces developers into a miserable loop of constant fixes every time a site gets redesigned.

Nobody wants a robot that can only find the light switch if it’s exactly where it was yesterday. These tools also don’t come with stealth or throttle control, so if you run them at scale, you get flagged and blocked fast.
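The brittleness is easy to reproduce. The sketch below uses Python’s standard-library `html.parser` instead of Beautiful Soup so it runs without dependencies; the two page snippets are hypothetical. The lookup works on the old markup and silently returns nothing once the id is renamed.

```python
from html.parser import HTMLParser
from typing import Optional


class IdFinder(HTMLParser):
    """Find the first tag carrying an exact id, the way a brittle scraper does."""

    def __init__(self, target_id: str):
        super().__init__()
        self.target_id = target_id
        self.found: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if self.found is None and dict(attrs).get("id") == self.target_id:
            self.found = tag


def find_by_id(html: str, target_id: str) -> Optional[str]:
    parser = IdFinder(target_id)
    parser.feed(html)
    return parser.found


OLD_PAGE = '<button id="checkout-button-v2">Checkout</button>'
NEW_PAGE = '<button id="checkout-button-v3">Checkout</button>'  # same button, renamed id
```

Nothing about the button changed for a human visitor, yet the scraper’s only anchor is gone.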

Browser Controllers

Other powerful tools like Selenium and Playwright can click buttons, fill out forms, and navigate pages, but they require a developer to write out every single step.

For example, a script would say "click the text box for destination, type Paris, click the calendar icon, find the button for the 10th, click it" and so on. This creates a lot of work. And scripts are difficult to maintain, as any small change to the website's interface can break the entire sequence.

A new generation of tools like Stagehand uses AI to understand natural language commands. However, there is a fundamental difference in how they operate. Stagehand relies on LLMs for every action it performs, which can make the process slow, expensive, and sometimes unpredictable.

How is AgentQL different from existing solutions?

AgentQL addresses these problems by combining intelligence with reliability. It lets developers describe what they want in plain English rather than in selectors and step-by-step scripts. It can do that because it reads a webpage for its meaning.

Discovery to Deployment Loop

It understands that "Add to Cart" and "Buy Now" play the same role in a commercial context. If a form changes "Zip Code" to "Postal Code," an AgentQL query for zip_code_input still finds the right field because it recognizes synonyms and context.

This semantic understanding also makes queries portable. A single AgentQL query can extract product listings from both Amazon’s and Walmart’s sites, whereas with conventional methods you’d have to write two separate scripts.
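A crude approximation of this behavior is a synonym table plus label normalization. It is only a stand-in: the text describes AgentQL as reading context with AI, whereas the `SYNONYMS` table, `normalize`, and `resolve` below are hypothetical and only know the pairs listed.

```python
import re
from typing import Dict, List, Optional, Set

# Hypothetical synonym table: a crude stand-in for semantic understanding.
SYNONYMS: Dict[str, Set[str]] = {
    "zip_code_input": {"zip code", "postal code", "postcode", "zip"},
    "add_to_cart_button": {"add to cart", "buy now", "add to bag"},
}


def normalize(label: str) -> str:
    """Lowercase and strip punctuation such as ':' or '*' from a visible label."""
    return re.sub(r"[^a-z0-9 ]+", "", label.lower()).strip()


def resolve(field: str, labels: List[str]) -> Optional[str]:
    """Return the first on-page label that means the same thing as `field`."""
    wanted = SYNONYMS.get(field, {field.replace("_", " ")})
    for label in labels:
        if normalize(label) in wanted:
            return label
    return None
```

The same query name, `zip_code_input`, resolves on both the old and the renamed form, though a fixed table covers far less variation than a model reading the page.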

AgentQL is also declarative, which means you simply state your final goal like "find the highest-rated laptop under $1000" and it will determine the actions on its own. This makes automation faster and reduces the maintenance needed when websites change.

Like other AI-based tools such as Stagehand, AgentQL uses AI, but in the “codified learning” way described above: the model does the discovery once, and the result is reused as code.

For developers, TinyFish provides Python and JavaScript SDKs so that AgentQL queries can be embedded in code. It has also built a Chrome extension, the AgentQL Debugger, which lets developers try out their queries visually on a live page.

With both products, the ultimate vision, as TinyFish puts it, is to treat the World Wide Web as a World-Wide Database (WWDB): a queryable database where you declare what you want and the runtime handles the clicks.

This could alter the economics of large-scale web automation by making maintenance overhead grow logarithmically, rather than linearly, with the number of sites covered.

The Business: Total Addressable Market

TinyFish AI sits atop a market convergence that is one of the most lucrative opportunities in modern enterprise technology.

Timeline showing TinyFish's breakthrough positioning (approximation) as the culmination of web automation evolution.

New Market: The API-less web

TinyFish is surfacing a new market: the API-less economy.

It’s like GPS before Uber: the data was there, but the value only emerged once it became actionable. Nano-agents do the same for a different terrain: the vast sprawl of websites with no public APIs.

Of the 1.27 billion websites that exist, only a small fraction have APIs. The data is trapped behind buttons, forms, and CAPTCHA puzzles. This is what I call the Dark Web Data Layer: a massive, under-tapped reserve of information that is readable by eyes but unreadable by code.

Automating knowledge work could be worth $5 to $7 trillion, but that estimate assumes clean, accessible inputs. Most of the web isn’t built for bots, so if you want to automate at scale across tens of thousands of unpredictable sites, the cost explodes.

This gives rise to a shadow TAM made up of questions that are currently too expensive to ask. Codified learning and nano-agents change that: those questions can become routine.

Can I track product placements across 10,000 e-commerce sites daily? Yes.

Can I verify compliance across hundreds of distributor portals? Yes.

Can I treat the messy, API-less web as a structured, searchable database? Yes.

The net effect is that new demand appears out of thin air because the ability to ask a new type of question has been created. This means that with nano-agents, companies can treat the web like an extended database even if there’s no API.

The TAM: Enterprise Automation & Alpha Feeds

Enterprise automation is already on track to become a $30 billion industry by the early 2030s. More than half of all enterprises already use Robotic Process Automation (RPA) to manage internal workflows, like updating spreadsheets, moving files, and triggering actions across internal tools. But RPA was built for what’s inside the business.

The web is outside, though.

Finance teams want to mine competitor portals. Retailers need to watch rival pricing. Travel companies scrape availability. Logistics teams check delivery statuses buried in supplier dashboards. These workflows live outside the enterprise’s internal systems. The need is real; they’re just expensive and messy to automate with today’s tools.

And it’s getting harder. Cloudflare now blocks AI crawlers by default. Websites are tightening bot defenses. Pay-per-crawl is becoming the norm. Enterprises need web automation that’s trackable, governable, and plays by the rules.

So the demand is there.

TinyFish could drive a significant reallocation of corporate spending. Money a company used to spend on third-party data might instead go to running its own agents via TinyFish. New budgets might come from departments that didn’t automate before.

It also cuts across labor: market research firms, competitive intelligence teams, outsourced analysts. Basically anyone whose job is to collect and synthesize web data becomes a candidate for augmentation or displacement.

And then there’s the high end of the market: alpha buyers.

Data products for Alpha buyers

In the alpha-data world, you are paying not just for raw numbers, but for uniqueness, freshness, and actionability. A well-constructed feed from TinyFish might show, at 01:00 GMT, that 73% of the riverside rooms at a remote Japanese inn are booked, while a traditional index won’t capture that until weeks later (if at all).

Hedge funds are comfortable paying several hundred thousand dollars per year for that edge. A 2019 survey found that more than half of funds using alternative data spend at least US$100,000 per year on it.

TinyFish can position itself as the “web as database” engine, fetching data from complex external web flows and converting it into enterprise-grade feeds.

If alternative-data buyers pay millions per dataset and the total market is at least a few billion dollars (with budgets still climbing), then targeting a 5-10% share of an $11 billion pool is an ambitious but understandable goal.
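For scale, here is the arithmetic behind that target, using the $11 billion pool cited in the paragraph:

```latex
\begin{aligned}
0.05 \times \$11\,\text{B} &= \$0.55\,\text{B} \\
0.10 \times \$11\,\text{B} &= \$1.1\,\text{B}
\end{aligned}
```

That is, a 5-10% share works out to roughly $550 million to $1.1 billion of the pool.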

For the buyer, the value is crystal clear. Rather than piecing together human-scraped reports, clumsy scripts, or one-time projects, they get clean, licensed, hourly-refreshed data that they can plug into models and act on with confidence.

Customers Today

Any tech demo can look good in a slide deck. But buyers need to see deployment at scale on high-volume, real-world tasks.

With more than 30M live agents, TinyFish seems to have passed the acid test. Here are some of its customers:

ClassPass

ClassPass lets users book fitness classes, gym time, and wellness appointments at various locations. It runs on data such as schedules, class slots, and partner studio availability, all of which needs to be collected, validated, and updated across hundreds of different systems, some of which don’t talk to each other.

TinyFish agents automate these external workflows. They extract structured data from sites that were never meant to be parsed and keep it fresh.

“Navigating the AI wave to find tools that solve real problems… it’s clear [TinyFish is] building exactly that: next-level AI agent solutions that deliver real results.”

JobRight

JobRight.ai is a startup that helps job seekers auto-fill job applications on company career sites. The pain point is that every company’s application form is different, making it hard to have one bot that fits all.

TinyFish agents handled it end-to-end: detecting fields, uploading resumes, and navigating pop-ups. As a result, users spent 80 percent less time on applications and doubled their interview rates.

DoorDash

In on-demand delivery, seconds matter. Pricing, wait times, and competitor promotions change constantly. DoorDash wanted a continuous intelligence layer to monitor the battlefield.

TinyFish delivered that. Agents tracked ride quotes, driver counts, and promo offers across services, updating every few minutes. Over 10 million data points are collected annually in a single region. This gave the company a high-resolution view of supply-demand dynamics. And the power to adjust pricing, deploy incentives, or shift driver supply easily.

Whether it’s thousands of hotels, hundreds of job sites, or millions of price points, the volume is high. The success stories suggest TinyFish met those scale requirements with measurable outcomes, which itself is a strong proof point.

Competitors

When I dug into TinyFish’s space, I discovered three meaningful peer clusters (and many smaller players lurking). TinyFish’s advantage becomes clearer once you map out what they’re optimizing for and what they’re avoiding.

Adept AI

Adept has built a very ambitious agent model (ACT‑1) that can drive a browser, click, type, scroll, and orchestrate software tools.

They position themselves as general-purpose, pushing toward “digital tool use” and perhaps longer-term AGI. Because they cast a very wide net, their enterprise focus on high-volume web workflows appears less defined. In contrast, TinyFish is building a narrower but deeper stack: workflow reliability, compliance, traceability, and large-scale deployment across many sites.

Skyvern

Skyvern is an open-source, low-code platform for browser automation that uses LLMs and computer vision and supports CAPTCHAs, 2FA, and multi-page workflows.

It’s compelling, especially for tech‑savvy teams or smaller operations. But because it is open, generalised, and less enterprise‑governed, it may hit a ceiling when enterprises demand full audit‑trails and SLA guarantees. In other words, a strong contender for developer teams, but less proven for mission‑critical automation at scale.

CloudCruise

CloudCruise is a developer-centric platform that turns browser workflows into programmable APIs. It offers smart maintenance agents that can auto-repair broken flows, built-in tools to evade bot detection, and tight API integrations for custom automation stacks. It’s clean, flexible, and clearly designed with builders in mind.

But it’s still early. Enterprise deployments are limited. And while CloudCruise is great for spinning up quick automations, it’s less optimized for what happens after: the long tail of maintaining, governing, and scaling those workflows across thousands of brittle sites.

In a nutshell, TinyFish’s current edge is specialization. It is narrowly focused on making the entire web accessible for business operations and has built a unique stack to do so.

And the competition isn’t always obvious. Sometimes it’s not a billion-dollar startup. Sometimes it’s just a random YouTube video.

A young developer recently launched Pars, a tool that lets anyone extract data from any website by turning it into a reusable API. It’s fast, slick, and growing. Today, Pars lacks the infrastructure that TinyFish has. But one well-funded round could shift that quickly.

Does TinyFish Have a Moat?

People keep asking about moats in AI, meaning what will prevent others from doing the same thing. I see every nano-agent as a little artifact. TinyFish is hinting at a moat built from these artifacts: the accumulated code fragments its system gathers over time.

A competitor doesn’t just need to create similar tech. It must replicate the thousands of client-specific learning cycles that have been solidified in code. That is a time tax money cannot skip. Brian Arthur’s theory of increasing returns explains why this matters. In traditional economics, returns diminish. In information economies, returns increase: each learned pattern makes the next one easier to learn.

At the same time, TinyFish’s cost to automate a new website that shares a structural layout with one already in its library falls with each site it learns. This is less a traditional software moat and more an operational one built on collected intelligence.

TL;DR: Replicating TinyFish’s tech is easy to promise in a keynote, but very hard to pull off in a quarter.

Scale adds a second layer. TinyFish’s agents will generate large amounts of data on how websites behave, such as how often they change, which common patterns exist, etc.

If one believes in network effects (ah, the magic of tech), then:

more clients → more sites covered → better library of nano-agents → better/faster service for new clients and more difficult for others to match.

The Future: Agent-Defined Economy

Something I’ve been thinking about a lot is what the future with AI looks like. I agree with the prevailing view that the internet will be dominated by AI agents quite soon.

Here’s one way I see it:

Agents compress information latency → visibility increases → companies react faster → intent becomes broadcastable → intent becomes tradable.

The Agent-Defined Economy, as I like to call it, begins with information latency arbitrage. The game has always rewarded speed. Just ask any HFT firm. However, their edge is in closed systems.

The bigger play is in open terrain: think of the lag between a product recall posted on a regional distributor site and the moment it comes up on an earnings call. A retail brand won’t just match competitors’ prices. Its agents will monitor Vietnamese supplier sites, spot shipping delays and shortages, and adjust prices before the competitor even knows there’s a problem.

TinyFish compresses that delay from weeks to seconds. A furniture brand sees lumber shortages at Canadian mills before commodity traders do. A pharma company spots trial issues on hospital portals before the filing hits the SEC.

Whoever sees first, wins. The edge in business and investments will go to whoever runs the densest, most reliable mesh of agents across the public web.

Inverse Transparency Markets

For decades, companies paid to control what news leaked to the press and social media. But when millions of agents scan the web nonstop, corporate opacity becomes impossible.

The counter-response is to pay to manage what agents see.

Want your product launch to hit every retail agent at 9:00 AM sharp? That’s a service. Want to delay the signal from your supply chain stress until your own agents can respond? Also a service.

This could be the start of Inverse Transparency Markets: not hiding information, but sequencing it.

It also means UX changes, not for users but for bots. Agent UX will become a thing. Brands will design their sites for agent comprehension: making them legible to machines that make decisions in milliseconds.

If you follow this to its logical endpoint, it leads to a Protocol for Intent. We’ve standardized how we move data (HTTP) and send messages (SMTP), but there’s no native protocol for action. TinyFish is edging us toward that layer.

Instead of navigating dropdowns and filling out forms, a company could broadcast: “Need 500 hotel rooms across three cities. Prioritize team satisfaction and venue proximity.” A swarm of enterprise agents, some from TinyFish, others from competing platforms, could parse that request, bid on fulfillment, and execute the workflow end to end.
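No standard intent protocol exists today, so the following is purely a hypothetical sketch of what a structured version of that hotel-room request, plus a bid-selection rule, might look like. `Intent`, `Bid`, `choose_bid`, the priority keys, the placeholder city names, and all numbers are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Intent:
    need: str
    quantity: int
    cities: Tuple[str, ...]
    priorities: Tuple[str, ...]  # most important first


@dataclass(frozen=True)
class Bid:
    agent: str
    rooms_offered: int
    price_usd: float
    satisfaction: float   # predicted team satisfaction, 0..1
    proximity_km: float   # average distance to the venue


# How each named priority ranks a bid (lower sort key = better).
PRIORITY_KEYS: Dict[str, Callable[[Bid], float]] = {
    "team_satisfaction": lambda b: -b.satisfaction,
    "venue_proximity": lambda b: b.proximity_km,
    "price": lambda b: b.price_usd,
}


def choose_bid(intent: Intent, bids: List[Bid]) -> Optional[Bid]:
    """Drop bids that can't cover the request, then rank the rest by the stated priorities."""
    eligible = [b for b in bids if b.rooms_offered >= intent.quantity]
    if not eligible:
        return None
    return min(eligible, key=lambda b: tuple(PRIORITY_KEYS[p](b) for p in intent.priorities))
```

Once the request is data rather than a sequence of form fills, any competing agent can read it, bid, and be ranked by the same rule.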

And once intent becomes structured, it becomes tradable.

That’s the unlock. Imagine an Intent Futures Market, where companies could sell futures on what they plan to do. A retailer planning a massive Black Friday sale could sell “intent futures” to suppliers, who would then ramp up production based on intended rather than actual orders. It’s public, tradable, and conditional, unlike today’s opaque forecasts and late, binding orders.

Conclusion

For most of modern business history, the assumption was simple: if you wanted to understand the world, you had to go out and capture it. Companies built operations around that belief. They hired teams to cross borders, parse context, and turn knots into real decisions. It sort of worked, but only barely.

TinyFish is changing how companies relate to information entirely.

It starts by meeting the web where it is. Not as a machine-readable grid, but as a reflection of human quirks and context. The Japanese innkeeper doesn’t think in RESTful APIs. He thinks in seasons, in returning guests, in the way sunlight lands on a breakfast tray. For software to be useful here, it has to understand his world.

That’s what nano-agents do. They read pages the way a person would. They extract meaning. And once software can interpret the entire web, including its implied intent, the idea of web “information asymmetry” starts to break down.

At this point, the translation burden switches sides. After decades of people learning to speak to machines, the machines are finally learning to listen. TinyFish is doing a kind of computational anthropology: understanding the analog ways we humans express intent online.

Takeshi’s little inn is now fully booked most days and thriving in ways he once thought impossible, because TinyFish bridged the gap between his website and the world.

Soon, you’ll only need to build the things that matter to you. Let agents handle the rest.

Thanks for reading,

Teng Yan & Ravi

If you enjoyed this, you’ll probably like the rest of what we do:

Chainofthought.xyz: Weekly Decentralized AI newsletter & deep dives

Agents.chainofthought.xyz: Weekly AI Agent newsletter & deep dives (non-crypto)

Our Decentralized AI canon 2025: our open library and industry reports

Prefer watching? Tune in on YouTube. You can also find me on X.